Summary

What do summer blockbusters have to do with network operations? As utilization explodes and legacy tools stagnate, keeping a network secure and performant can feel like a struggle against evil forces. In this post we look at network operations as a hero’s journey, complete with the traditional three acts that shape most gripping tales. Can networks be rescued from the dangers and drudgery of archaic tools? Bring popcorn…

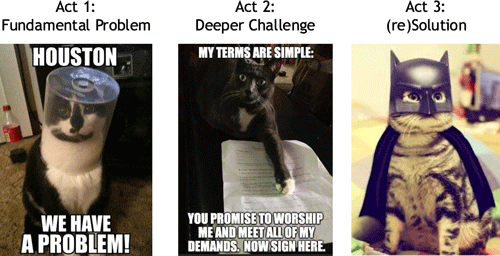

A Summer Blockbuster in Three Acts

Act 1: The Network Traffic Visibility Problem

Networks are delivery systems, like FedEx. What would happen if FedEx didn’t have any package tracking? In a word, chaos. Sadly, most large data networks operate in a similar vacuum of visibility.

- Is it the network?

- What happened after deploying that new app?

- Are we under attack or did we shoot ourselves in the foot?

- How do we efficiently plan and invest in the network?

- How do we start to automate?

Why is it still such a challenge to get actionable information about our networks? Because “package tracking” in a large network is a big data problem, and traditional network management tools weren’t built for that volume of data. As a point of comparison, FedEx and UPS together ship about 20 million packages per day, with an average delivery time of about one day. A large network can easily “ship” nearly 300 billion “packages” (aka traffic flows) per day with an average delivery time of 10 milliseconds. Tracking all those flows is like trying to drink from a fire hose: huge volume at high velocity.
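To make the fire hose concrete, here is a quick back-of-the-envelope calculation using only the round figures quoted above. The variable names are ours, and the numbers are the approximations from the text, not measurements.

```python
# Back-of-the-envelope comparison using the round figures quoted in the text.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

packages_per_day = 20_000_000      # FedEx + UPS combined (approximate, from the text)
flows_per_day = 300_000_000_000    # a large network (approximate, from the text)

# Convert daily totals into average per-second rates.
packages_per_sec = packages_per_day / SECONDS_PER_DAY
flows_per_sec = flows_per_day / SECONDS_PER_DAY

# How many times more "packages" the network handles than the shippers do.
volume_ratio = flows_per_day / packages_per_day

print(f"Shippers: about {packages_per_sec:,.0f} packages per second")
print(f"Network:  about {flows_per_sec:,.0f} flows per second")
print(f"Ratio:    {volume_ratio:,.0f}x the daily volume")
```

On those figures the network averages roughly 3.5 million flows per second, about 15,000 times the shippers' combined daily volume.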

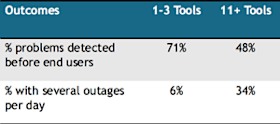

The Red Herring: More Tools & Screens

Act 2: The Deeper Challenge

At this point in the movie, you can’t have all doom and gloom, so there is a ray of light. The good news is that most networks are already generating huge volumes of valuable data that can be used to answer many critical questions. Of course, that in turn brings up the deeper challenge: how on earth can you actually use that massive set of data?

- Data unification: the ability to fuse varied types of data (traffic flow records, BGP, performance metrics, geolocation, custom tags, etc.) into a single consistent format that enables records to be rapidly stored and accessed.

- Deeply granular retention: keep unsummarized details for months, enabling ad hoc answers to unanticipated questions.

- Drillable visibility: unlimited flexibility in grouping, filtering, and pivoting the data.

- Network-specific interface: controls and displays that present actionable insights for network operators who aren’t programmers or data analysts.

- Fast (<10 sec) queries: answers in real operational time frames, so users won’t ignore the data and go back to bad old habits.

- Anomaly detection: notify users of any specified set of traffic conditions, enabling rapid response.

- API access: integration via open APIs to allow access to stored data by third-party systems for operational and business functions.
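A toy sketch may help show how a few of these capabilities fit together: a unified record, flexible grouping and filtering, and a simple threshold alert. Everything here is an illustrative assumption rather than any real platform's schema or API: the `FlowRecord` fields, the `group_bytes` and `over_threshold` helpers, and the sample data (which uses documentation-reserved IP addresses and AS numbers) are all hypothetical. A production system would do this over billions of records in a distributed datastore, not an in-memory list.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    """One unified record: flow fields plus enrichment (hypothetical schema)."""
    src_ip: str
    dst_ip: str
    byte_count: int
    dst_asn: int       # would be derived from BGP data
    dst_country: str   # would be derived from geolocation
    tag: str           # custom tag, e.g. an application name


def group_bytes(records, key_fields, where=lambda r: True):
    """Sum byte_count grouped by any combination of fields, with an optional filter."""
    totals = defaultdict(int)
    for record in records:
        if where(record):
            key = tuple(getattr(record, field) for field in key_fields)
            totals[key] += record.byte_count
    return dict(totals)


def over_threshold(totals, limit_bytes):
    """Return the group keys whose total exceeds a specified limit."""
    return sorted(key for key, total in totals.items() if total > limit_bytes)


# Sample data (made up for illustration).
records = [
    FlowRecord("10.0.0.1", "203.0.113.5", 4_000, 64500, "US", "web"),
    FlowRecord("10.0.0.2", "203.0.113.5", 6_000, 64500, "US", "web"),
    FlowRecord("10.0.0.1", "198.51.100.7", 1_500, 64501, "DE", "dns"),
]

# Pivot the same unsummarized records two different ways.
by_asn = group_bytes(records, ["dst_asn"])
web_by_country = group_bytes(
    records, ["dst_country"], where=lambda r: r.tag == "web"
)

# Flag any destination AS that received more than 5,000 bytes.
alerts = over_threshold(by_asn, limit_bytes=5_000)

print(by_asn)
print(web_by_country)
print(alerts)
```

Because the raw records are kept rather than pre-summarized, the same data can be regrouped by any field after the fact, which is the point of granular retention and drillable visibility.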

Of course, just opening one’s mind to the dream isn’t the same as having the solution. How do you get your hands on a platform offering the capabilities described above? You could try to build it yourself with open source tools, but you’ll soon find that path leads to daunting challenges. You’ll need a lot of specialized skills on your team: network experts, systems engineers who can build distributed systems, low-level programmers who know how to deal with network protocols, and site reliability engineers (SREs) to build and maintain the infrastructure and software implementation. Even for organizations that have all those resources, it may not be worthwhile to devote them to this particular issue. At this point in the movie, you, as the hero, are truly facing a deeper challenge.

Act 3: Big Data SaaS to the Rescue