Kentik Blog: Network Engineering

AI in network operations is more than chatbots and agents. LLMs make AI easier to use, but the real value comes from the underlying system of telemetry, data pipelines, analytics, ML models, domain knowledge, and workflows that help engineers reason, predict, and act. When designed thoughtfully, AI doesn’t replace engineers. Instead, it augments their expertise and reduces cognitive load across complex network operations.

While AI offers powerful benefits for network operations, building an in-house AI solution presents major challenges, particularly around complex data engineering, staffing specialized roles, and maintaining models over time. The effort required to handle real-time telemetry, retrain models, and manage evolving environments is often too great for most IT teams. For enterprise networks, partnering with a vendor that specializes in AI and network operations is typically a more efficient, scalable, and sustainable approach.

AI can be a transformative tool in network operations — but only when it’s tied to clear, measurable outcomes. Rather than chasing hype, IT and NetOps teams should focus on solving specific operational challenges like reducing MTTR, cutting costs, and stabilizing infrastructure. AI has real potential when strategically applied, and when aligned with business goals, it becomes a powerful ally in modern network operations.

AWS VPC Flow Logs and Azure NSG flow logs offer network traffic visibility with different scopes and formats, but both are essential for multi-cloud network management and security. Unified network observability solutions analyze both in one place to provide comprehensive insights across clouds.

The 2024 EMA Network Megatrends report surveyed hundreds of IT professionals about their approach to managing, monitoring, and troubleshooting their networks. In this post, we examine the report’s findings to learn the business and technology trends shaping network operations strategy.

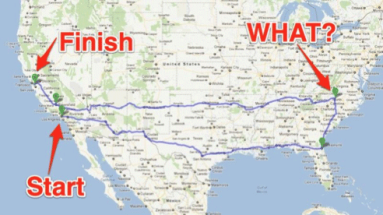

Modern networking relies on the public internet, which heavily uses flow-based load balancing to optimize network traffic. However, the most common network tracing tool known to engineers, traceroute, can’t accurately map load-balanced topologies. Paris traceroute was developed to solve the problem of inferring a load-balanced topology, especially over the public internet, and help engineers troubleshoot network activity over complex networks we don’t own or manage.
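To make the load-balancing problem concrete, here is a minimal sketch of why probe design matters. It assumes a hypothetical per-flow load balancer that hashes the 5-tuple to choose a next hop; the `pick_next_hop` function, router names, and addresses are illustrative assumptions, not any vendor's implementation. Classic UDP traceroute changes the destination port on every probe, so each probe can hash to a different path, while Paris traceroute keeps the flow identifier constant.

```python
import hashlib

# Hypothetical equal-cost next hops behind a load balancer (illustrative names).
NEXT_HOPS = ["router-a", "router-b", "router-c", "router-d"]


def pick_next_hop(flow, next_hops):
    """Assumed per-flow load balancer: hash the 5-tuple and pick one path."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return next_hops[int.from_bytes(digest[:4], "big") % len(next_hops)]


def classic_probes(count):
    # Classic UDP traceroute increments the destination port per probe,
    # so every probe belongs to a different flow.
    return [("10.0.0.1", "192.0.2.10", 17, 40000, 33434 + i) for i in range(count)]


def paris_probes(count):
    # Paris traceroute holds the flow identifier constant across probes.
    return [("10.0.0.1", "192.0.2.10", 17, 40000, 33434)] * count


classic_paths = {pick_next_hop(p, NEXT_HOPS) for p in classic_probes(16)}
paris_paths = {pick_next_hop(p, NEXT_HOPS) for p in paris_probes(16)}

print("classic traceroute touched:", sorted(classic_paths))
print("Paris traceroute touched:", sorted(paris_paths))
```

Because the Paris-style probes share one flow identifier, they always land on a single next hop here, whereas the classic probes may be spread across several, which is what makes the resulting hop list misleading.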

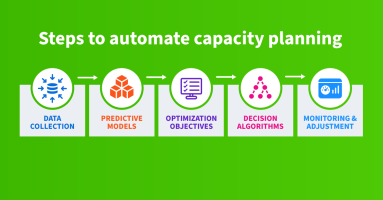

By automating capacity planning for IP networks, we can achieve cost reduction, enhanced accuracy, and better scalability. This process requires us to collect data, build predictive models, define optimization objectives, design decision algorithms, and carry out consistent monitoring and adjustment. However, the initial investment is large and the result will still require human oversight.

In this post, we discuss the crucial differences between resilience and redundancy in networking. Learn how to optimize your network for seamless performance.

True observability requires visibility into both the application and network layers. For companies reliant on multi-zonal cloud networks, the days of NetOps existing as a team siloed away from application developers are over.

eBPF is a powerful technical framework to see every interaction between an application and the Linux kernel it relies on. eBPF allows us to get granular visibility into network activity, resource utilization, file access, and much more. It has become a primary method for observability of our applications on premises and in the cloud. In this post, we’ll explore in-depth how eBPF works, its use cases, and how we can use it today specifically for container monitoring.

Resiliency is a network’s ability to recover and maintain its performance despite failures or disruptions, and redundancy is the duplication of critical components or functions to ensure continuous operation in case of failure. But how do the two concepts interact? Is doubling up on capacity and devices always needed to keep the service levels up?

What does it mean to build a successful networking team? Is it hiring a team of CCIEs? Is it making sure candidates know public cloud inside and out? Or maybe it’s making sure candidates have only the most sophisticated project experience on their resume. In this post, we’ll discuss what a successful networking team looks like and what characteristics we should look for in candidates.

The advent of various network abstractions has meant many day-to-day networking tasks normally done by network engineers are now done by other teams. What’s left for many networking experts is the remaining high-level design and troubleshooting. In this post, Phil Gervasi unpacks why this change is happening and what it means for network engineers.

A packet capture is a great option for troubleshooting network issues and performing digital forensics, but is it a good option for always-on visibility considering flow data gives us the vast majority of the information we need for normal network operations?

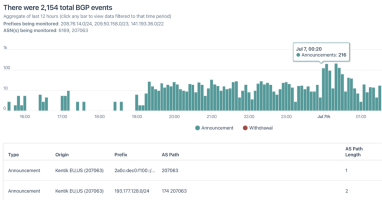

BGP is a critical network protocol, and yet, BGP is often not monitored. BGP issues that go unchecked can turn into major problems. This post explains how Kentik can help you to easily monitor BGP and catch critical issues quickly.

Last week I had the honor to participate in the PTC 2021 conference. Held in Hawaii every January, PTC’s annual conference is the Pacific Rim’s premier telecommunications event. Although this year’s conference was all virtual (no boondoggles to Honolulu!), it was no less important as the theme this year was New Realities. In the following blog post, I summarize what I presented in my PTC panel entitled Strategies to Meet Network Needs.

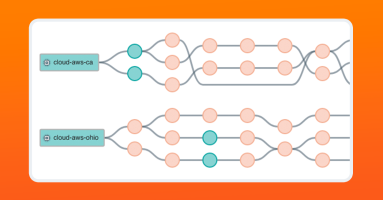

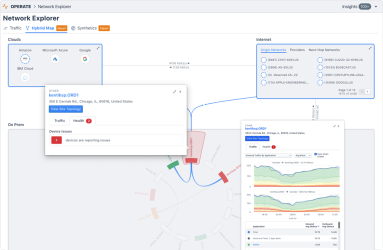

Kentik’s Hybrid Map provides the industry’s first solution to visualize and manage interactions across public/private clouds, on-prem networks, SaaS apps, and other critical workloads as a means of delivering compelling, actionable intelligence. Product expert Dan Rohan has an overview.

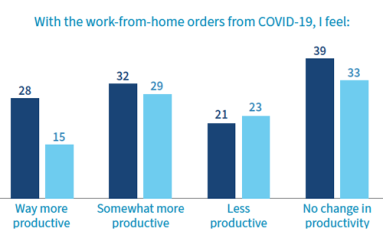

Today we published a report on “The New Normals of Network Operations in 2020.” Based on a survey of 220 networking professionals, our report aims to better understand the challenges this community faces personally and professionally as more companies and individuals take their worlds almost entirely online.

If you missed Akamai, Uber and Verizon Media talking about networking during COVID-19, read our recap of Kentik’s recent virtual panel. In this post, we share details from the conversation, including observed spikes in traffic growth, network capacity challenges, BC/DR plans, the internet infrastructure supply chain, and more.

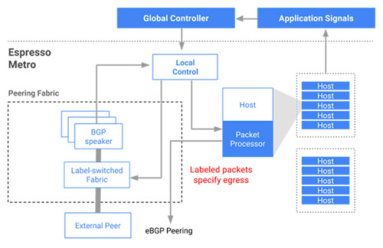

Google is investing and innovating in SD-WAN. In this post, Kentik CTO Jonah Kowall highlights what Google has been up to and how Google’s SD-WAN work can apply to the typical organization.

Kentik recently hosted a virtual panel with network leaders from Dropbox, Equinix, Netflix and Zoom and discussed how they are scaling to accommodate the unprecedented growth in network traffic during COVID-19. In this post, we highlight takeaways from the event.

When more of the workforce shifts to working remotely, it puts new and different strains on the infrastructure across different parts of the network. In this post, we discuss strategies for managing surges in network traffic coming from remote employees and share information on how Kentik can help.

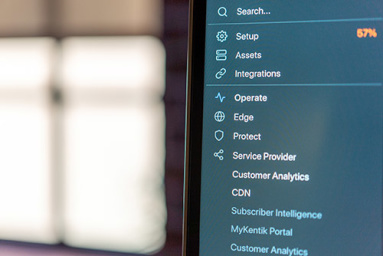

The most complex point of today’s networks is the edge, where there are more protocols, diverse traffic, and security exposure. The network edge is also a place where Kentik provides high value. In this post, we discuss how to implement Kentik in your data center.

Gartner recently published its 2019 version of the Market Guide for AIOps Platforms. In this post, we examine our understanding of the report and discuss how Kentik’s domain-centric AIOps platform is built from the ground up for network professionals.

“My advice to anyone who is considering Kentik is to get into it. Get a trial, import your data, and start playing with it,” says Rick Carter, head of networks at Superloop and Kentik user. In this vlog, Rick shares how Kentik helps Superloop bring connectivity to APAC by uncovering important network and business insights.

Packet is focused on automating single-tenant bare metal compute infrastructure. On a mission to enable the world’s companies with a competitive advantage of infrastructure, Packet turned to Kentik for network visibility and insights.

Kentik CTO Jonah Kowall highlights challenges and opportunities in network automation and describes how Kentik is leading the way in providing next-generation solutions for automation, notification, advanced API integrations with telemetry, and more. “Every organization has an automation goal, and it’s no doubt that network automation is not only essential to avoid costly outages, but also helps organizations scale without putting people in the work path… The problem is that every organization has a storied history of automation tools, meaning we already have at least a dozen of them in our organizations across various silos and stacks, some of which are commercial and some are open source.”

Learn how enterprise video communications leader, Zoom, uses Kentik for network visibility, performance, peering analytics and improved customer support. Zoom’s Alex Guerrero, senior manager of SaaS operations, and Mike Leis, senior network engineer, share how they use Kentik to help Zoom deliver “frictionless meetings.”

At one point, data was called “the new oil.” While that’s certainly an apt description for the insights we can extract from data, most organizations today are finding that new data repositories and “data lakes” often don’t provide the expected benefits due to the analytics challenge. CTO Jonah Kowall explains how advanced data enrichment techniques, leveraging AIOps technologies, can make the promise of data analysis a reality.

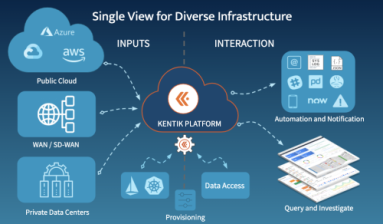

CTO Jonah Kowall introduces Kentik’s AIOps solution for network management, explaining the need for evolution and innovation in the network performance monitoring and diagnostics market and providing an overview of the components of Kentik’s revolutionary platform.

Kentik’s Jim Meehan and Crystal Li report on what they learned from speaking with enterprise network professionals at Cisco Live 2019, and what those attendees expect from a truly modern network analytics solution.

The most commonly used network monitoring tools in enterprises were created specifically to handle only the most basic faults with traditional network devices. CTO Jonah Kowall explains why these tools don’t scale to meet today’s network visibility needs, why more enterprises are moving from faults & packets to flow, and how Kentik can help.

Learn how to maintain multi-cloud visibility within hybrid and multi-cloud architectures, as well as the requirements for modern cloud monitoring tools.

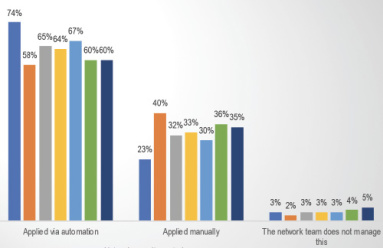

As enterprises integrate their networks with their public cloud strategies, several best practices for network teams are emerging. In this guest blog post, Enterprise Management Associates analyst Shamus McGillicuddy dives into his recent cloud networking research to discuss several strategies that can improve the chances of a successful cloud networking initiative.

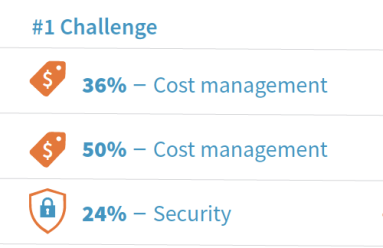

In this post, we provide an overview of a new research report from EMA analyst Shamus McGillicuddy. The report is based on the responses of 250 enterprise IT networking professionals who note challenges and key technology requirements for hybrid and multi-cloud networking.

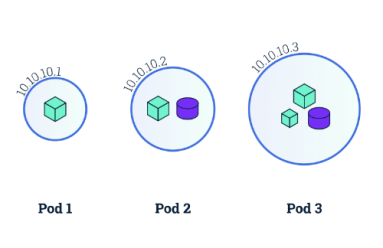

In this blog post, we provide a starting point for understanding the networking model behind Kubernetes and how to make Kubernetes networking simpler and more efficient.

Broadband providers must manage high infrastructure costs on a price-per-bit basis. Visibility into their network performance and security is critical. In this post, we dig into how that led Viasat, a global provider of high-speed satellite broadband services and secure networking systems, to tap Kentik for help.

Building a cloud application is like building a house. If you don’t at least acknowledge the industry’s best practices, it may all come tumbling down. Here we look at AWS’ Well-Architected Framework as a good starting point for building effective cloud applications and outline why having the right tools in place can make all the difference.

Today we released a new report: “AWS Cloud Adoption, Visibility & Management.” The report compiles an analysis based on a survey of 310 executive and technical-level attendees at the recent AWS user conference. Simply put, we found: It’s a multi-cloud, cost-containment world.

In a new report, 2019 Trends in Cloud Transformation, 451 Research analysts dig into seven trends happening amidst the move to the cloud, as well as recommendations, and clear winners and losers for each of the trends. In this post, we provide an overview and a licensed copy of the report for you.

There are five network-related cloud deployment mistakes that you might not be aware of, but that can negate the cloud benefits you’re hoping to achieve. In this post, we provide an overview of each mistake and a guide for avoiding them all.

Music streaming service Pandora recently announced its migration to Google Cloud Platform (GCP). For the NetOps and SecOps teams behind the migration, we know cloud visibility is now more important than ever. That’s why we caught up with Pandora’s James Kelty to tell us how the company plans to maintain visibility across its infrastructure, including GCP, with help from Kentik.

At the recent AWS re:Invent, we heard many attendees talking about the push for cloud-native to foster innovation and speed up development. In this post, we take a deeper dive on what it means to be cloud-native, as well as the challenges and how to overcome them.

Cloud providers take away the huge overhead of building, maintaining, and upgrading physical infrastructure. However, many system operators, including NetOps, SREs, and SecOps teams, are facing a huge visibility challenge. Here we talk about how VPC flow logs can help.

To some, moving to the cloud is like a trick. But to others, it’s a real treat. So in the spirit of Halloween, here’s a blog post to break down two of the spookiest (or at least the most common) cloud myths we’ve heard of late.

In this post we look at the difference between NetFlow and sFlow and how network operators can support all of the flow protocols that their networks generate.

With increased business reliance on internet connectivity, the network world has become, and will continue to become, increasingly complex. In this post, we dig into the key findings from our new “State of Network Management in 2018” report. We also discuss why we’re just in the early stages of how our industry will need to transform.

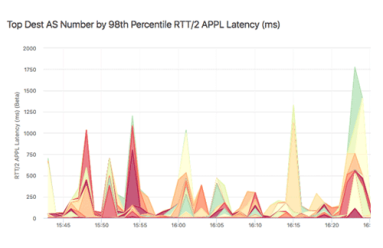

Managing quality of service and service-level agreements (SLAs) is becoming more complex for service providers. In this post, we look at how and why enterprise cloud services and application usage are driving service providers to rethink service assurance metrics. We also discuss why network-based analytics is critical to satisfying service assurance needs.

NetFlow offers a great way to preserve highly useful traffic analysis and troubleshooting details without needing to perform full packet capture. In this post, we look at how NetFlow monitoring solutions quickly evolved as commercialized product offerings and discuss how cloud and big data improve NetFlow analysis.

Digital transformation is not for the faint of heart. In this post, ACG Analyst Stephen Collins discusses why it’s critical for ITOps, NetOps, SecOps and DevOps teams to make sure they have the right stuff and are properly equipped for the network visibility challenges they face.

Silos in enterprise IT organizations can inhibit cross-functional synergies, leading to inefficiencies, higher costs and unacceptable delays for detecting and repairing problems. In this post, ACG analyst Stephen Collins examines how IT managers can start planning now as the first step in moving from silos to synergy.

In this post, we look at the best practices for an effective capacity planning solution that ensures optimal network performance and visibility.

Network performance and network security are increasingly becoming two sides of the same coin. Consequently, enterprise network operations teams are stepping up collaboration with their counterparts in the security group. In this post, EMA analyst Shamus McGillicuddy outlines these efforts based on his latest research.

5G is marching towards commercialization. In this post, we look at the benefits and discuss why network monitoring for performance and security is even more crucial to the operation of hybrid enterprise networks that incorporate 5G network segments.

At the ONUG Spring 2018 event, ACG analyst Stephen Collins moderated a panel discussion on re-tooling IT operations with machine learning and AI. The panelists provided a view “from the trenches.” In this post, Collins shares insights into how panelists’ organizations are applying ML and AI today, each in different operational domains, but with a common theme of overcoming the challenge of managing operations at scale.

Modern enterprise networks are becoming more dynamic and complex, which poses significant challenges for today’s IT leaders. In this post, data center and IT service provider phoenixNAP discusses how Kentik Detect helps overcome network visibility challenges.

Applying AI and machine learning to network infrastructure monitoring allows for closed-loop automation. In this post, ACG analyst Stephen Collins discusses the benefits, including how real-time insights derived from streaming telemetry data are fed back into the orchestration stack to automatically reconfigure the network without operator intervention.

Predictive analytics has improved over the past few years, benefiting from advances in AI and related fields. In this post, we look at how predictive analytics can be used to help network operations. We also dig into the limitations and how the accuracy of the predictions depends heavily on the quality of the data collected.

Forward-thinking IT managers are already embracing big data-powered SaaS solutions for application and performance monitoring. If hybrid multi-cloud and hyperscale application infrastructure are in your future, ACG Analyst Stephen Collins’ advice for performance monitoring is “go big or go home.”

While we’re still in the opening phase of the hybrid multi-cloud chess game, ACG analyst Stephen Collins takes a look at what’s ahead. In this post, he digs into what enterprises embracing the cloud can expect in the complex endgame posing many new technical challenges for IT managers.

While data networks are pervasive in every modern digital organization, there are few other industries that rely on them more than the Financial Services Industry. In this blog post, we dig into the challenges and highlight the opportunities for network visibility in FinServ.

Fixing a persistent Internet underlay problem might be as simple as using a higher bandwidth connection or as complex as choosing the right peering and transit networks for specific applications and destination cloud services. In this blog, ACG analyst Stephen Collins advises that to make the best-informed decision about how to proceed, IT managers need to be equipped with tools that enable them to fully diagnose the nature of Internet underlay connectivity problems.

Traditional monitoring tools for managing application performance in private networks are not well-suited to ensuring the performance, reliability and security of SaaS applications. In this post, ACG analyst Stephen Collins makes the case for why enterprise IT managers need to employ a new generation of network visibility and big data analytics tools designed for the vast scale of the Internet.

College, university and K-12 networking and IT teams who manage and monitor campus networks are faced with big challenges today. In this post, we take a deeper look at the challenges and provide requirements for a cost-effective network monitoring solution.

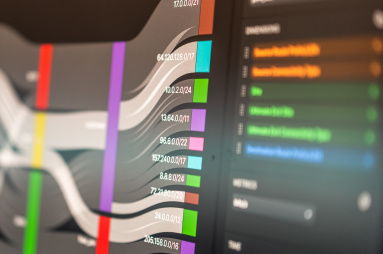

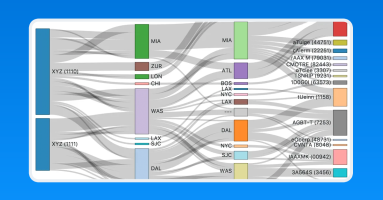

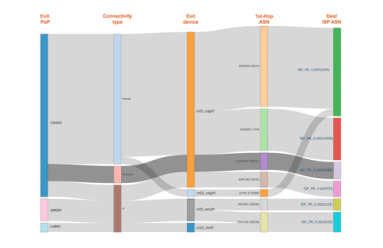

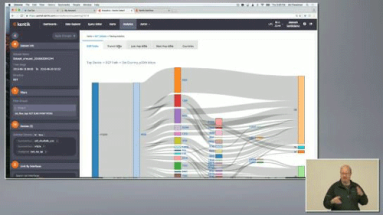

Sankey diagrams were first invented by Captain Matthew Sankey in 1898. Since then they have been adopted in a number of industries such as energy and manufacturing. In this post, we will take a look at how they can be used to represent the relationships within network data.

Kentik Detect now incorporates IP reputation data from Spamhaus, enabling users to identify infected or compromised hosts. In this post we look at the types of threat feed information we use from Spamhaus, and then dive into how to use that information to reveal problem hosts on an ad hoc basis, to generate scheduled reports about infections, and to set up alerting when your network is found to have carried compromised traffic.

While defining digital transformation strategies is a valuable exercise for C-level executives, CIOs and IT managers also need to adopt a pragmatic and more tactical approach to “going digital.” That starts with acquiring tools and building the new skills needed to ensure business success and profitability in the face of digital disruption. In this post, ACG Principal Analyst Stephen Collins looks at how to manage it all.

A new EMA report on network analytics is full of interesting takeaways, from reasons for deployment to use cases after analytics are up and running. In this post, we look specifically at the findings around adoption to see why and how organizations are leveraging network analytics.

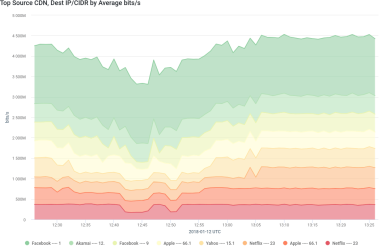

CDNs have been around for years, but they’ve gained new importance with the rise of video streaming services like Netflix and Hulu. As traffic from those sites soars, CDNs introduce new challenges for network operations teams at both service providers and enterprises. Kentik Detect’s new CDN Attribution makes identifying and tracking CDN traffic a whole lot easier. In this blog, we provide examples of how companies can implement this functionality.

As IoT adoption continues, enterprises are finding a massive increase in the number of devices and the data volumes generated by these devices on their networks. Here’s how enterprises can use network monitoring tools for enhanced visibility into complex networks.

From telcos, to financial services, to tech companies, we asked 30 of our peers one question: What are your 2018 networking predictions? Yes, it’s a broad question. But respondents (hailing from network, data center, and security operations teams) surfaced five main predictions for the year ahead.

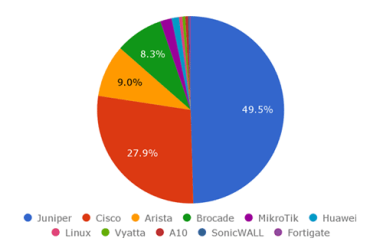

What brands of network devices are Kentik customers using? Where does their international traffic come from and go to? What’s the current norm for packet sizes and internet traffic protocols? Drawing on Kentik Detect’s ability to see and analyze network traffic, this post shares some intriguing factoids, and it sheds light on some of the insights about your own network traffic that await you as a Kentik customer.

Advances in open source software packages for big data have made Do-It-Yourself (DIY) approaches to Network Flow Analyzers attractive. However, careful analysis of all the pros and cons needs to be completed before jumping in. In this post, we look at the hidden pitfalls and costs of the DIY approach.

Media reports tell us that Cyber Monday marked a single-day record for revenue from online shopping. We can assume that those sales correlated with a general spike in network utilization, but from a management and planning perspective we might want to go deeper, exploring the when and where of traffic patterns to specific sites. In this post we use Kentik Detect to see what can be learned from a deeper dive into holiday traffic.

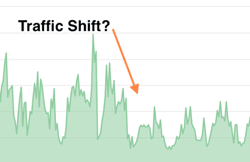

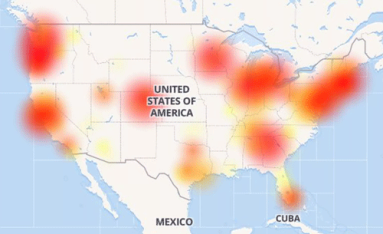

As last week’s misconfigured BGP routes from backbone provider Level 3 caused Internet outages across the nation, the monitoring and troubleshooting capabilities of Kentik Detect enabled us to identify the most-affected providers and assess the performance impact on our own customers. In this post we show how we did it and how our new ability to alert on performance metrics will make it even easier for Kentik customers to respond rapidly to similar incidents in the future.

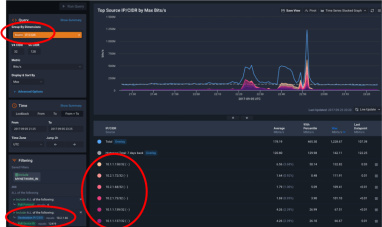

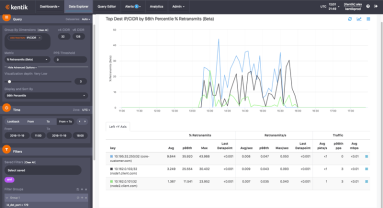

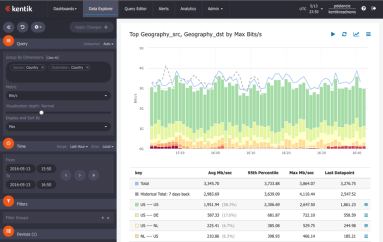

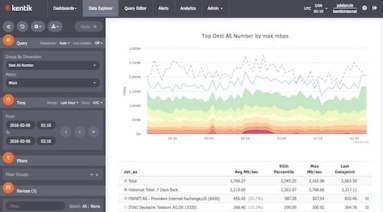

Managing network capacity can be a tough job for Network Operations teams at Service Providers and Enterprise IT. Most legacy tools can’t show traffic traversing the network and trend that data over time. In this post, we’ll look at how Kentik Detect changes all that with new Dashboards, Analytics, and Alerts that enable fast, easy planning for capacity changes and upgrades.

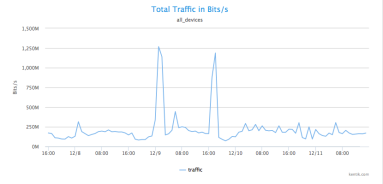

At Kentik, we built Kentik Detect, our production SaaS platform, on a microservices architecture. We also use Kentik for monitoring our own infrastructure. Drawing on a variety of real-life incidents as examples, this post looks at how the alerts we get — and the details that we’re able to see when we drill down deep into the data — enable us to rapidly troubleshoot and resolve network-related issues.

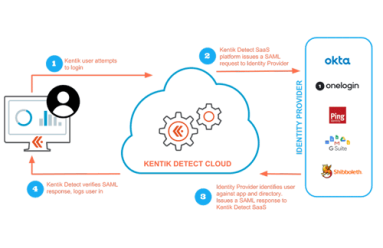

As security threats grow more ominous, security procedures grow more onerous, which can be a drag on productivity. In this post we look at how Kentik’s single sign-on (SSO) implementation enables users to maintain security without constantly entering authentication credentials. Check out this walk-through of the SSO setup and login process to enable your users to access Kentik Detect with the same SSO services they use for other applications.

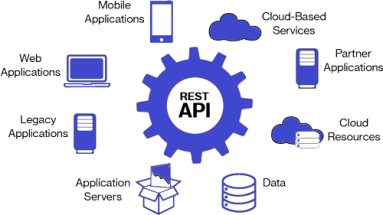

In today’s world of heterogeneous environments and distributed systems, APIs drive synergistic innovation, creating a whole that’s more powerful than the parts. Even in networking, where the CLI rules, APIs are now indispensable. At Kentik, APIs have been integral to our platform from the outset. In this post we look at how partners and customers are expanding the capabilities of their systems by combining Kentik with external tools.

In our latest post on Interface Classification, we look beyond what it is and how it works to why it’s useful, illustrated with a few use cases that demonstrate its practical value. By segmenting traffic based on interface characteristics (Connectivity Type and Network Boundary), you’ll be able to easily see and export valuable intelligence related to the cost and ROI of carrying a given customer’s traffic.

With much of the country looking skyward during the solar eclipse, you might wonder how much of an effect there was on network traffic. Was there a drastic drop as millions of watchers were briefly uncoupled from their screens? Or was that offset by a massive jump in live streaming and photo uploads? In this post we report on what we found using forensic analytics in Kentik Detect to slice traffic based on how and where usage patterns changed during the event.

The Domain Name System (DNS) is often overlooked, but it’s one of the most critical pieces of Internet infrastructure. As driven home by last October’s crippling DDoS attack against Dyn, the web can’t function unless DNS resolves hostnames to their underlying IP addresses. In this post we look at how combining Kentik’s software host agent with Dashboards in Kentik gives you the tools you need to ensure DNS availability and performance.

Kentik addresses the day-to-day challenges of network operations, but our unique big network data platform also generates valuable business insights. A great example of this duality is our new Interface Classification feature, which streamlines an otherwise-tedious technical task while also giving sales teams a real competitive advantage. In this post we look at what it can do, how we’ve implemented it, and how to get started.

Major cyber-security incidents keep on coming, the latest being the theft from HBO of 1.5 terabytes of private data. We often frame Kentik Detect’s advanced anomaly detection and alerting system in terms of defense against DDoS attacks, but large-scale transfer of data from private servers to unfamiliar destinations also creates anomalous traffic. In this post we look at several ways to configure our alerting system to see breaches like the attack on HBO.

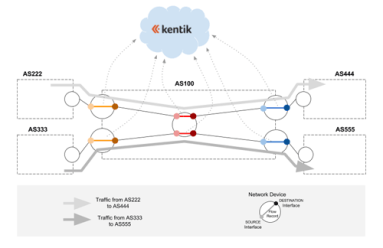

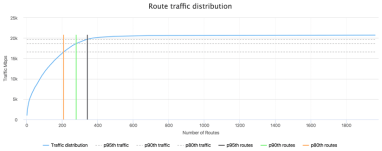

With BGP and NetFlow correlated into a unified datastore, Kentik Detect’s advanced analytics provide valuable insights for both engineering and sales. In this post we look into a fairly recent addition to Kentik Detect, Route Traffic Analytics. Especially useful for capacity planners and peering coordinators, RTA makes it easy to see how many unique routes are represented in a given percent of your traffic, which indicates the route capacity needed in your edge routers.
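The idea of counting how many unique routes account for a given percent of traffic can be illustrated with a short sketch. This is not Kentik's implementation; the function name `routes_for_traffic_share`, the prefixes, and the byte counts below are made-up assumptions for illustration only. It sorts routes by traffic volume and counts how many of the busiest ones are needed to reach the requested share.

```python
def routes_for_traffic_share(bytes_by_route, share_percent):
    """Count the busiest routes needed to carry at least share_percent of total bytes."""
    total = sum(bytes_by_route.values())
    if total == 0:
        return 0
    running = 0
    volumes = sorted(bytes_by_route.values(), reverse=True)
    for count, volume in enumerate(volumes, start=1):
        running += volume
        # Integer comparison avoids floating-point rounding at the threshold.
        if running * 100 >= share_percent * total:
            return count
    return len(volumes)


# Hypothetical per-route byte counts (documentation prefixes, invented volumes).
sample = {
    "203.0.113.0/24": 500,
    "198.51.100.0/24": 300,
    "192.0.2.0/24": 150,
    "100.64.0.0/10": 50,
}

for pct in (50, 80, 95, 100):
    print(pct, "% of traffic uses", routes_for_traffic_share(sample, pct), "routes")
```

In this toy data set, two routes cover 80 percent of traffic while all four are needed for 100 percent, which is the kind of gap that informs how much route capacity an edge router actually needs.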

Can BGP routing tables provide actionable insights for both engineering and sales? Kentik Detect correlates BGP with flow records like NetFlow to deliver advanced analytics that unlock valuable knowledge hiding in your routes. In this post, we look at our Peering Analytics feature, which lets you see whether your traffic is taking the most cost-effective and performant routes to get where it’s going, including who you should be peering with to reduce transit costs.

Among Kentik Detect’s unique features is the fact that it’s a high-performance network visibility solution that’s available as a SaaS. Naturally, data security in the cloud can be an initial concern for many customers, but most end up opting for SaaS deployment. In this post we look at some of the top factors to consider in making that decision, and why most customers conclude that there’s no risk to taking advantage of Kentik Detect as a SaaS.

What do summer blockbusters have to do with network operations? As utilization explodes and legacy tools stagnate, keeping a network secure and performant can feel like a struggle against evil forces. In this post we look at network operations as a hero’s journey, complete with the traditional three acts that shape most gripping tales. Can networks be rescued from the dangers and drudgery of archaic tools? Bring popcorn…

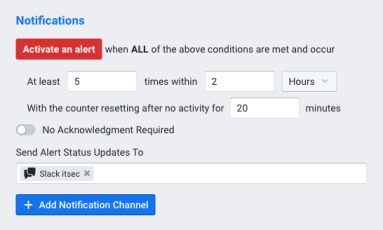

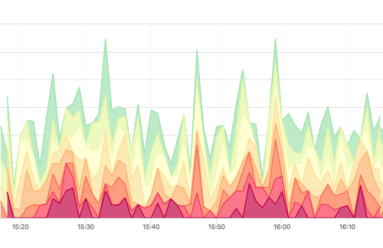

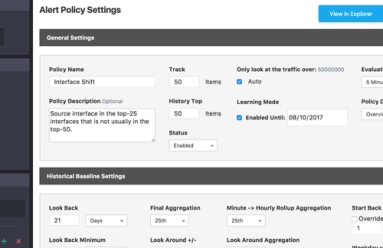

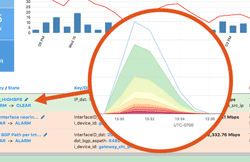

Operating a network means staying on top of constant changes in traffic patterns. With legacy network monitoring tools, you often can’t see these changes as they happen. Instead you need a comprehensive visibility solution that includes real-time anomaly detection. Kentik Detect fits the bill with a policy-based alerting system that continuously evaluates incoming flow data. This post provides an overview of system features and configuration.

As one of 2017’s hottest networking technologies, SD-WAN is generating a lot of buzz, including at last week’s Cisco Live. But as enterprises rely on SD-WAN to enable Internet-connected services — thereby bypassing Carrier MPLS charges — they face unfamiliar challenges related to the security and availability of remote sites. In this post we take a look at these new threats and how Kentik Detect helps protect against and respond to attacks.

Obsolete architectures for NetFlow analytics may seem merely quaint and old-fashioned, but the harm they can do to your network is no fairy tale. Without real-time, at-scale access to unsummarized traffic data, you can’t fully protect your network from hazards like attacks, performance issues, and excess transit costs. In this post we compare three database approaches to assess the impact of system architecture on network visibility.

NetFlow data has a lot to tell you about the traffic across your network, but it may require significant resources to collect. That’s why many network managers choose to collect flow data on a sampled subset of total traffic. In this post we look at some testing we did here at Kentik to determine if sampling prevents us from seeing low-volume traffic flows in the context of high overall traffic volume.
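
As a rough illustration of the sampling question, the sketch below estimates how likely a low-volume flow is to appear at all in sampled data. It assumes independent random 1-in-N packet sampling, which is a simplification and not a description of the testing referenced above. The function names `detection_probability` and `estimated_packets` are hypothetical, chosen only for this example.

```python
import math


def detection_probability(flow_packets: int, sampling_rate: int) -> float:
    """Chance that at least one packet of a flow is sampled.

    Assumes each packet is independently sampled with probability
    1/sampling_rate (an idealized model of 1-in-N sampling).
    """
    if flow_packets < 0 or sampling_rate < 1:
        raise ValueError("flow_packets must be >= 0 and sampling_rate >= 1")
    if flow_packets == 0:
        return 0.0
    if sampling_rate == 1:
        # Unsampled collection: every packet is seen.
        return 1.0
    p = 1.0 / sampling_rate
    # 1 - (1 - p)^n, computed with log1p/expm1 for numerical stability.
    return -math.expm1(flow_packets * math.log1p(-p))


def estimated_packets(sampled_count: int, sampling_rate: int) -> int:
    """Scale a sampled packet count back up to an estimate of the true count."""
    return sampled_count * sampling_rate


if __name__ == "__main__":
    # Compare small and large flows at a hypothetical 1-in-1000 sampling rate.
    for packets in (10, 1_000, 10_000, 100_000):
        prob = detection_probability(packets, 1000)
        print(f"{packets:>7} packets -> P(seen at least once) = {prob:.4f}")
```

Under this model, the chance of seeing a flow depends on its own packet count and the sampling rate, not on how much other traffic shares the link. That is why a modest flow can remain visible even when overall volume is high.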

Telecom and mobile operators are clear on both the need and the opportunity to apply big data for advanced operational analytics. But when it comes to being data driven, many telecoms are still a work in progress. In this post we look at the state of this transformation, and how cloud-aware big data solutions enable telecoms to escape the constraints of legacy appliance-based network analytics.

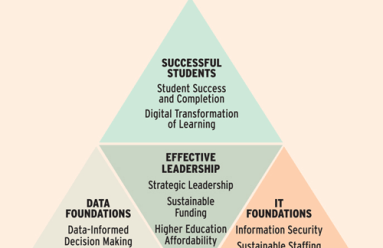

In higher education, embracing the cloud enhances your ability to achieve successful outcomes for students, researchers, and the organization as a whole. But just as in business, this digital transformation can succeed only if it’s anchored by modern network visibility. In this post we look at the network as more than mere plumbing, identifying how big data network intelligence helps realize high-priority educational goals.

Stuck with piles of siloed tools, today’s network teams struggle to piece together both the big picture and the actionable insights buried in inconsistent UIs and fragmented datasets. The result is subpar performance for both networks and network teams. In this post we look at the true cost of legacy tools, and how Kentik Detect frees you from this obsolete paradigm with a unified, scalable, real-time solution built on the power of big data.

Large or small, all ISPs share the imperative to stay competitive and profitable. To do that in today’s environment, they need traffic visibility they can’t get from legacy network tools. Taking their lead from the world’s most-successful web-scale enterprises, ISPs have much to gain from big data network and business intelligence, so in this post we look at ISP use cases and how Kentik Detect’s SaaS model puts key capabilities within easy reach.

SDN holds lots of promise, but its practical applications have so far been limited to discrete use cases like customer provisioning or service scaling. The long-term goal is true dynamic control, but that requires comprehensive traffic intelligence in real time at full scale. As our customers are discovering, Kentik Detect’s traffic visibility, anomaly detection, and extensive APIs make it an ideal source for actionable traffic data that can drive network automation.

Without package tracking, FedEx wouldn’t know how directly a package got to its destination or how to improve service and efficiency. 25 years into the commercial Internet, most service providers find themselves in just that situation, with no easy way to tell where an individual customer’s traffic exited the network. With Kentik Detect’s new Ultimate Exit feature, those days are over. Learn how Kentik’s per-customer traffic breakdown gives providers a competitive edge.

NPM appliances and difficult-to-scale enterprise software deployments were appropriate technology for their day. But 15 years later, we’re well into the era of the cloud, and legacy NPM approaches are far from the best available option. In this post we look at why it’s high time to sunset the horse-and-buggy NPM systems of yesteryear and instead take advantage of SaaS network traffic intelligence powered by big data.

Most of the testing and discussion of flow protocols over the years has been based on enterprise use cases and fairly low-bandwidth assumptions. In this post we take a fresh look, focusing instead on the real-world traffic volumes handled by operators of large-scale networks. How do NetFlow and other variants of stateful flow tracking compare with packet sampling approaches like sFlow? Read on…

It’s very costly to operate a large-scale Internet edge, making lower-end edge routers a subject of keen interest for service providers and Web enterprises alike. Such routers are comparatively short on FIB capacity, but depending on the routes needed to serve your customers that might not be an issue. How can you find out for sure? In this post, Alex Henthorn-Iwane, VP Product Marketing, explains how a new feature in Kentik Detect can show you the answer.

Not long ago network flow data was a secondary source of data for IT departments trying to better understand their network status, traffic, and utilization. Today it’s become a leading focus of analysis, yielding valuable insights in areas including network security, network optimization, and business processes. In this post, senior analyst Shamus McGillicuddy of EMA looks at the value and versatility of flow for network analytics.

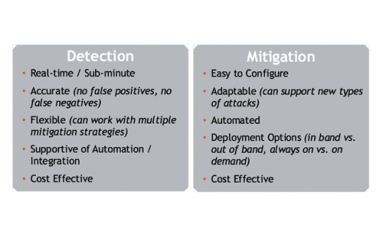

Today’s increased reliance on cloud and distributed application architectures means that denial of just a single critical dependency can shut down Web availability and revenue. In this post we look at what that means for large, complex enterprises. Do legacy tools protect sufficiently against new and different vulnerabilities? If not, what constitutes a modern approach to DDoS protection, and why is it so crucial to business resilience?

After presenting at the recent CIOArena conference in Atlanta, Kentik VP of Strategic Alliances Jim Frey came away with a number of insights about the adoption of digital business operations in the enterprise. In his first in a series of related posts, Jim looks at audience survey responses indicating how reliant enterprises — even those that aren’t digital natives or located in tech industry hotspots — have become on the Internet for core elements of their business.

Kentik is pleased to announce our membership in the Internet2® consortium, which operates a nationwide research and education (R&E) network and establishes best practices for R&E networking. Because Internet2 is a major source of innovation, our participation will enable us to grow our connection to the higher education networking community, to learn from member perspectives, and to support the advancement of applications and services for R&E networks.

DDoS attacks constitute a very significant and growing portion of the overall cybersecurity threat. In this post we recap highlights of a recent Webinar jointly presented by Kentik’s VP of Product Marketing, Alex Henthorn-Iwane, and Forrester Senior Analyst Joseph Blankenship. The Webinar focused on three areas: attack trends, the state of defense techniques, and key recommendations that organizations can implement to improve their protective posture.

As the architecture of digital business applications transitions toward the cloud, network teams are increasingly involved in assuring application performance across distributed infrastructure. Filling this new role effectively requires a deeper toolset than provided by APM alone, with both internal and external network-level visibility. In this post from EMA’s Shamus McGillicuddy, we look at how modern NPM solutions empower network managers to tackle these new challenges.

As IT technologies evolve toward greater reliance on the cloud, longstanding networking practitioners are adapting to a new environment. The changes are easier to implement in greenfield companies than in more established brownfield enterprises. In this third post of a three-part series, analyst Jim Metzler talks with Kentik’s Alex Henthorn-Iwane about how network management is impacted by the differences between the two situations.

Cisco’s late-January acquisition of AppDynamics confirms what was already evident from Kentik’s experience in 2016, which is that effective visibility is now recognized industry-wide as a critical requirement for success. AppDynamics provides APM, the full value of which can’t be realized without the modern NPM offered by Kentik Detect. In this post we look at how Kentik uniquely complements APM to provide a comprehensive visibility solution.

With end-of-life coming this summer for Peakflow 7.5, many Arbor Networks customers face another round of costly upgrades to older, soon-to-be-unsupported appliance hardware. In this post we look at how Arbor users pay coming and going to continue with appliance-based DDoS detection, and we consider whether that makes sense given the availability of a big data SaaS like Kentik Detect.

As cloud computing continues to gain ground, there’s a natural tension in IT between cloud advocates and those who prefer the status quo of in-house networking. In part two of his three-part series on this “culture war,” analyst Jim Metzler clarifies what is — and is not — involved in the transition to the cloud, and how the adoption of cloud computing impacts the way that network organizations should think about the management tools they use.

Unless you’re a Tier 1 provider, IP transit is a significant cost of providing Internet service or operating a digital business. To minimize the pain, your network monitoring tools would ideally show you historical route utilization and notify you before the traffic volume on any path triggers added fees. In this post we look at how Kentik Detect is able to do just that, and we show how our Data Explorer is used to drill down on the details of route utilization.

Kentik is honored to be the sole network monitoring provider named by Forrester Research as a “Breakout Vendor” in a December 2016 report on the Virtual Network Infrastructure (VNI) space. The report asserts that I&O leaders can dramatically improve customer experience by choosing cloud networking solutions, and cites Kentik Detect as one of four groundbreaking products that are poised to supersede typical networking incumbents.

Avi Freedman recently spoke with Ethan Banks and Greg Ferro of PacketPushers about Kentik’s latest updates, which focus primarily on features that enhance network performance monitoring and DDoS protection. This post includes excerpts from that conversation as well as a link to the full podcast. Avi discusses his vision of appliance-free network monitoring, explains how host monitoring expands Kentik’s functionality, and gives an overview of how we detect and respond to anomalies and attacks.

Kentik’s recent recognition as an IDC Innovator for Cloud-Based Network Monitoring was based not only on our orientation as a cloud-based SaaS but also on the deep capabilities of Kentik Detect. In this post we look at how our purpose-built distributed architecture enables us to keep up with raw network traffic data while providing a unified network intelligence solution, including traffic analysis, performance monitoring, Internet peering, and DDoS protection.

Every so often a fundamental shift in technology sets off a culture war in the world of IT. Two decades ago, with the advent of a commercial Internet, it was a struggle between the Bellheads and the Netheads. Today, Netheads have become the establishment and cloud computing advocates are pushing to upend the status quo. In this first post of a 3-part series, analyst Jim Metzler looks at how this dynamic is playing out in IT organizations.

“NetFlow” may be the most common short-hand term for network flow data, but that doesn’t mean it’s the only important flow protocol. In fact there are three primary flavors of flow data — NetFlow, sFlow, and IPFIX — as well as a variety of brand-specific names used by various networking vendors. To help clear up any confusion, this post looks at the main flow-data protocols supported by Kentik Detect.

How does Kentik NPM help you track down network performance issues? In this post by Jim Meehan, Director of Solutions Engineering, we look at how we recently used our own NPM solution to determine if a spike in retransmits was due to network issues or a software update we’d made on our application servers. You’ll see how we ruled out the software update, and were then able to narrow the source of the issue to a specific route using BGP AS Path.

Destination-based Remotely Triggered Black-Hole routing (RTBH) is an incredibly effective and very cost-effective method of protecting your network during a DDoS attack. And with Kentik’s advanced Alerting system, automated RTBH is also relatively simple to configure. In this post, Kentik Customer Success Engineer Dan Rohan guides us through the process step by step.

As organizations increasingly rely on digital operations there’s no end in sight to the DDoS epidemic. That aggravates the headaches for service providers, who stand between attackers and their targets, but it also creates the opportunity to offer effective protection services. Done right, these services can deepen customer relationships while expanding revenue and profits. But to succeed, providers will need to embrace big data as a key element of DDoS protection.

The source of DDoS attacks is typically depicted as a hoodie-wearing amateur. But the more serious threat is actually a well-developed marketplace for exploits, with vendors whose state-of-the-art technology can easily overwhelm legacy detection systems. In this post we look at why you need the firepower of big data to fend off this new breed of commercial attackers.

Whether it’s 70s variety shows or today’s DDoS attacks, high-profile success begets replication. So the recent attack on Dyn by Mirai-marshalled IoT botnets won’t be the last severe disruption of Internet access and commerce. Until infrastructure stakeholders come together around meaningful, enforceable standards for network protection, the security and prosperity of our connected world remains at risk.

DDoS attacks pose a serious and growing threat, but traditional DDoS protection tools demand a plus-size capital budget. So many operators rely instead on manually-triggered RTBH, which is stressful, time-consuming, and error-prone. The solution is Kentik’s automated RTBH triggering, based on the industry’s most accurate DDoS detection, that sets up in under an hour with no hardware or software install.

Can legacy DDoS detection keep up with today’s attacks, or do inherent constraints limit network protection? In this post Jim Frey, Kentik VP Strategic Alliances, looks at how the limits of appliance-based detection systems contribute to inaccuracy — both false negatives and false positives — while the distributed big data architecture of Kentik Detect significantly enhances DDoS defense.

There’s a horrible tragedy taking place every day wherever legacy visibility systems are deployed: the needless slaughter of innocent NetFlow data records. NetFlow is cruelly disposed of, and the details it can reveal are blithely ignored in favor of shallow summaries. Kentik VP of Marketing Alex Henthorn-Iwane reports on this pressing issue and explains what each of us can do to help Save the NetFlow.

In this post, based on a webinar presented by Jim Frey, VP Strategic Alliances at Kentik, we look at how changes in network traffic flows (the shift to the Cloud, distributed applications, and digital business in general) have upended the traditional approach to network performance monitoring, and how NPM is evolving to handle these new realities.

Kentik recently launched Kentik NPM, a network performance monitoring solution for today’s digital business. Integrated into Kentik Detect, Kentik NPM addresses the visibility gaps left by legacy appliances, using lightweight software agents to gather performance metrics from real traffic. This podcast with Kentik CEO Avi Freedman explores Kentik NPM as a game-changer for performance monitoring.

As Gartner Research Director Sanjit Ganguli pointed out last May, network performance monitoring appliances that were designed for the era of WAN-connected data centers leave significant blind spots when monitoring modern applications that are distributed across the cloud. In this post Jim Frey, VP Strategic Alliances, explores Ganguli’s analysis and explains how Kentik NPM closes the gaps.

Network performance is mission-critical for digital business, but traditional NPM tools provide only a limited, siloed view of how performance impacts application quality and user experience. Solutions Engineer Eric Graham explains how Kentik NPM uses lightweight distributed host agents to integrate performance metrics into Kentik Detect, enabling real-time performance monitoring and response without expensive centralized appliances.

Cisco Live 2016 gave us a chance to meet with BrightTalk for some video-recorded discussions on hot topics in network operations. This post focuses on the first of those videos, in which Kentik’s Jim Frey, VP Strategic Alliances, talks about the complexity of today’s networks and how Big Data NetFlow analysis helps operators achieve timely insight into their traffic.

It was a blast taking part in our first ever Networking Field Day (NFD12), presenting our advanced and powerful network traffic analysis solution. Being at NFD12 gave us the opportunity to get valuable feedback from a knowledgeable group of network nerd and blogger delegates. See what they had to say about Kentik Detect…

The recorded music market used to be dependent on physical objects to distribute recordings to buyers. Now it’s as if our headphone cords stretch all the way from our smartphones to the datacenter. That makes network performance and availability mission-critical for music services, and for anyone else who serves ads, processes transactions, or delivers content. Which explains why some of the world’s top music services use Kentik Detect for network traffic analysis.

BGP used to be primarily of interest only to ISPs and hosting providers, but it’s become something with which all network engineers should get familiar. In this conclusion to our four-part BGP tutorial series, we fill in a few more pieces of the puzzle, including when — and when not — it makes sense to advertise your routes to a service provider using BGP.

Kentik CEO Avi Freedman joins Ethan Banks and Chris Wahl of the PacketPushers Datanauts podcast to discuss hot topics in networking today. The conversation covers the trend toward in-house infrastructure among enterprises that generate revenue from their IP traffic, the pluses and minuses of OpenStack deployments for digital enterprises, and the impact of DevOps practices on network operations.

In this post we continue our look at BGP — the protocol used to route traffic across the interconnected Autonomous Systems (AS) that make up the Internet — by clarifying the difference between eBGP and iBGP and then starting to dig into the basics of actual BGP configuration. We’ll see how to establish peering connections with neighbors and to return a list of current sessions with useful information about each.

BGP is the protocol used to route traffic across the interconnected Autonomous Systems (AS) that make up the Internet, making effective BGP configuration an important part of controlling your network’s destiny. In this post we build on the basics covered in Part 1, covering additional concepts, looking at when the use of BGP is called for, and digging deeper into how BGP can help — or, if misconfigured, hinder — the efficient delivery of traffic to its destination.

In most digital businesses, network traffic data is everywhere, but legacy limitations on collection, storage, and analysis mean that the value of that data goes largely untapped. Kentik solves that problem with post-Hadoop Big Data analytics, giving network and operations teams the insights they need to boost performance and implement innovation. In this post we look at how the right tools for digging enable organizations to uncover the value that’s lying just beneath the surface.

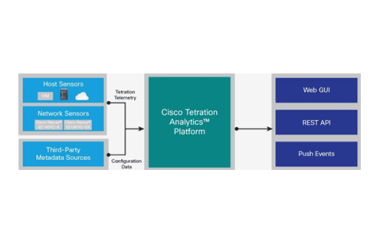

Cisco’s recently announced Tetration Analytics platform is designed to provide large and medium data centers with pervasive real-time visibility into all aspects of traffic and activity. Analyst Jim Metzler says that the new platform validates the need for a Big Data approach to network analytics as network traffic grows. But will operations teams embrace a hardware-centric platform, or will they be looking for a solution that’s highly scalable, supports multiple vendors, doesn’t involve large up-front costs, and is easy to configure and use?

Cisco’s announcement of Tetration Analytics means that IT and network leaders can no longer ignore the need for Big Data intelligence. In this post, Kentik CEO Avi Freedman explains how that’s good for Kentik, which has been pushing hard for this particular industry transition. Cisco’s appliance-based approach is distinct from the SaaS option that most customers choose for Kentik Detect, but Tetration’s focus on Big Data analytics provides important validation that Kentik is on the right track.

Network security depends on comprehensive, timely understanding of what’s happening on your network. As explained by information security executive and analyst David Monahan, among the key value-adds of Kentik Detect are the ways in which it enables network data to be applied (without add-ons or additional charges) to identify and resolve security issues. Monahan provides two use cases that illustrate how the ability to filter out and/or drill down on dimensions such as GeoIP and protocol can tip you off to security threats.

CIOs focus on operational issues like network and application performance, uptime, and workflows, while CISOs stress about malware, access control, and data exfiltration. The intersection of these concerns is the network, so it seems evident that CIOs and CISOs should work together instead of clashing over budgets and tools. In this post, network security analyst David Monahan makes the case for finding a solution that can keep both departments continuously and comprehensively well-informed about infrastructure, systems, and applications.

This guest post brings a security perspective to bear on network visibility and analysis. Information security executive and analyst David Monahan underscores the importance of being able to collect and contextualize information in order to protect the network from malicious activity. Monahan explores the capabilities needed to support numerous network and security operations use cases, and describes Kentik Detect as a next-generation flow analytics solution with high performance, scalability, and flexibility.

Today’s MSPs frequently find themselves without the insights needed to answer customer questions about network performance and security. To maintain customer confidence, MSPs need answers that they can only get by pairing infrastructure visibility with traffic analysis. In this post, guest contributor Alex Hoff of Auvik Networks explains how a solution combining those capabilities enables MSPs to win based on customer service.

Intelligent use of network management data can enable virtually any company to transform itself into a successful digital business. In our third post in this series, we look at areas where traditional network data management approaches are falling short, and we consider how a Big Data platform that provides real-time answers to ad-hoc queries can empower IT organizations and drive continuous improvement in both business and IT operations.

Traffic can get from anywhere to anywhere on the Internet, but that doesn’t mean all networks are directly connected. Instead, each network operator chooses the networks with which to connect. Both business and technical considerations are involved, and the ability to identify prime candidates for peering or transit offers significant competitive advantages. In this post we look at the benefits of intelligent interconnects and how networks can find the best peers to connect with.

The network data collected by Kentik Detect isn’t limited to portal-only access; it can also be queried via SQL client or using Kentik’s RESTful APIs. In this how-to, we look at how service providers can use our Data Explorer API to integrate traffic graphs into a customer portal, creating added-value content that can differentiate a provider from its competitors while keeping customers committed and engaged.

Looking ahead to tomorrow’s economy, today’s savvy companies are transitioning into the world of digital business. In this post — the second of a three-part series — guest contributor Jim Metzler examines the key role that Big Data can play in that transformation. By revolutionizing how operations teams collect, store, access, and analyze network data, a Big Data approach to network management enables the agility that companies will need to adapt and thrive.

Kentik Detect’s backend is Kentik Data Engine (KDE), a distributed datastore that’s architected to ingest IP flow records and related network data at backbone scale and to execute exceedingly fast ad-hoc queries over very large datasets, making it optimal for both real-time and historical analysis of network traffic. In this series, we take a tour of KDE, using standard Postgres CLI query syntax to explore and quantify a variety of performance and scale characteristics.

NetFlow and IPFIX use templates to extend the range of data types that can be represented in flow records. sFlow addresses some of the downsides of templating, but in so doing takes away the flexibility that templating allows. In this post we look at the pros and cons of sFlow, and consider what the characteristics might be of a solution that can support templating without the shortcomings of current template-based protocols.

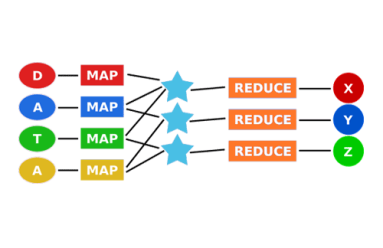

As the first widely accessible distributed-computing platform for large datasets, Hadoop is great for batch processing data. But when you need real-time answers to questions that can’t be fully defined in advance, the MapReduce architecture doesn’t scale. In this post we look at where Hadoop falls short, and we explore newer approaches to distributed computing that can deliver the scale and speed required for network analytics.

NetFlow and its variants like IPFIX and sFlow seem similar overall, but beneath the surface there are significant differences in the way the protocols are structured, how they operate, and the types of information they can provide. In this series we’ll look at the advantages and disadvantages of each, and see what clues we can uncover about where the future of flow protocols might lead.

The plummeting cost of storage and CPU allows us to apply distributed computing technology to network visibility, enabling long-term retention and fast ad hoc querying of metadata. In this post we look at what network metadata actually is and how its applications for everyday network operations — and its benefits for business — are distinct from the national security uses that make the news.

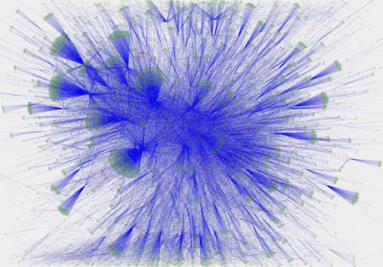

In part 2 of this series, we look at how Big Data in the cloud enables network visibility solutions to finally take full advantage of NetFlow and BGP. Without the constraints of legacy architectures, network data (flow, path, and geo) can be unified and queries covering billions of records can return results in seconds. Meanwhile the centrality of networks to nearly all operations makes state-of-the-art visibility essential for businesses to thrive.

Clear, comprehensive, and timely information is essential for effective network operations. For Internet-related traffic, there’s no better source of that information than NetFlow and BGP. In this series we’ll look at how we got from the first iterations of NetFlow and BGP to the fully realized network visibility systems that can be built around these protocols today.

Border Gateway Protocol (BGP) is a policy-based routing protocol that has long been an established part of the Internet infrastructure. Understanding BGP helps explain Internet interconnectivity and is key to controlling your own destiny on the Internet. With this post we kick off an occasional series explaining who can benefit from using BGP, how it’s used, and the ins and outs of BGP configuration.

By actively exploring network traffic with Kentik Detect you can reveal attacks and exploits that you haven’t already anticipated in your alerts. In previous posts we showed a range of techniques that help determine whether anomalous traffic indicates that a DDoS attack is underway. This time we dig deeper, gathering the actionable intelligence required to mitigate an attack without disrupting legitimate traffic.

Kentik Detect is powered by Kentik Data Engine (KDE), a massively-scalable distributed HA database. One of the challenges of optimizing a multitenant datastore like KDE is to ensure fairness, meaning that queries by one customer don’t impact performance for other customers. In this post we look at the algorithms used in KDE to keep everyone happy and allocate a fair share of resources to every customer’s queries.

With massive data capacity and analytical flexibility, Kentik Detect makes it easy to actively explore network traffic. In this post we look at how to use this capability to rapidly discover and analyze interesting and potentially important DDoS and other attack vectors. We start with filtering by source geo, then zoom in on a time-span with anomalous traffic. By looking at unique source IPs and grouping traffic by destination IP we find both the source and the target of an attack.

If your network visibility tool lets you query only those flow details that you’ve specified in advance then you’re likely vulnerable to threats that you haven’t anticipated. In this post we’ll explore how SQL querying of Kentik Detect’s unified, full-resolution datastore enables you to drill into traffic anomalies, to identify threats, and to define alerts that notify you when similar issues recur.

Kentik Detect handles tens of billions of network flow records and many millions of sub-queries every day using a horizontally scaled distributed system with a custom microservice architecture. Instrumentation and metrics play a key role in performance optimization.

The team at Kentik recently tweeted: “#Moneyball your network with deeper, real-time insights from #BigData NetFlow, SNMP & BGP in an easy to use #SaaS.” There are a lot of concepts packed into that statement, so we thought it would be worth unpacking for a closer look.

For many of the organizations we’ve all worked with or known, SNMP gets dumped into RRDTool, and NetFlow is captured into MySQL. This arrangement is simple, well-documented, and works for initial requirements. But simply put, it’s not cost-effective to store flow data at any scale in a traditional relational database.

Taken together, three additional attributes of Kentik Detect — self-service, API-enabled, and multi-tenant — further enhance the fundamental advantages of Kentik’s cloud-based big data approach to network visibility.