Summary

Kubernetes is a powerful platform for large-scale distributed systems, but out of the box, it doesn’t address all the needs of complex enterprise systems deployed across multiple clouds and private data centers. Service meshes fill that gap by connecting multiple clusters into a cohesive mesh.

As today’s enterprises shift to the cloud, Kubernetes has emerged as the de facto platform for running containerized microservices. But while Kubernetes manages workloads within a single cluster, enterprises typically run their applications across multiple clusters, often a complex and confusing mix deployed to multiple cloud providers and private data centers.

This setup raises some hard questions. How do your services find each other? How do they communicate securely? How do you enforce access and communication policies? How do you troubleshoot problems and monitor health?

Even on a single cluster, these are not trivial concerns. In a multi- or hybrid-cloud setup, the complexity can be overwhelming.

In this post, we’ll explore how service meshes — specifically, multi-cluster service meshes — can help solve these challenges.

Let’s first look at how multi-cluster connectivity works in Kubernetes.

Understanding Kubernetes multi-cluster connectivity

Kubernetes operates in terms of clusters. A cluster is a set of nodes that run containerized applications.

Every cluster has two components:

- A control plane, which is responsible for maintaining the cluster's desired state (which applications are running and which container images they use). The control plane also hosts the API server, through which users and cluster components communicate with the cluster.
- A data plane, made up of one or more worker nodes that run the actual applications. Each node runs pods, and pods are the smallest deployable units of computing in Kubernetes: a pod is a group of one or more containers.

When running a single cluster, this all works well. The challenge comes with multiple clusters because the API server in the control plane is only aware of the nodes, pods, and services in its own cluster.

So, when you run multiple Kubernetes clusters and want workloads to talk to one another across clusters, Kubernetes (alone) can’t help you. You need some sort of networking solution to connect your Kubernetes clusters.

There are two main models for multi-cluster connectivity: global flat IP address space and gateway-based connectivity. Let’s look at each.

Global flat IP address space

In this model, every pod across all Kubernetes clusters has a private IP address that is unique across all clusters and reachable from every other cluster. The networks of all the clusters are peered together to form a single conceptual network, which may require technologies such as cross-cloud VPNs or interconnects when clusters span clouds. This model requires tight control over the networks and subnets used by every Kubernetes cluster: the engineering team must centrally manage the global IP address space to ensure there are no IP address conflicts.
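Central IP management mostly comes down to one invariant: no two clusters may hand out overlapping pod ranges. Here's a minimal Python sketch of that bookkeeping using the standard `ipaddress` module. The cluster names, the CIDRs, and the `find_conflicts` and `allocate_next` helpers are hypothetical, and real IP address management also has to track node ranges, service ranges, VPC subnets, and reservations.

```python
import ipaddress
from itertools import combinations

# Hypothetical pod CIDR assignments for three clusters sharing one flat network.
# Names and ranges are illustrative assumptions, not recommendations.
POD_CIDRS = {
    "aws-us-east": "10.0.0.0/16",
    "gcp-europe": "10.1.0.0/16",
    "onprem-dc1": "10.2.0.0/16",
}


def find_conflicts(pod_cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of clusters whose pod CIDRs overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in pod_cidrs.items()}
    conflicts = []
    for (a, net_a), (b, net_b) in combinations(networks.items(), 2):
        if net_a.overlaps(net_b):
            conflicts.append((a, b))
    return conflicts


def allocate_next(pod_cidrs: dict[str, str], supernet: str, prefixlen: int) -> str:
    """Return the first subnet of the given size in the supernet that no cluster uses."""
    used = [ipaddress.ip_network(c) for c in pod_cidrs.values()]
    for candidate in ipaddress.ip_network(supernet).subnets(new_prefix=prefixlen):
        if not any(candidate.overlaps(u) for u in used):
            return str(candidate)
    raise RuntimeError("global address space exhausted")
```

With the sample data, `allocate_next(POD_CIDRS, "10.0.0.0/8", 16)` hands a fourth cluster `10.3.0.0/16`, while a proposed `10.1.128.0/17` range would be flagged as clashing with `gcp-europe`. This is the kind of check the team has to enforce before peering a new cluster into the flat network.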

Gateway-based connectivity

In this model, each cluster has its own private IP address space that is not exposed to other clusters, so multiple clusters can reuse the same private IP addresses. Each cluster also has its own gateway (or load balancer), which knows how to route incoming requests to individual services within the cluster. The gateway has a public IP address, and cross-cluster traffic always goes through the destination cluster's gateway. This adds one extra hop to every cross-cluster request.
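To see why clusters can reuse private addresses under this model, here's a toy Python model of a per-cluster gateway. The `ClusterGateway` class, the `cross_cluster_path` helper, the cluster names, and the addresses (public IPs taken from documentation ranges) are illustrative assumptions; a real gateway is a load balancer or proxy, not a dictionary lookup.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterGateway:
    """Hypothetical model of a per-cluster gateway with a public address."""
    cluster: str
    public_ip: str
    # Service name -> private, cluster-local IP (never exposed outside the cluster).
    services: dict[str, str] = field(default_factory=dict)

    def route(self, service: str) -> str:
        """Resolve an incoming request to the service's private in-cluster IP."""
        if service not in self.services:
            raise LookupError(f"{service} not found in {self.cluster}")
        return self.services[service]


def cross_cluster_path(source_cluster: str, dest: ClusterGateway, service: str) -> list[str]:
    """List the hops a request takes from a pod in source_cluster to a service behind dest."""
    return [
        f"pod@{source_cluster}",
        f"gateway@{dest.cluster} ({dest.public_ip})",  # the extra hop
        f"{service}@{dest.cluster} ({dest.route(service)})",
    ]
```

Two clusters can both place a `payments` service at `10.0.0.5` because callers in other clusters only ever address the destination gateway's public IP; the cost is the gateway hop in the middle of every cross-cluster path.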

Now let’s look at how these models apply to service meshes in general, and then to cross-cloud service meshes in particular.

The service mesh: a brief primer

A service mesh is a software layer that monitors and controls traffic between services over a network. Like Kubernetes, a service mesh has a data plane and a control plane.

The data plane consists of proxies running alongside every service. These proxies intercept every request between services in the network, apply any relevant policies, decide how to handle the request, and, if the request is approved and authorized, decide how to route it.

The data plane can follow one of two models for these proxies: the sidecar model or the host model. With the sidecar model, a mesh proxy is attached as a sidecar container to every pod. With the host model, every node runs an agent that serves as the proxy for all workloads running on that node.
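The per-request work a proxy does is the same whether it runs as a sidecar or as a per-node agent. The following Python sketch models it with a hypothetical `MeshProxy` that enforces an allow-list of callers and then round-robins across the destination's endpoints. The policy table, endpoint table, and status strings are assumptions for illustration; production proxies do this at the connection and HTTP layers with much richer policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source: str       # identity of the calling service
    destination: str  # logical name of the target service
    path: str


# Hypothetical configuration that a control plane would push to the proxy.
ALLOWED_CALLERS = {"orders": {"frontend"}, "payments": {"orders"}}
ENDPOINTS = {"orders": ["10.0.1.4", "10.0.1.5"], "payments": ["10.0.2.7"]}


class MeshProxy:
    """Toy proxy: authorize each intercepted request, then pick an endpoint."""

    def __init__(self) -> None:
        self._counters: dict[str, int] = {}

    def handle(self, req: Request) -> str:
        # 1. Apply policy: is this caller allowed to talk to the destination?
        if req.source not in ALLOWED_CALLERS.get(req.destination, set()):
            return "403 denied"
        # 2. Route: round-robin across the destination's known endpoints.
        endpoints = ENDPOINTS.get(req.destination)
        if not endpoints:
            return "503 no healthy upstream"
        i = self._counters.get(req.destination, 0)
        self._counters[req.destination] = i + 1
        return f"forward to {endpoints[i % len(endpoints)]}"
```

Because the allow-list and endpoints live in configuration rather than in application code, the services themselves stay unaware of policy and load balancing.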

The control plane exposes an API that the mesh operator uses to configure the policies governing the mesh's behavior. The control plane also enables routing: it discovers all the data plane proxies and keeps them updated with the locations of services throughout the entire mesh.
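Here's a minimal Python sketch of that discover-and-update loop. The `ControlPlane` class and its methods are hypothetical. Real control planes handle versioning, incremental updates, and failures that this toy ignores; Istio's, for example, pushes configuration to its Envoy proxies over the xDS APIs.

```python
class ControlPlane:
    """Toy control plane: tracks proxies and pushes the mesh-wide endpoint view to them."""

    def __init__(self) -> None:
        self.endpoints: dict[str, set[str]] = {}          # service -> addresses
        self.proxies: dict[str, dict[str, list[str]]] = {}  # proxy id -> last pushed view

    def register_proxy(self, proxy_id: str) -> None:
        # Discovery: a newly seen proxy immediately receives the current view.
        self.proxies[proxy_id] = self._snapshot()

    def register_endpoint(self, service: str, address: str) -> None:
        self.endpoints.setdefault(service, set()).add(address)
        self._push()

    def remove_endpoint(self, service: str, address: str) -> None:
        self.endpoints.get(service, set()).discard(address)
        self._push()

    def _snapshot(self) -> dict[str, list[str]]:
        return {svc: sorted(addrs) for svc, addrs in self.endpoints.items()}

    def _push(self) -> None:
        # Push a fresh copy of the view to every connected proxy.
        for proxy_id in self.proxies:
            self.proxies[proxy_id] = self._snapshot()
```

A proxy that joins late, in any cluster, still gets every known endpoint, and removing an endpoint updates all proxies at once. That shared, mesh-wide view is what lets proxies route across cluster boundaries.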

What is a cross-cloud service mesh?

A cross-cluster service mesh is a service mesh that connects workloads running on different Kubernetes clusters, and potentially on standalone VMs. When those clusters span multiple cloud providers, the result is a cross-cloud service mesh. From a technical standpoint, however, there is little difference between a cross-cluster and a cross-cloud service mesh.

A cross-cloud service mesh brings all the benefits of a general service mesh, plus a single view into multiple clouds. There are two primary deployment models, analogous to the multi-cluster connectivity models discussed above.

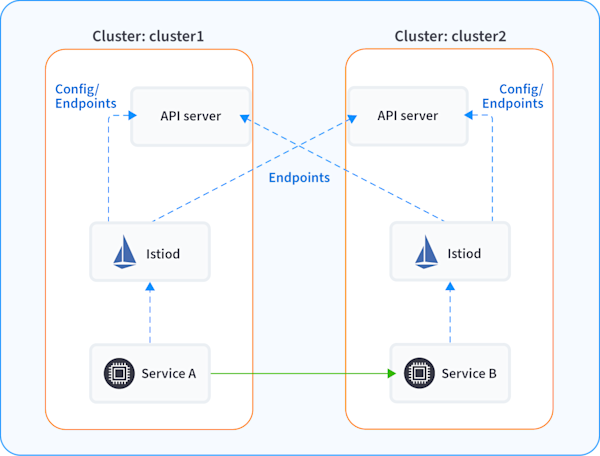

The first model is a single conceptual network. In practice, that network could be composed of multiple, peered physical networks. Multiple clusters share the same global private IP address space, and every pod has a unique private IP address across all clusters. The following diagram shows an Istio mesh deployment across two clusters that belong to the same conceptual network.

Services in cluster1 can directly talk to services in cluster2 via private IP addresses.

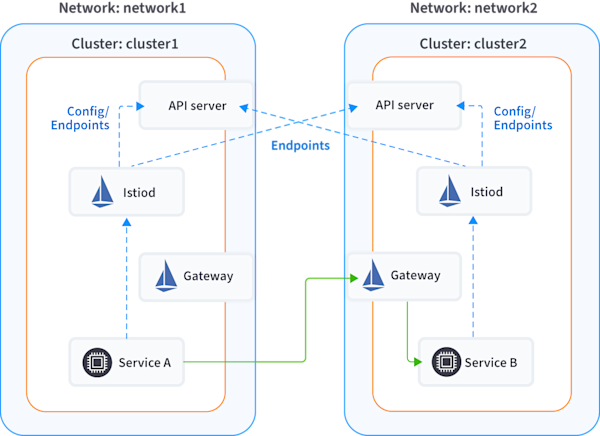

The second model is a federation of isolated clusters in which the internal IP addresses of each cluster are not exposed to the other clusters. Each cluster exposes a gateway, and services in one cluster talk to services in other clusters through the gateway. The following diagram shows an Istio mesh deployment across two clusters that belong to two separate networks.

Services within a cluster can talk to one another directly using their private IP addresses. However, communication between clusters must go through a gateway with a public IP address.
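The practical difference between the two models shows up when a proxy picks the address to dial. Below is a small Python sketch, loosely inspired by how Istio treats endpoints on other networks: if the endpoint is on the caller's network, dial the pod IP directly; otherwise, dial that network's gateway. The `Endpoint` type, the network names, and the gateway table are assumptions for illustration, not Istio's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    cluster: str
    network: str
    pod_ip: str


# Hypothetical gateway address for each network (multi-network model).
NETWORK_GATEWAYS = {"network1": "203.0.113.10", "network2": "198.51.100.20"}


def resolve(caller_network: str, endpoint: Endpoint) -> str:
    """Return the address a caller on caller_network should dial to reach endpoint."""
    if endpoint.network == caller_network:
        # Same conceptual network: pod IPs are routable, so connect directly.
        return endpoint.pod_ip
    # Different network: the pod IP is private there, so go through its gateway.
    return NETWORK_GATEWAYS[endpoint.network]
```

In the single-network model every endpoint shares the caller's network, so `resolve` always returns a pod IP; only the multi-network model pays for the extra gateway hop.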

Benefits of using a cross-cloud service mesh with Kubernetes

As noted in the introduction, understanding an enterprise’s overall networking and security posture, with all its components, is very demanding. A cross-cloud service mesh helps by giving you one consistent way to connect large-scale enterprise systems across clusters and clouds. This brings several benefits.

First, a cross-cloud service mesh provides a one-stop shop for observability, visualization, health checks, policy management, and policy enforcement. Offloading concerns such as authentication, authorization, metrics collection, and health checks from individual services to a shared layer (the cross-cloud service mesh) is a big deal, because each service no longer has to implement them on its own. Think of it as “aspect-oriented programming in the cloud.”

Second, this approach helps an organization realize the vision of microservices by letting teams focus on the business logic of their applications and services. Teams can pick the best tool for the job and write their microservices in any programming language, while the service mesh combines them into a cohesive whole. There is no need to develop, maintain, and upgrade client libraries in every supported programming language just to handle the networking complexity of a multi-cloud environment.

And third, a cross-cloud service mesh allows organizations to deploy their services to cloud providers that make the most sense for them based on cost, convenience, or other factors. Instead of being locked into a single vendor, organizations can use cross-cloud service meshes to connect all of their clusters and services together, regardless of the cloud that each one runs in.

Service mesh examples

There are several successful service meshes. Here are some of the most mature and popular service mesh projects:

Every one of these service meshes can be set up for multi-cluster environments, allowing enterprises to use them as cross-cloud service meshes.

Conclusion

Kubernetes is a powerful platform for large-scale distributed systems, but out of the box, it doesn’t address all the needs of complex enterprise systems deployed across multiple clouds and private data centers. Service meshes fill that gap by connecting multiple clusters into a cohesive mesh.

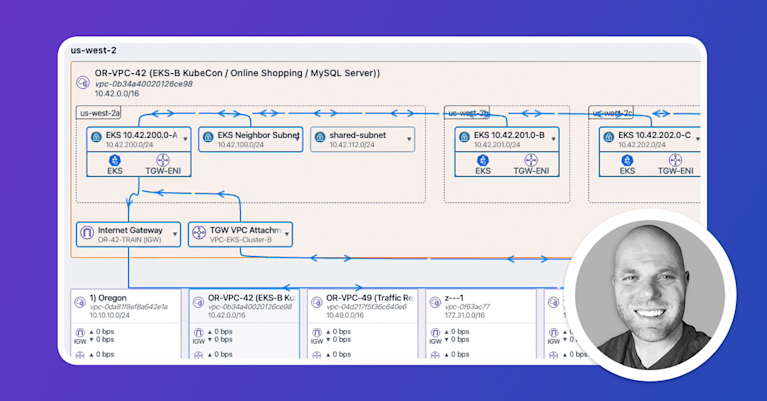

While a service mesh helps your enterprise with cross-cloud connectivity, gaining a clear picture of everything you’re running across your clouds is still challenging. That’s where Kentik Cloud steps in. Kentik Cloud gives you the tools you need to monitor, visualize, optimize, and troubleshoot your network, whether you run multi-cloud or hybrid cloud, and whether or not your deployments use Kubernetes. As your systems grow and spread across more clusters and clouds, the ability to see everything through a single pane of glass is critical.

Read about Kentik’s multi-cloud solutions for more details.