Kentik Blog

As one of 2017’s hottest networking technologies, SD-WAN is generating a lot of buzz, including at last week’s Cisco Live. But as enterprises rely on SD-WAN to enable Internet-connected services — thereby bypassing Carrier MPLS charges — they face unfamiliar challenges related to the security and availability of remote sites. In this post we take a look at these new threats and how Kentik Detect helps protect against and respond to attacks.

Verizon’s deal with Yahoo may still be on the minds of many, but Disney may be Verizon’s new big brand-of-interest. Also on acquisitions, Forrester’s CEO says Apple should buy IBM. Juniper Research released a list of the most promising 5G operators in Asia. And the UN released a list of countries with cybersecurity gaps. More after the jump…

Obsolete architectures for NetFlow analytics may seem merely quaint and old-fashioned, but the harm they can do to your network is no fairy tale. Without real-time, at-scale access to unsummarized traffic data, you can’t fully protect your network from hazards like attacks, performance issues, and excess transit costs. In this post we compare three database approaches to assess the impact of system architecture on network visibility.

This week Cisco made a series of product announcements, including intent-based networking for automation. NTT launched an SD-WAN. An open Amazon S3 server exposed millions of voters’ PII. A group proposed a way to reduce BGP route leaks. More after the jump…

NetFlow data has a lot to tell you about the traffic across your network, but it may require significant resources to collect. That’s why many network managers choose to collect flow data on a sampled subset of total traffic. In this post we look at some testing we did here at Kentik to determine if sampling prevents us from seeing low-volume traffic flows in the context of high overall traffic volume.

The NSA officially blamed North Korea for the WannaCry ransomware attacks. A Virginia school got creative to keep its students on fast broadband. Ericsson predicts a 5G user spike. The tabs versus spaces programmer debate continues. And the Kentik team advises on avoiding AWS downtime and shares skills that DevOps engineers should know. All that and more after the jump…

Telecom and mobile operators are clear on both the need and the opportunity to apply big data for advanced operational analytics. But when it comes to being data driven, many telecoms are still a work in progress. In this post we look at the state of this transformation, and how cloud-aware big data solutions enable telecoms to escape the constraints of legacy appliance-based network analytics.

The meaning of SDN has changed, at least if you’re working in Cisco’s Security Business, where it now means “security-defined networking,” the unit’s current focus. SD-WAN is still hogging the spotlight, but CenturyLink says it’s “no quick fix.” Meanwhile, containers are a big part of AT&T’s network strategy. “Not everything is suited for virtual machines,” said AT&T’s CTO. More after the jump…

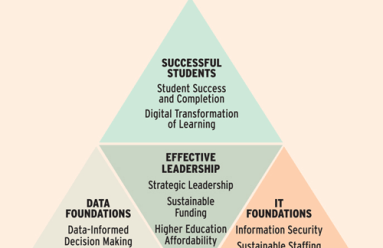

In higher education, embracing the cloud enhances your ability to achieve successful outcomes for students, researchers, and the organization as a whole. But just as in business, this digital transformation can succeed only if it’s anchored by modern network visibility. In this post we look at the network as more than mere plumbing, identifying how big data network intelligence helps realize high-priority educational goals.

In headlines this week, investor Mary Meeker released her annual “Internet Trends” report, which includes internet growth across regions. SD-WAN is not top-of-mind for IT professionals, according to a new survey. And open source networking tools may be getting better, but they still pose a lot of challenges. More after the jump…