Speeding Up the Web: A Comprehensive Guide to Content Delivery Networks and Embedded Caching

Summary

Content delivery networks are an important part of the internet, as they ensure a short path between content and its consumers. The idea of placing CDN caches inside ISPs’ networks emerged early in the days of CDNs. A growing number of CDNs offer this, and ISPs all over the world take advantage of it. This post explains how embedding works and what to look out for to do it right.

What is a CDN?

Content delivery networks (CDNs), also called content distribution networks, emerged in the 90s, early in the internet’s history, when content on the internet grew “richer,” moving from text to images and video. Akamai was one of the first CDNs and remains a strong player in today’s market.

A high-level definition of a CDN is:

- A collection of geographically distributed caches

- A method to place content on the caches

- A method to steer end users to the closest caches

The purpose of a CDN is to place the data-heavy content or latency-sensitive content as close to the content consumers as possible – in whatever sense close means. (More on that later.)

Most website publishers use CDN services to deliver their sites and applications to ensure reliable and responsive performance for their end users. CDN services just make sense. If a publisher in the U.S. has many visitors from Africa, it isn’t efficient to send that content across multiple terrestrial and submarine cables to reach the end user every time it’s requested. It makes more sense to store this content locally when first requested and then serve it to subsequent African viewers locally. End users get a higher-quality viewing experience, and it reduces expensive traffic on those backbone networks.

What is embedding?

The need to have the content close to the content consumers fostered the idea of placing caches belonging to the CDN inside the access network’s border. This idea was novel and still challenges the mindset of network operators today.

We call such caches “embedded caches.” They are usually intended only to serve end users in that network. These caches often use address space originating in the ISP’s ASN and not in the CDN’s.

Who are the players?

Online video viewership really increased the demand for CDN services. Internet video consumption started in the late 1990s, and OTT streaming services have accelerated the growth in online video consumption. In the early days, specialized video CDNs such as Mark Cuban’s Broadcast.com (acquired by Yahoo) and INTERVU (acquired by Akamai) served the market. By the early 2000s, CDNs had to have a video delivery solution to compete. In 2023, work-from-home and e-learning applications continue to drive video consumption growth.

The CDN market has only become more active. Traditional players like Akamai, Lumen, Tata, and Edgio still generate a large share of their business from traditional content delivery, but these services have become commoditized. For these players to grow their business and compete with newer entrants like Cloudflare, Fastly, and Stackpath, they are leveraging their distributed infrastructure to offer a more diverse and specialized set of services like security services and DDoS protection, high-performance real-time delivery services, as well as moving into public cloud services.

Large content producers who use commercial CDNs often use more than one. This supports different types of content, leverages different geographical strengths, creates resilience, and gives the producer better leverage when negotiating contracts.

Giant content producers like Netflix, Apple, Microsoft, Amazon, Facebook, and Google have all built their own specialized CDN to support their core services. Some also compete in the marketplace selling CDN services – including Amazon, Google, and Microsoft. In 2023, CDNs are looking for ways to leverage their investment in their distributed infrastructure by identifying high-growth services traditionally offered by public clouds. CDNs are now offering storage and compute services. Akamai recently closed on acquiring Linode to add cloud compute to its service offerings.

The major CDNs that offer an embedded solution to ISPs are, among others:

- Akamai

- Netflix

- Amazon

- Cloudflare

- CDN77

- Microsoft

- Apple

- Qwilt

In the SIGCOMM ’21 presentation, “Seven years in the life of Hypergiants’ off-nets,” Petros Gigis and team found that more than 4,500 networks globally in 2021 had embedded caches from at least one of the CDNs, a number that tripled from 2013 to 2021. Google, Netflix, Facebook, and Akamai are by far the most widely deployed embedded caches, with almost all of the 4,500 networks hosting at least one and often two or more of the four.

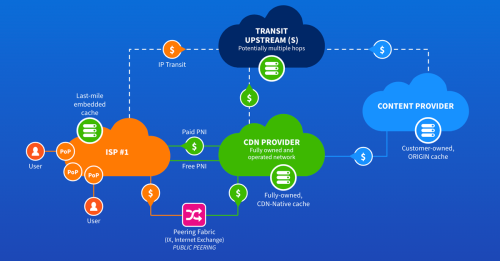

The benefit of embedding

The benefit for the CDN is that the content it serves can be placed closer to the consumer. For the ISP, the benefit is primarily savings on the internet edge in the form of transit and capacity costs. Depending on the type of embedded deployment, some ISPs can also save capacity on their internal network. Traffic from CDNs rarely creates revenue for the ISP, as most CDNs prefer peering over buying transit from end-user ISPs, so it becomes crucial to use as little of the network as possible to deliver the traffic to consumers.


The downside of embedding

The challenge for ISPs is the added complexity of operating caches managed by other networks inside their network border. On top of that, space and cooling needs differ from what their own equipment needs. Complicating matters further is that these embedded caches’ space and cooling needs differ from one CDN to the next.

Another reported downside is that some embedded CDNs’ operational processes are misaligned with the ISPs’ operational processes. Expectations of access to the caches or speed in physical replacements are sometimes misaligned.

Offload – what to expect

A common surprise for ISPs new to embedded CDN caches is that they rarely see 100% of the traffic from the CDN served from the embedded caches. There are several reasons why we see some traffic from the CDN over the network edges.

To understand this, let’s examine the ways content is placed on the embedded caches (or the caches in the CDN in general).

Different ways of placing content and embedding – illustrated by typical traffic profiles from select Kentik customers

A few CDNs, most prominently Netflix Open Connect, push the content to the caches in the CDN. A frequent calculation determines which files should be placed where, and the system then distributes the files during low-demand hours, providing two significant benefits:

- The fill traffic runs on network connections outside of peak hours, lowering the strain on the ISP’s interconnection capacity.

- The caches’ resources can be fully used to serve traffic in peak demand hours, since no resources are spent storing new content files during these times.

For ISP partners, this means they will see the fill traffic during the night, and if they agree to let their embedded caches fill from each other, the amount of file downloads to the caches from outside the network is minimal, as you can see in the example below.

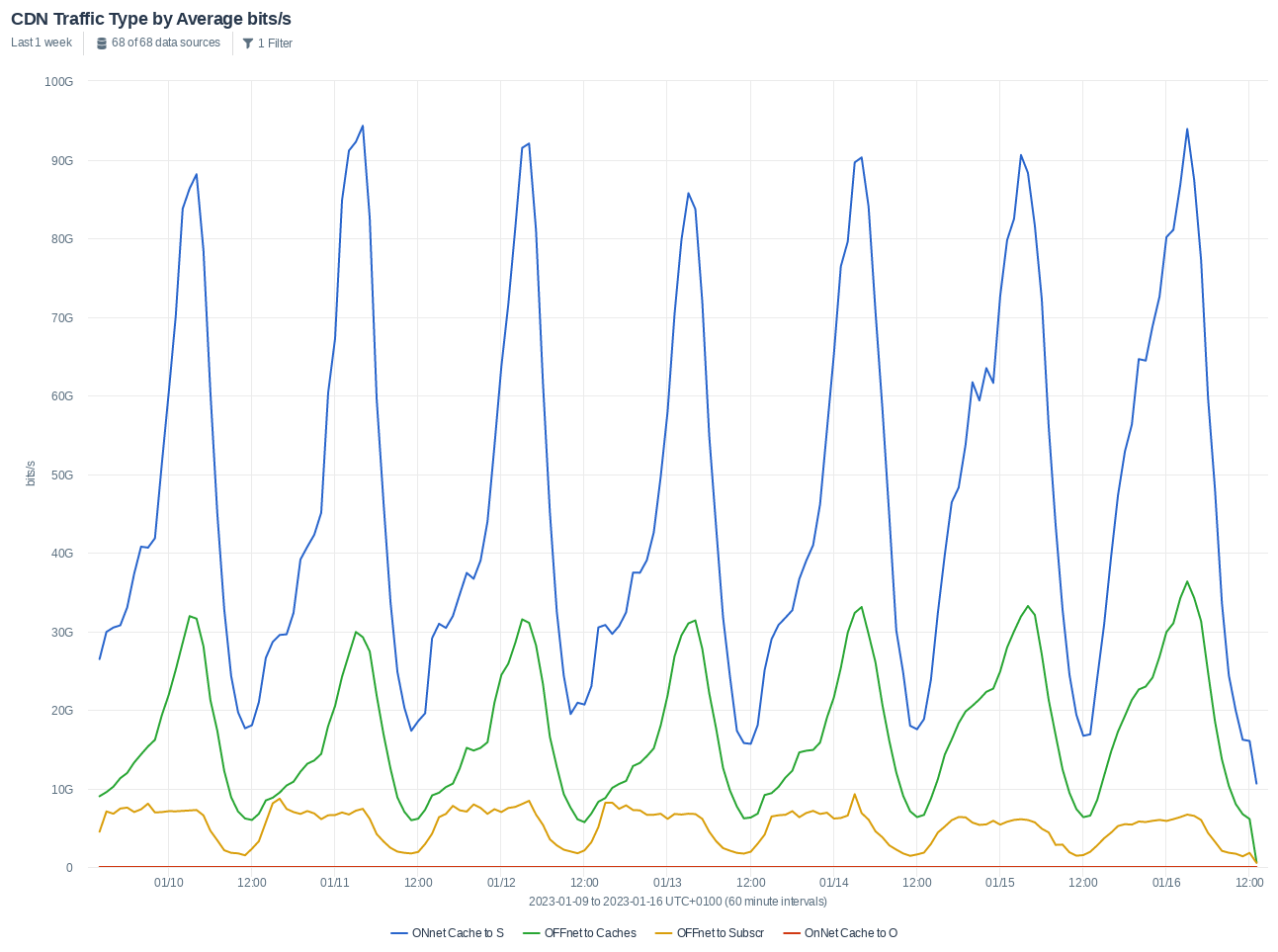

The graph shows the traffic profile of Netflix traffic for a network with a well-dimensioned Open Connect deployment. The fill traffic over the network border is the smallest spike, with the cache-to-cache fill having a more considerable spike at night.

Notice the large spikes in the peak hours of traffic served from the embedded caches. In the graph, we can also see a traffic peak from outside of the network to the end users during the peak hours. This long tail is the content that was not placed on the embedded caches because the expected demand was not high enough. The total catalog is too extensive for the amount of disk space in a typical embedded cluster, so some content will need to be served from the larger POPs of the CDN.
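To make the push model concrete, here is a minimal sketch of a placement calculation. It is not Netflix's actual algorithm: it assumes a simple greedy rule (most expected requests per GB first), and the `Title` fields, the demand numbers, and the disk size are all made up for illustration. Whatever does not fit on disk becomes the long tail served from outside the network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Title:
    name: str
    size_gb: float
    expected_requests: int  # hypothetical demand forecast for the next day


def plan_fill(catalog, disk_gb):
    """Greedy placement: highest expected requests per GB first, until the disk is full.

    Returns (placed, long_tail). Titles in long_tail would be served from
    the CDN's larger POPs instead of the embedded cache.
    """
    ranked = sorted(
        catalog,
        key=lambda t: t.expected_requests / t.size_gb,
        reverse=True,
    )
    placed, long_tail, used_gb = [], [], 0.0
    for title in ranked:
        if used_gb + title.size_gb <= disk_gb:
            placed.append(title)
            used_gb += title.size_gb
        else:
            long_tail.append(title)
    return placed, long_tail


# Illustrative catalog; none of these figures come from a real deployment.
catalog = [
    Title("popular-series", 40.0, 9000),
    Title("new-release", 30.0, 6000),
    Title("documentary", 20.0, 400),
    Title("niche-classic", 25.0, 50),
]
placed, long_tail = plan_fill(catalog, disk_gb=80.0)
print("fill overnight:", [t.name for t in placed])
print("long tail:", [t.name for t in long_tail])
```

A real system would weigh far more than popularity per gigabyte, but the shape is the same: the plan is computed ahead of time, so the fill itself can run off-peak.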

When the CDN uses pull to place the content, the end user’s request for content triggers the content to be downloaded to the embedded cache. The traffic from outside the network to the embedded caches peaks at the peak hour for the content download to end users. Like before, a long tail of content is served directly to the end users from outside the network. This is most often content that the content owners decided wasn’t worth caching, as high demand was not expected. It might be more dynamic content, such as content personalized for the end viewer or with a specific security profile. Finally, some CDNs will serve the file directly to the end user and then subsequently store it on the embedded caches after the first request for future delivery.
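The pull model behaves like a pull-through cache. The sketch below is an assumption-laden illustration, not any CDN's implementation: it uses least-recently-used eviction, a made-up `PullThroughCache` class, and a stand-in `fetch` function in place of a real upstream request. It shows why fill traffic follows end-user demand: every miss triggers a download from outside the network at the moment the user asks.

```python
from collections import OrderedDict


class PullThroughCache:
    """Toy pull-through cache with least-recently-used eviction."""

    def __init__(self, capacity, fetch_from_upstream):
        self.capacity = capacity
        self.fetch_from_upstream = fetch_from_upstream  # hypothetical upstream call
        self.store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.store:
            self.store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.store[key]
        # Miss: the end user's request triggers the download to the cache.
        self.misses += 1
        body = self.fetch_from_upstream(key)
        self.store[key] = body
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used object
        return body


upstream_requests = []


def fetch(key):
    """Stand-in for a request to the CDN's larger POP."""
    upstream_requests.append(key)
    return f"bytes of {key}"


cache = PullThroughCache(capacity=2, fetch_from_upstream=fetch)
for key in ["a", "b", "a", "c", "b"]:
    cache.get(key)
print(cache.hits, cache.misses, upstream_requests)
```

With a cache this small, object `b` is evicted and fetched twice, which is the same effect an undersized embedded cluster has on traffic over the network edge.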

So deploying embedded caches from the major CDN sources of the inbound traffic can save ISPs significant traffic and capacity on the network edge. Not all the traffic, but most of the traffic, can be moved away.
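One way to put a number on this is an offload ratio: the share of a CDN's traffic to your end users that is served from the embedded caches. The function name and the traffic figures below are hypothetical; plug in your own measurements per CDN.

```python
def offload_ratio(embedded_bps: float, edge_bps: float) -> float:
    """Share of a CDN's traffic served from embedded caches (0.0 to 1.0).

    embedded_bps: traffic from embedded caches to end users
    edge_bps:     traffic from the same CDN arriving over peering or transit
    """
    total = embedded_bps + edge_bps
    if total <= 0:
        raise ValueError("no traffic observed for this CDN")
    return embedded_bps / total


# Hypothetical peak-hour figures in Gbps, for illustration only.
peak_embedded_gbps = 85.0
peak_edge_gbps = 15.0

ratio = offload_ratio(peak_embedded_gbps, peak_edge_gbps)
print(f"offload at peak: {ratio:.0%}")
```

Measuring at the peak hour matters most, since peak traffic is what drives capacity on the network edge.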

The next question that arises is where should an ISP deploy embedded caches? Ideally, a cache would be placed at each location where the customers’ lines connect to the IP network. This should remove most CDN traffic from the network links, right?

Well, that depends on the network topology, but also on which CDN is involved and how that CDN maps end users to a cluster.

Let’s look at the most common methods and how they affect the traffic flows from the embedded caches to end users inside the network.

End-user mapping

Getting end users to the closest cache

Again we have Open Connect from Netflix standing out from most of the other players. They heavily rely on BGP (Border Gateway Protocol, the protocol that networks use to exchange routes) to define which cache an end user is directed to.

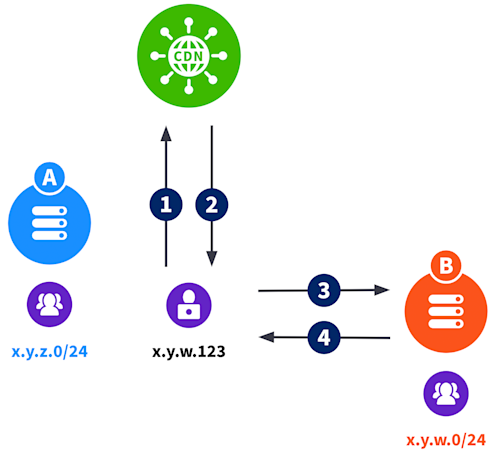

BGP:

- BGP is used to signal to a cache which IP ranges it should serve

- A setup where all caches have identical announcements will work for most deployments since the next tie-breaker is the geolocation information for the end user’s IP address.

- Prefixes are sent from the ISP to the caches.

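  - x.y.z.0/24 is announced to A

  - x.y.w.0/24 is announced to B

Request flow for an end user in the range announced to B:

1. End user to the CDN: Give me movie

2. CDN to end user: Go to B and get movie

3. End user to cache B: Give me movie

4. Cache B to end user: Movie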

Open Connect is unique among the CDNs in that it does not rely on the DNS system to direct the end user to a suitable cache. The request to play a movie is made to the CDN, which replies with the IP address of the cache that will serve the movie.
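The BGP-based steering can be sketched as a prefix lookup. This is a simplified illustration, not Open Connect's real selection logic: it assumes the most specific announced prefix wins and ignores other BGP attributes. The `ANNOUNCEMENTS` table and the documentation-range prefixes are stand-ins for the x.y.z.0/24 and x.y.w.0/24 examples.

```python
import ipaddress

# Hypothetical announcements: which prefixes the ISP has announced to which cache.
ANNOUNCEMENTS = {
    "A": ["198.51.100.0/24"],
    "B": ["203.0.113.0/24"],
}


def caches_for(client_ip, announcements):
    """Return the caches whose most specific announced prefix covers client_ip.

    More than one name means a tie, which would then be broken by
    another signal such as geolocation.
    """
    ip = ipaddress.ip_address(client_ip)
    best_len, best = -1, []
    for cache, prefixes in announcements.items():
        for prefix in prefixes:
            net = ipaddress.ip_network(prefix)
            if ip not in net:
                continue
            if net.prefixlen > best_len:
                best_len, best = net.prefixlen, [cache]
            elif net.prefixlen == best_len and cache not in best:
                best.append(cache)
    return best


print(caches_for("203.0.113.7", ANNOUNCEMENTS))  # client in the range announced to B
print(caches_for("192.0.2.1", ANNOUNCEMENTS))    # client in no announced range
```

If every cache receives identical announcements, every lookup ends in a tie, which is why a second signal is needed in that setup.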

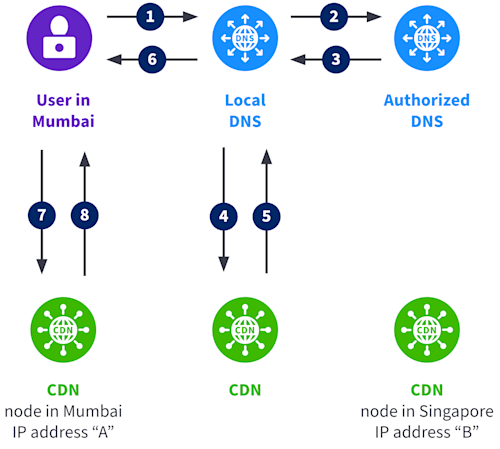

Most other CDNs map end users to a cache by mapping the DNS server the end user is using to request the content to a cache or a cache location.

The typical DNS-based flow for content served by a CDN looks like this:

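1. End user to its DNS resolver: Where is site.com?

2. DNS resolver to the site’s DNS: Where is site.com?

3. Site’s DNS to the resolver: site.com is site.com.cdnsomething.com

4. Resolver to the CDN’s DNS: Where is site.com.cdnsomething.com?

5. CDN’s DNS to the resolver: site.com.cdnsomething.com is A for you

6. Resolver to the end user: site.com is A

7. End user to cache A: Give me site.com

8. Cache A to the end user: site.com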
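The numbered flow above can be simulated in a few lines. This is a toy model, not a DNS implementation: the zone tables, resolver address, and cache addresses are invented (documentation IP ranges), and the only point is that the CDN's answer depends on which resolver is asking, not on the end user's own address.

```python
# Hypothetical zone data. Names follow the flow above; addresses are documentation ranges.
SITE_ZONE = {"site.com": "site.com.cdnsomething.com"}   # CNAME held by the site's DNS
CACHE_FOR_RESOLVER = {"192.0.2.53": "198.51.100.10"}    # resolver IP -> embedded cache "A"
FALLBACK_CACHE = "203.0.113.10"                         # a cache in a larger CDN POP


def cdn_answer(name: str, resolver_ip: str) -> str:
    """The CDN's authoritative DNS: pick a cache based on the resolver that asks."""
    return CACHE_FOR_RESOLVER.get(resolver_ip, FALLBACK_CACHE)


def resolve(name: str, resolver_ip: str) -> list[str]:
    """Return the chain of answers the resolver collects on behalf of the end user."""
    chain = [name]
    cname = SITE_ZONE[name]                        # steps 2-3: the site's DNS returns a CNAME
    chain.append(cname)
    chain.append(cdn_answer(cname, resolver_ip))   # steps 4-5: the CDN's DNS returns an address
    return chain


print(resolve("site.com", "192.0.2.53"))   # a resolver the CDN has mapped to cache A
print(resolve("site.com", "192.0.2.99"))   # an unmapped resolver falls back to the POP
```

Two end users in the same street get different caches if they use different resolvers, which is the property the rest of this section builds on.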

But how is mapping the DNS server to the cache or cache locations done? This is where the individual CDNs add their own magic.

The mapping can take several different parameters into account. For example:

- Latency: Measurements from the CDN’s network, clients, and embedded caches create a latency map determining the closest cache for a given DNS server.

- Connectivity to the ISP: Is the cache embedded, reached by private or public peering or transit?

- Load: No CDN wants to direct a user to a cache that is already busy serving content, so the mapping takes the current load into account.

Note that this means that the mappings in the DNS system have quite a short TTL.
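As a rough illustration of how these parameters might combine, the sketch below scores candidate caches for one DNS server. Every number in it is invented: the connectivity penalties, the load weight, and the load ceiling are assumptions, and real CDNs keep their actual mapping logic to themselves.

```python
from dataclasses import dataclass

# Hypothetical penalties in milliseconds-equivalent; lower is better.
CONNECTIVITY_PENALTY = {
    "embedded": 0,
    "private_peering": 5,
    "public_peering": 10,
    "transit": 25,
}
LOAD_WEIGHT = 20  # assumed cost of a fully loaded cache, in the same units


@dataclass
class Cache:
    name: str
    latency_ms: float   # measured latency toward the DNS server's users
    connectivity: str   # one of the CONNECTIVITY_PENALTY keys
    load: float         # current utilization, 0.0 to 1.0


def pick_cache(caches, max_load=0.9):
    """Pick the lowest-scoring cache that is not already too busy."""
    eligible = [c for c in caches if c.load < max_load]
    if not eligible:
        return None
    return min(
        eligible,
        key=lambda c: c.latency_ms
        + CONNECTIVITY_PENALTY[c.connectivity]
        + LOAD_WEIGHT * c.load,
    )


caches = [
    Cache("embedded-1", latency_ms=3, connectivity="embedded", load=0.95),
    Cache("pop-nearby", latency_ms=12, connectivity="private_peering", load=0.40),
    Cache("pop-remote", latency_ms=10, connectivity="transit", load=0.10),
]
chosen = pick_cache(caches)
print(chosen.name if chosen else "no cache available")
```

Because load changes from minute to minute, the answer to the same question changes too, which is why the DNS records carrying it need a short TTL.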

Some CDNs use anycast to direct the end user to the nearest cache. The CDN then manages traffic by announcing and withdrawing the anycast prefixes from the caches in the ISP.

How does end-user mapping affect the benefits of embedding?

What does the end-user mapping mean for the traffic flows internally in the ISP’s network in the case of more than one cache location? In the case of Open Connect and similar BGP-based mappings, the ISP has optimal control if the IP addresses used by the end users are regionalized. End users will then be served by the caches in that region and only use others in the case of failure or missing capacity. The fail-over caches can also be signaled with BGP, just like you would do with a primary and backup connection to your transit provider.
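Here is a minimal sketch of that regionalized setup, assuming primary and backup are expressed as a simple ordered preference per regional prefix. How a given CDN reads BGP attributes to arrive at that preference is not modeled; the region table, cache names, and prefixes are hypothetical.

```python
import ipaddress

# Hypothetical regional address plan: each regional prefix has a primary and a backup cache.
REGIONS = [
    {"prefix": "198.51.100.0/25", "primary": "cache-north", "backup": "cache-south"},
    {"prefix": "198.51.100.128/25", "primary": "cache-south", "backup": "cache-north"},
]


def select_cache(client_ip, available):
    """Serve from the region's primary cache, falling back to the backup.

    available: set of cache names that are healthy and have spare capacity.
    Returns None when the client is outside the plan or no cache is available,
    meaning the traffic comes from outside the network.
    """
    ip = ipaddress.ip_address(client_ip)
    for region in REGIONS:
        if ip in ipaddress.ip_network(region["prefix"]):
            for candidate in (region["primary"], region["backup"]):
                if candidate in available:
                    return candidate
            return None
    return None


print(select_cache("198.51.100.10", {"cache-north", "cache-south"}))  # normal operation
print(select_cache("198.51.100.10", {"cache-south"}))                 # primary is down
```

Traffic only crosses between regions in the second case, which is the control the regionalized address plan buys you.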

If the address plan is not regionalized, all customer prefixes should be announced to all caches. The geolocation of the end user’s IP address, and with it the physical distance to each cache, will then determine where the end users are sent. This works well for geographically large networks but less so if you run an extensive network in a small area. In that case, it is challenging to prevent traffic from crisscrossing all over the network, and a better solution is to build a larger cache location in the center of the network.
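The distance-based tie-breaker can be illustrated with a great-circle calculation. This is a sketch under assumptions: the cache sites and coordinates are invented, and real geolocation of an IP address is far less precise than a coordinate pair suggests.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical cache locations as (latitude, longitude) in degrees.
CACHE_SITES = {
    "copenhagen": (55.68, 12.57),
    "aarhus": (56.16, 10.20),
}


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_cache(user_location, sites=CACHE_SITES):
    """Return the name of the cache site closest to the geolocated end user."""
    return min(sites, key=lambda name: haversine_km(user_location, sites[name]))


# An end user whose IP address geolocates between the two sites.
print(nearest_cache((55.40, 10.39)))
```

When the sites are only a short distance apart, small geolocation errors are enough to flip the result, which is one reason this approach struggles in a dense network covering a small area.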

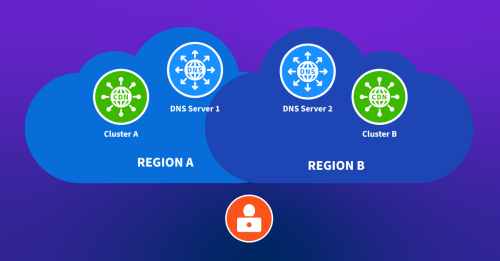

In the case of CDNs using DNS server mapping, the ISPs must run dedicated DNS servers for each region where they want to build a cache location.

Conclusion

Slow page loads and jittery audio and video repel viewers. Without CDN technology efficiently delivering internet content, we would be unlikely to be able to read, listen, and watch the wide variety of content that’s available online today – not without paying more for it or suffering from poor quality experiences. Embedding caches from the CDNs into your network can help you optimize the delivery and remove a lot of the strain of the large amounts of traffic. Kentik can help you analyze and understand the impact of CDN traffic on your network and whether embedding caches is for you. Please watch this Networking Field Day presentation by Steve Meuse, a Kentik solutions architect, for an overview of how Kentik can help you understand how OTT traffic and CDNs impact your network.