Summary

While AI offers powerful benefits for network operations, building an in-house AI solution presents major challenges, particularly around complex data engineering, staffing specialized roles, and maintaining models over time. The effort required to handle real-time telemetry, retrain models, and manage evolving environments is often too great for most IT teams. For enterprise networks, partnering with a vendor that specializes in AI and network operations is typically a more efficient, scalable, and sustainable approach.

Organizations in every industry are looking for ways to use AI to improve efficiency, gain deeper insights, and streamline their operations. Network operations are no different. There’s immense potential in harnessing AI to predict, detect, and mitigate network issues before they escalate. However, as soon as we consider how to implement AI, we have to ask ourselves the age-old question: Should we build our solution in-house, or should we turn to a vendor partner?

For years, the build vs. buy debate was somewhat balanced for traditional software development, but the unique and complex nature of AI – especially in the context of NetOps – makes building an in-house solution an incredibly resource-intensive and challenging endeavor. For most enterprise network teams, buying or partnering is usually the better option.

The data problem

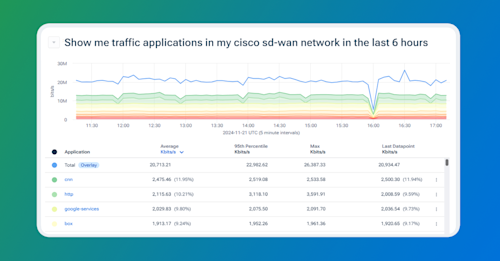

A major reason building an AI solution in-house is so challenging is the data such an initiative depends on. Our networks generate a vast amount of telemetry data that we need to ingest, store, clean, transform, secure, and analyze. This includes traditional sources like flow logs and SNMP, as well as streaming telemetry from our beloved network appliances like routers, switches, and firewalls. It also includes unstructured data such as device configs, ticketing system records, and network diagrams, among others.

This requires serious data engineering, carefully chosen data platforms, and forward-thinking telemetry pipeline architecture.

With this collection of structured, unstructured, and semi-structured data, we end up with both a massive volume and a wide variety of data types to contend with. Add to this the real-time telemetry our networks generate, and we need a sophisticated system that can handle continuous ingestion at high velocity, which only makes the data pipeline that much more complex.

Data engineering for network telemetry is not just about storing huge volumes of logs, though. It also involves understanding how the data changes over time, integrating multiple data sources in a reliable, real-time manner, and designing systems that can keep pace with the constant flow of updates.

For example, telemetry data must be normalized so that it is understandable and comparable across different hardware vendors and software versions. Meanwhile, every data point must be secured against unauthorized access to safeguard both the organization’s confidential information and customer data. Finding data engineers who can design, implement, and maintain these complex pipelines, while also understanding networking, is a hurdle in itself.
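To make the normalization step more concrete, here is a minimal Python sketch, assuming two hypothetical vendors that export the same interface measurement under different field names and units. The vendor names, field names (`ifName`, `ts_ms`, `rx_kbps`, and so on), and the `normalize` helper are illustrative only, not any real vendor's export format. Each vendor gets a small mapping, and every record is converted into one common shape with consistent units.

```python
from dataclasses import dataclass

# Common, vendor-neutral representation of one interface sample.
@dataclass
class InterfaceSample:
    device: str
    interface: str
    timestamp_s: float      # seconds since the Unix epoch
    in_bits_per_s: float    # inbound rate in bits per second

# Hypothetical vendor export formats: field names and units are illustrative only.
VENDOR_SCHEMAS = {
    # vendor_a: timestamps in milliseconds, rate in bytes per second
    "vendor_a": {"iface": "ifName", "ts": "ts_ms", "ts_divisor": 1000,
                 "rate": "in_bytes_per_s", "rate_multiplier": 8},
    # vendor_b: timestamps in seconds, rate in kilobits per second
    "vendor_b": {"iface": "interface", "ts": "timestamp", "ts_divisor": 1,
                 "rate": "rx_kbps", "rate_multiplier": 1000},
}

def normalize(vendor: str, device: str, raw: dict) -> InterfaceSample:
    """Rename fields and convert units so samples from any vendor are comparable."""
    schema = VENDOR_SCHEMAS[vendor]
    return InterfaceSample(
        device=device,
        interface=raw[schema["iface"]],
        timestamp_s=raw[schema["ts"]] / schema["ts_divisor"],
        in_bits_per_s=float(raw[schema["rate"]] * schema["rate_multiplier"]),
    )

a = normalize("vendor_a", "core-rtr-1",
              {"ifName": "Gi0/1", "ts_ms": 1_700_000_000_000, "in_bytes_per_s": 125_000})
b = normalize("vendor_b", "edge-sw-7",
              {"interface": "eth1", "timestamp": 1_700_000_000, "rx_kbps": 1_000})
print(a.in_bits_per_s, b.in_bits_per_s)  # 1000000.0 1000000.0
```

Once both records share a schema, a model can compare the two links directly. In a real pipeline, the mapping table grows with every vendor, platform, and software version, and keeping it current is exactly the kind of ongoing data engineering work described above.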

Building a data pipeline is only the beginning. AI models also require continuous retraining, updating, and validation for accuracy, which necessitates a carefully engineered pipeline for data refreshes. For NetOps, it’s important to remember that a lot of the telemetry data we deal with is dynamic. As networks operate, new data flows in, latency measurements fluctuate, interface counters increment, network topologies change, and environments evolve. The AI models we use must be reevaluated regularly, especially if their outputs inform mission-critical network decisions.

If your solution is built entirely in-house, you bear sole responsibility for ensuring that the data pipeline adapts, the databases are updated, and the models are recalibrated whenever the environment changes. This is a permanent effort, not a one-time project.

The staffing problem

Staffing and skill sets also pose major challenges when attempting to build an AI solution. Traditional network engineers typically excel at configuring hardware and ensuring uptime, but they may lack the background in data science, machine learning, and big-data pipelines necessary to develop sophisticated AI solutions.

Hiring data scientists who understand the complexities of network operations and also possess the necessary mathematical, statistical, and programming skills can be incredibly difficult. In many IT organizations, there is a real shortage of people who are both comfortable with advanced AI/ML techniques and knowledgeable enough about networking to make sense of the data. On top of that, each data scientist typically needs the support of at least one, if not several, data engineers. Those data engineers will require specialized tools, software, and dedicated environments to work effectively, which adds yet more infrastructure cost for your network team.

The model maintenance problem

Maintaining AI models after initial deployment is yet another hurdle. A newly created model might start off with excellent accuracy for network anomaly detection or capacity planning. However, as network usage patterns evolve (perhaps the organization’s user base grows, or new applications place different demands on network resources), the model’s performance can degrade.

You’ll need ongoing processes and resources to monitor the model’s accuracy, detect model drift, and revalidate or retrain the model. You’ll also need to handle version control, track which model is deployed where, and measure performance metrics in real time. This aspect of AI lifecycle management is difficult enough in data-centric industries, so you can imagine how challenging it becomes in the unique, fast-paced world of network operations.
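Even the simplest form of drift monitoring is code that someone has to write, run, and own. The sketch below shows one common, deliberately basic approach: compare a model’s recent prediction error against the error measured at validation time, and flag possible drift when the live error exceeds that baseline by a chosen ratio. The `DriftMonitor` class, the latency model name, the window size, and the 1.5× tolerance are all hypothetical choices for illustration; production systems typically use richer statistical tests and feed results into retraining and model-versioning workflows.

```python
from collections import deque

class DriftMonitor:
    """Flags possible model drift when recent prediction error exceeds a baseline."""

    def __init__(self, model_version: str, baseline_mae: float,
                 window: int = 100, tolerance: float = 1.5):
        self.model_version = model_version   # which deployed model this monitor tracks
        self.baseline_mae = baseline_mae     # mean absolute error measured at validation time
        self.tolerance = tolerance           # allowed ratio of live error to baseline error
        self.errors = deque(maxlen=window)   # keeps only the most recent absolute errors

    def observe(self, predicted: float, actual: float) -> None:
        self.errors.append(abs(predicted - actual))

    def rolling_mae(self) -> float:
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def drifted(self) -> bool:
        # Wait for a full window so a few noisy samples do not trigger retraining.
        if len(self.errors) < self.errors.maxlen:
            return False
        return self.rolling_mae() > self.baseline_mae * self.tolerance

# Hypothetical latency-prediction model; values are in milliseconds.
monitor = DriftMonitor("latency-model-v3", baseline_mae=2.0, window=5)
for _ in range(5):
    monitor.observe(predicted=10.0, actual=11.0)   # error of 1 ms: within baseline
print(monitor.drifted())   # False
for _ in range(5):
    monitor.observe(predicted=10.0, actual=15.0)   # error of 5 ms: usage pattern has shifted
print(monitor.drifted())   # True
```

Tying each monitor to a `model_version` is one lightweight way to keep track of which model is deployed where. Multiply this by every model, site, and metric in an enterprise network, and the lifecycle burden becomes clear.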

Build versus buy

For many network teams, it quickly becomes apparent that they lack the necessary bandwidth, resources, or in-house expertise to manage an entire AI lifecycle effectively. While the classical build vs. buy debate used to hinge on factors such as software licensing costs and customization speed, AI for network operations introduces a host of additional complexities.

Data engineering at scale, the continuous need for model retraining, the specialized skill sets, and the ever-changing nature of real-time telemetry data make it significantly more challenging to achieve a return on an in-house build.

Working with a dedicated partner that specializes in AI and ML can solve many of these problems or, more accurately, shift the responsibility for them to a trusted partner. An expert partner can take responsibility for creating and managing the data pipelines, ensuring that data is ingested, normalized, and secured appropriately. They also bring stable platforms and domain expertise, which reduces the trial and error many network engineers go through when experimenting with an in-house build. That usually means the AI implementation happens much sooner, without an endless series of proofs of concept (PoCs).

Because these providers focus on AI and already have the expertise, staff, and infrastructure an AI workflow requires, they are much better equipped to manage the entire model lifecycle, from initial development to continual retraining. Just as important in network operations, they can handle data that evolves over time. Rather than spending extensive resources spinning up or expanding your internal data science team, you can leverage the partner’s existing talent and infrastructure.

Admittedly, building your own AI system has a certain appeal, especially if you want tight control or a high degree of customization. Nevertheless, the complexity and scope of modern AI solutions for network operations usually make in-house development too big a lift for a typical networking team.

So, although building basic software solutions in-house may once have seemed a viable path for many network teams, today’s environment of complex data engineering, machine learning, and continuous model iteration has shifted the goalposts. Enterprise network teams will usually find the build approach too expensive, too time-consuming, and too risky. Partnering with a provider dedicated to AI in network operations not only streamlines implementation and maintenance but also helps keep your AI workflows accurate, secure, and relevant as your network evolves.