AI Networking 101: How AI Runs Networks and Networks Run AI

AI is changing both how we build networks and how we run them. On one side, machine learning turns mountains of telemetry into instant answers—flagging anomalies, predicting incidents, and automating fixes. On the other, AI workloads themselves demand a new class of data center fabric: ultra-high-bandwidth, low-latency, loss-averse interconnects that keep thousands of GPUs in lockstep. “AI networking” is where these two realities meet.

This article gives an overview of both dimensions: AI for networking (automation, assurance, and security driven by AI/ML) and networking for AI (the architectures and technologies that power large-scale training and real-time inference). You’ll learn how modern observability and closed-loop automation reduce toil and MTTR, why job completion time (JCT) in AI training is often gated by the network, and what it takes to design non-blocking, any-to-any fabrics that avoid elephant-flow collisions and jitter.

About Kentik: Kentik helps teams succeed with AI networking in both directions: AI for networking and networking for AI. For operations, Kentik turns network telemetry (flows, routing, device and cloud metrics, logs, and synthetics) into answers and actions, including natural-language troubleshooting with Kentik AI Advisor for multi-step, auditable investigations that speed MTTR. For AI infrastructure, Kentik provides visibility into high-performance fabrics by surfacing issues like elephant flows, microbursts, loss hotspots, jitter, and path asymmetry that can slow distributed training and inference.

What is AI Networking?

AI networking refers to the convergence of artificial intelligence (AI) technologies with networking. It encompasses two complementary concepts: using AI to optimize and automate network operations (often termed “AI for networking”), and designing high-performance networks to support AI workloads (termed “networking for AI”).

In practice, AI networking means smarter, self-optimizing networks on one hand, and ultra-fast, scalable data center fabrics on the other. This dual perspective is shaping modern network infrastructure—from autonomous network management systems to specialized cluster interconnects that link thousands of AI processors in parallel.

AI for Networking: AI-Driven Network Operations

AI for networking involves applying AI and machine learning to monitor, manage, and secure networks automatically. Instead of static scripts or manual tweaks, AI-driven networks can analyze vast telemetry data, learn normal patterns, and respond to issues in real time. Key capabilities include:

- Automated Network Management: AI systems ingest diverse network telemetry (device logs, flow records, routing updates, etc.) to detect anomalies and performance issues faster than human network operators. For example, machine learning models can spot unusual traffic spikes or latency jumps and pinpoint root causes across complex topologies.

  This proactive analysis helps identify outages, misconfigurations, or security threats before they impact users. By converting raw data into insights, AI effectively becomes an expert “network analyst” on the team.

- Self-Optimization: AI-enabled networks continuously learn and adjust. They can predict congestion or failures and automatically reconfigure routing and traffic flows to optimize performance.

  For example, if an AI model foresees a link reaching capacity, the system might reroute some traffic or balance loads elsewhere, without waiting for human intervention. Such self-optimizing behavior keeps networks running smoothly even as conditions change.

- Closed-Loop Automation: AI for networking enables closed-loop workflows where detection and remediation are tightly integrated. When an anomaly is detected, the system doesn’t just alert a human; it can also trigger automated actions (with safety checks). This could mean automatically resetting a flapping interface, blackholing DDoS traffic, or adjusting QoS policies in response to detected congestion.

  Over time, the AI learns which actions fix which issues, continually improving its recommendations. Networks thus become self-healing and require fewer manual fixes. A blog post by network orchestration vendor Itential described this as transforming AI/ML insights directly into orchestrated network actions, so that networks adapt proactively to real-time conditions. See also Kentik’s work on real-time alerting and response in AI data centers.

- Enhanced Security: AI and ML bolster network security by analyzing traffic for threats in ways traditional network monitoring tools can’t. An AI-driven security system can sift through millions of log entries and flow records to find the needle-in-a-haystack signs of malware or intrusions, often faster and with fewer false positives than static rules. It learns baseline behaviors and flags anomalies (e.g., a sudden data exfiltration or a DDoS attack pattern) as they appear. AI can also automatically enforce security policies, for example by blocking suspicious IPs or quarantining compromised devices in response to an alert.

  This rapid, adaptive defense is crucial as networks face increasingly sophisticated cyberattacks. By reducing alert fatigue and accelerating incident response, AI-driven security keeps networks safer.
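To make "learns baseline behaviors and flags anomalies" concrete, here is a minimal sketch of one simple approach: an exponentially weighted moving average (EWMA) baseline with a z-score test. It is an illustration only, not how any particular product works. The class name, the smoothing factor, the threshold, the warm-up length, and the latency samples are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class EwmaBaseline:
    """Learns a running mean/variance and flags sharp deviations from it."""
    alpha: float = 0.1      # smoothing factor (assumed value)
    threshold: float = 4.0  # z-score treated as anomalous (assumed value)
    warmup: int = 10        # samples to observe before flagging anything
    mean: float = 0.0
    var: float = 0.0
    count: int = 0

    def observe(self, value: float) -> bool:
        """Return True if `value` deviates sharply from the learned baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False
        deviation = value - self.mean
        std = self.var ** 0.5
        is_anomaly = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) / std > self.threshold
        )
        if not is_anomaly:
            # Fold only normal samples into the baseline, so an incident
            # does not immediately become the "new normal".
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_anomaly


# Made-up latency samples (ms) with one obvious spike.
latency_ms = [10.2, 9.8, 10.1, 10.4, 9.9, 10.0, 10.3, 9.7, 10.1, 10.2,
              10.0, 9.9, 10.2, 48.0, 10.1]
detector = EwmaBaseline()
flags = [detector.observe(x) for x in latency_ms]
anomalies = [x for x, flagged in zip(latency_ms, flags) if flagged]
print(anomalies)
```

Production systems typically go further (seasonality, multiple correlated metrics, learned models), but the core idea is the same: compare each new observation against what the network normally looks like.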

These capabilities make AI-driven networks far more efficient and reliable. An AI-powered network management platform effectively acts as a virtual engineer that never sleeps. It correlates data, predicts problems, and takes action in seconds, enabling a shift from reactive troubleshooting to proactive assurance. In industry terms, this aligns with AIOps. Vendors, including Kentik, broadly frame this as building toward autonomous, self-optimizing networks.


Network Intelligence: The Advent of AI-assisted Network Monitoring and Observability

Network intelligence is a closely related concept often used in the context of “AI networking.” It refers to an AI-assisted analytical layer on top of network observability data.

Instead of just showing raw metrics on dashboards, network intelligence solutions use AI/ML to fuse data from flows, routes, logs, cloud metrics, and streaming telemetry, and turn it into answers and actions. They correlate symptoms to probable causes, predict risks (like an SLA breach or an impending device failure), and even recommend or trigger fixes.

In essence, network intelligence is what you get when you apply AI to networking data. The network becomes not only visible, but understandable and actionable. Kentik defines network intelligence as “an AI-assisted layer on top of network observability that turns raw telemetry (flows, routing, device and cloud metrics, logs, and synthetics) plus business context into answers and actions. It correlates and explains issues, predicts risk (like SLA breaches), and can trigger remediation across on-prem and multicloud networks.”

Natural Language Queries in AI Networking

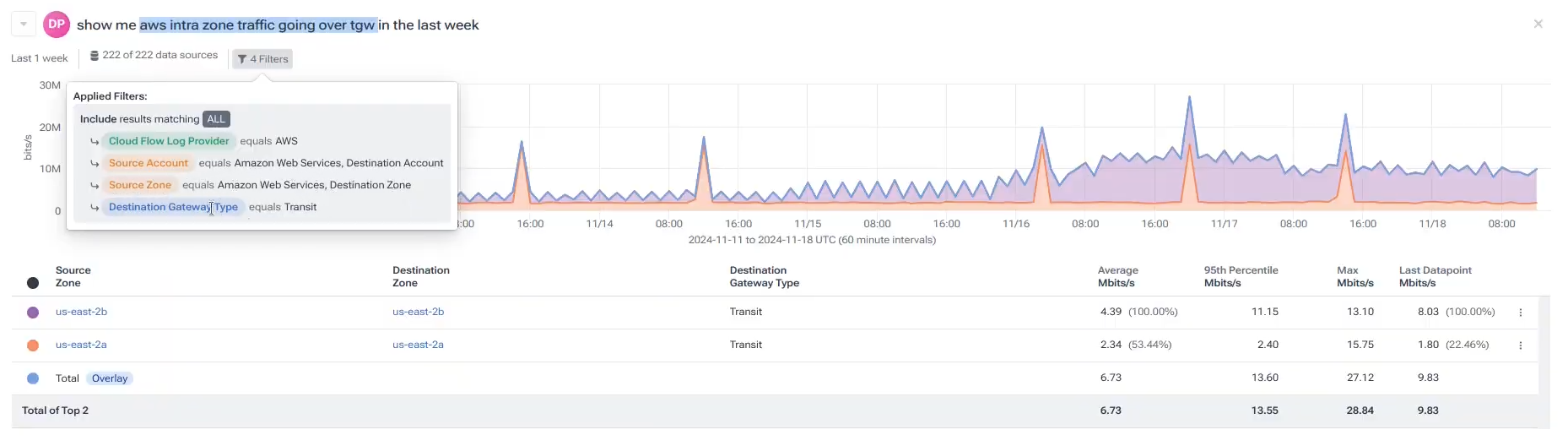

One powerful illustration of AI for networking is the rise of natural language interfaces for NetOps. Advances in LLMs mean engineers can ask questions about the network in natural language and get answers drawn from complex telemetry. Tools like Kentik AI let teams query performance and incidents across on-prem and cloud, returning answers or visualizations. Kentik AI Advisor and Cause Analysis help reduce mean time to resolution (MTTR) for network troubleshooting tasks while greatly simplifying access to network telemetry.

This brief video explains Kentik AI Advisor, a NetOps-focused AI that has a comprehensive understanding of enterprise networks, thinks critically, and advises how to design, operate, and protect network infrastructure at scale:

This video demonstration shows how Kentik AI enables NetOps teams to use natural language queries to identify costly traffic patterns in complex cloud environments:

For more examples and deeper explorations of LLM-assisted network troubleshooting, network monitoring, and network management, see our blog posts:

- Introducing Kentik AI Advisor: The Future of Network Intelligence

- Faster Network Troubleshooting with Kentik AI

- Using Kentik Journeys AI for Network Troubleshooting

- Troubleshooting Cloud Traffic Inefficiencies with Kentik AI

Networking for AI: High-Performance Infrastructure for AI Workloads

Networking for AI focuses on the network infrastructure needed to support AI applications, especially in data centers running large-scale AI training or inference tasks. Modern AI workloads (such as training deep learning models like GPT-5) are massively distributed. They run in parallel on hundreds or thousands of GPUs or specialized AI accelerators. This distributed computing paradigm creates unique and extreme demands on the network connecting those compute nodes. Some of the requirements of this relatively new network architecture are described below.

High Throughput and Low Latency

AI clusters must move huge volumes of data between nodes with minimal delay. During training of a neural network, for example, GPUs frequently exchange model parameters, gradients, and dataset shards. These exchanges happen every few milliseconds and involve gigabytes per second of data. Any network slowness negatively impacts AI performance. Traditional Ethernet networks with relatively high latency or oversubscription can become a bottleneck. Instead, AI fabrics use ultra-high bandwidth links (often 200 Gbps, 400 Gbps, or faster NICs) and low-latency protocols to keep the GPUs fed with data.

Technologies like InfiniBand (an industry-standard interconnect for which NVIDIA is the dominant supplier) or high-speed Ethernet with RDMA (Remote Direct Memory Access) are common ways to achieve the needed throughput and microsecond-level latencies. In fact, it’s estimated that in large-scale AI training deployments, over 50% of the job completion time (JCT) can be spent waiting on network communication, as opposed to pure computation.

This means that, in many cases, the network largely determines how fast AI jobs finish. A slow link or congested switch can stall an entire training run. Networking for AI is all about removing these data transfer bottlenecks.
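A small worked example helps show why the network's share of JCT matters. The sketch below uses a deliberately simplified model in which each training step costs compute time plus whatever communication time is not hidden behind compute. The function names, the `overlap` parameter, and all of the numbers are assumptions for illustration, not measurements.

```python
def exposed_comm_s(comm_s: float, overlap: float = 0.0) -> float:
    """Communication time per step that is NOT hidden behind computation."""
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must be between 0 and 1")
    return comm_s * (1.0 - overlap)


def job_completion_time_s(steps: int, compute_s: float, comm_s: float,
                          overlap: float = 0.0) -> float:
    """JCT under the simplified model: steps * (compute + exposed comm)."""
    return steps * (compute_s + exposed_comm_s(comm_s, overlap))


def network_share(compute_s: float, comm_s: float, overlap: float = 0.0) -> float:
    """Fraction of each step spent waiting on the network."""
    exposed = exposed_comm_s(comm_s, overlap)
    return exposed / (compute_s + exposed)


# Hypothetical job: 10,000 steps, 0.40 s compute and 0.50 s communication each.
baseline = job_completion_time_s(10_000, compute_s=0.40, comm_s=0.50)
# Same job after network fixes halve the communication time.
improved = job_completion_time_s(10_000, compute_s=0.40, comm_s=0.25)

print(f"network share of each step: {network_share(0.40, 0.50):.0%}")
print(f"JCT: {baseline:.0f} s -> {improved:.0f} s")
```

With these made-up numbers the network accounts for about 56% of each step, and halving communication time cuts JCT from about 9,000 s to about 6,500 s without touching the GPUs.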

Synchronized, Any-to-Any Communication

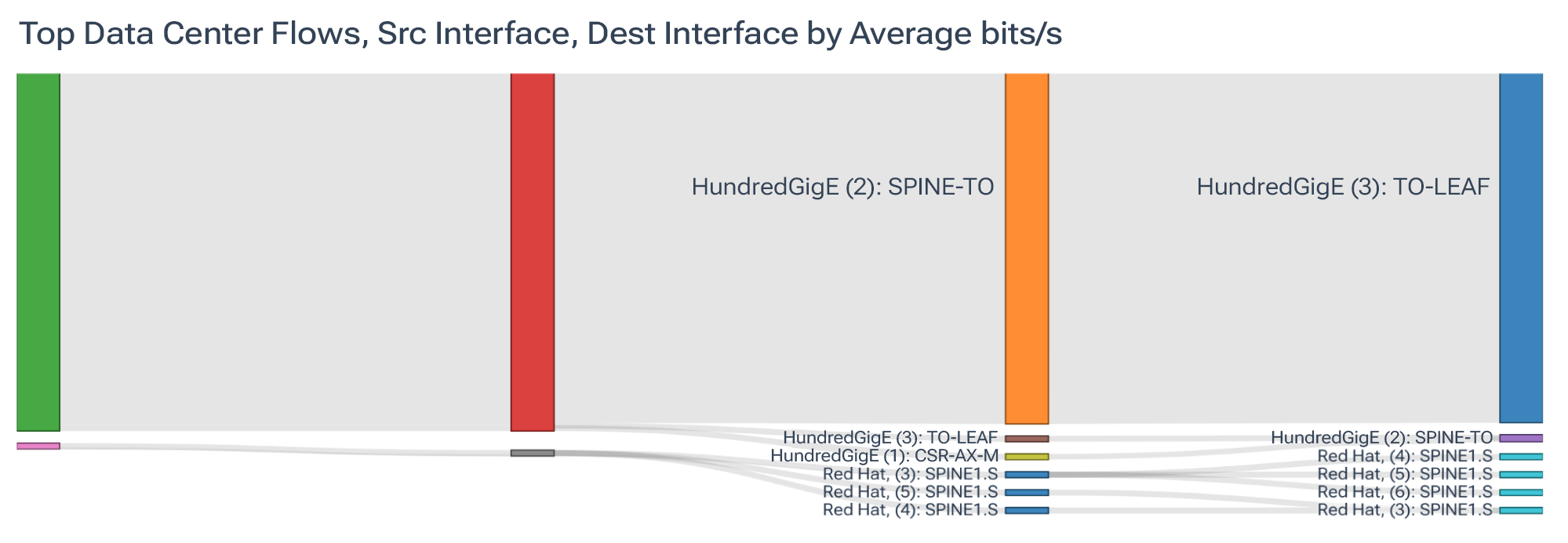

Unlike typical enterprise network traffic (which might be many independent, asynchronous flows like web requests or database queries), AI workloads involve highly synchronized, all-to-all data exchanges. For example, consider a training job spread across 1,000 GPUs. At certain intervals (say after computing gradients), every GPU may need to share its results with every other GPU (or a large subset) to average updates. This is often called an “all-reduce” operation in distributed training. The pattern is a dense mesh of communications, often orchestrated in “phases” where a group of GPUs sends to another group, etc.
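The all-reduce pattern can be sketched in a few lines. The simulation below uses the ring algorithm, which is one of several common ways to implement all-reduce (collective libraries also use tree-based and other variants). It is a toy model that runs in a single process, with one number standing in for each chunk of gradients; the function name and data are assumptions for illustration.

```python
def ring_all_reduce(vectors):
    """Sum equal-length vectors across workers arranged in a ring.

    vectors[i] is worker i's local data, already split into n chunks
    (one number per chunk here, to keep the sketch small).
    Returns every worker's data after the all-reduce completes.
    """
    n = len(vectors)
    data = [list(v) for v in vectors]
    if any(len(v) != n for v in data):
        raise ValueError("this sketch expects exactly one chunk per worker")

    # Phase 1, reduce-scatter: in each of n-1 steps every worker sends one
    # chunk to its right-hand neighbor, which adds it to its own copy.
    for step in range(n - 1):
        sends = [(w, (w - step) % n, data[w][(w - step) % n]) for w in range(n)]
        for w, chunk, value in sends:
            data[(w + 1) % n][chunk] += value

    # Now worker w holds the complete sum for chunk (w + 1) % n.
    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        sends = [(w, (w + 1 - step) % n, data[w][(w + 1 - step) % n])
                 for w in range(n)]
        for w, chunk, value in sends:
            data[(w + 1) % n][chunk] = value
    return data


workers = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
result = ring_all_reduce(workers)
print(result)
```

Every step in both phases involves all workers sending at once, and no worker can advance until its neighbor's chunk arrives, which is why a single slow link delays the whole collective.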

The network must support this concurrent, high-volume mesh traffic without collisions. In networking terms, these are “elephant flows” – extremely large, long-lived flows – that occur simultaneously between many endpoints.

All GPUs in a pod might send data to all GPUs in another pod after each computation step. If one transfer is slow, it forces others to wait (since each node typically must receive all peers’ data before proceeding). This synchronized nature means the slowest link in the cluster can determine overall job speed. As a result, networking for AI demands a fabric where any node can talk to any other with consistently high performance (sometimes called any-to-any connectivity).

Topologies like non-blocking fat-trees or full‑bisection Clos networks are used to ensure no oversubscribed choke points. In practice, cluster network architects strive for a 1:1 subscription ratio (no oversubscription) so that the network can carry full traffic load from all GPUs without queuing. Non-blocking switch architectures and large cross-sectional bandwidth are a must.
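The subscription ratio is simple arithmetic: the bandwidth a switch offers to its attached hosts divided by the bandwidth it has toward the rest of the fabric. The sketch below computes it for two hypothetical leaf switches; the port counts and speeds are assumptions chosen to illustrate a 1:1 design and a legacy 3:1 design.

```python
def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Host-facing bandwidth divided by fabric-facing bandwidth.

    1.0 means non-blocking (1:1); 3.0 means 3:1 oversubscribed.
    """
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


# Hypothetical AI leaf: 32 x 400G to GPU NICs, 32 x 400G to spines.
ai_leaf = oversubscription_ratio(32, 400, 32, 400)

# Hypothetical legacy leaf: 48 x 25G to servers, 4 x 100G to spines.
legacy_leaf = oversubscription_ratio(48, 25, 4, 100)

print(f"AI leaf: {ai_leaf:.0f}:1, legacy leaf: {legacy_leaf:.0f}:1")
```

A ratio above 1.0 means that if every attached host transmits at line rate at once, as GPUs do during a collective, some traffic must queue or drop.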

Ultra-Low Jitter and Loss

AI communications are often sensitive not just to average bandwidth, but to jitter (variability in latency) and packet loss. Even tiny amounts of packet loss can dramatically degrade AI application performance because the workload is synchronous: lost packets introduce retransmission delays that stall every participant waiting on that data. As a result, networking for AI pushes toward lossless or near-lossless transport, often using flow control (e.g., credit-based schemes in InfiniBand, or Priority Flow Control (PFC) on Ethernet) to avoid drops.

Similarly, consistent latency is valued over dynamic routing changes. Out-of-order arrivals or fluctuating delays can disrupt the tightly coordinated computations. This is one reason that specialized interconnects like InfiniBand have been popular in supercomputing and AI. They provide hardware-level reliability and consistent latency.

However, Ethernet is also evolving to meet AI needs (with standards like RoCE – RDMA over Converged Ethernet – and efforts to reduce jitter). The Ultra Ethernet Consortium, an industry group that includes major network vendors and hyperscalers, is even working on new Ethernet-based standards tailored for AI/ML workloads’ performance requirements.

Network Architecture for AI

Given these demands, traditional data center networks aren’t sufficient for AI at scale. Legacy architectures might have 3:1 or 5:1 oversubscription and tolerate some congestion or latency, which is fine for web applications but disastrous for AI training. As a result, we’re seeing a new class of AI-specific network designs emerge:

AI Cluster Fabrics

Sometimes called AI interconnects, these are high-performance network fabrics purpose-built for AI clusters. They aim to provide non-blocking, low-latency, high-bandwidth connectivity at massive scale. Key requirements often cited include those we’ve already mentioned: no oversubscription (1:1), minimal switch hop latency, and effective congestion elimination.

To achieve this, engineers employ techniques like Clos fabrics (multi-stage networks with enough bandwidth in each stage) and even experimental approaches like optical interconnects or express topologies, where AI nodes are connected in flattened networks to reduce latency. One technique is scale-out Ethernet with multipathing: using many parallel paths and spreading traffic across them.

Standard ECMP (equal-cost multi-path) load-balancing isn’t optimal for elephant flows because it tends to pin each flow to a single path, which could overload that path. Newer approaches like packet spraying distribute packets of a single flow across multiple paths simultaneously, to better utilize all links and avoid any one flow hogging a single path.
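The difference between per-flow ECMP and packet spraying can be seen in a toy simulation. The sketch below hashes each flow's 5-tuple to one path (ECMP-style) and compares the resulting link loads with round-robin spraying of individual packets. The hash function, addresses, and packet counts are assumptions for illustration; real switches use their own hash functions and spraying policies.

```python
import zlib


def ecmp_path(flow: tuple, num_paths: int) -> int:
    """Pick one path per flow by hashing its 5-tuple; every packet follows it."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % num_paths


def ecmp_load(flows: dict, num_paths: int) -> list:
    """Packets carried by each path when whole flows are pinned to paths."""
    load = [0] * num_paths
    for flow, packets in flows.items():
        load[ecmp_path(flow, num_paths)] += packets
    return load


def spray_load(flows: dict, num_paths: int) -> list:
    """Packets carried by each path when packets are sprayed round-robin."""
    load = [0] * num_paths
    next_path = 0
    for packets in flows.values():
        for _ in range(packets):
            load[next_path] += 1
            next_path = (next_path + 1) % num_paths
    return load


# Four made-up elephant flows: (src, dst, protocol, src port, dst port) -> packets.
# Protocol 17 / port 4791 is UDP as used by RoCEv2; the addresses are invented.
flows = {
    ("10.0.0.1", "10.0.1.1", 17, 49152, 4791): 1000,
    ("10.0.0.2", "10.0.1.2", 17, 49153, 4791): 1000,
    ("10.0.0.3", "10.0.1.3", 17, 49154, 4791): 1000,
    ("10.0.0.4", "10.0.1.4", 17, 49155, 4791): 1000,
}
ecmp = ecmp_load(flows, num_paths=4)
sprayed = spray_load(flows, num_paths=4)
print("ECMP per-path load: ", ecmp)
print("Spray per-path load:", sprayed)
```

With only a handful of large flows, a hash has little entropy to work with, so two elephants landing on the same path is common. Spraying evens out the load by construction, at the cost of delivering packets out of order, which the receiving NIC or transport must be able to tolerate.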

There are also scheduled fabrics under research, where a centralized controller coordinates when large flows send traffic to prevent any two elephant flows from colliding on the same link – essentially orchestrating network traffic like a train timetable to guarantee no congestion. These ideas are quite cutting-edge, even in 2025, and demonstrate how networking for AI sometimes resembles managing an HPC (high-performance computing) interconnect more than a traditional Ethernet LAN.

Advanced Networking Technologies in AI Data Centers

Many advanced technologies are being adopted to meet AI needs, including:

- Remote Direct Memory Access (RDMA) is one important example. RDMA allows data to move directly between the memory of two computers without involving their CPUs in the data path. By bypassing the operating system kernel and host CPU, RDMA drastically reduces latency and CPU overhead for network transfers.

  In AI clusters, RDMA (over InfiniBand or even over Ethernet) enables fast, efficient shuffling of data between GPUs, which is critical during all-reduce operations, parameter server updates, and other training operations.

- Adaptive routing is another key feature: switches that can dynamically reroute traffic on the fly based on congestion. Traditional networks use static routing or load-balancing, which can’t react if one path becomes hot. Adaptive routing senses congestion and diverts flows to alternate paths in real time, helping prevent queues from building. Many InfiniBand implementations and some advanced Ethernet switches support this type of routing. Adaptive routing helps ensure that no single congested link slows the job and that traffic is spread to wherever there is headroom.
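The core decision behind adaptive routing can be sketched as "move traffic to the least congested path, but only when the gain is worth it." The function below is a minimal illustration of that idea, not a description of any vendor's implementation. The queue-depth inputs, the `min_gain` hysteresis parameter, and the function name are all assumptions.

```python
def pick_path(queue_depths: list, current: int, min_gain: int = 100) -> int:
    """Choose an output path based on observed per-path queue depth.

    Stays on `current` unless another path's queue is shorter by more than
    `min_gain` (an assumed hysteresis that avoids flapping between paths
    with nearly equal congestion).
    """
    best = min(range(len(queue_depths)), key=queue_depths.__getitem__)
    if queue_depths[current] - queue_depths[best] > min_gain:
        return best
    return current


# Path 1 is congested (queue depths in packets): traffic moves to path 0.
print(pick_path([10, 500, 20], current=1))
# Paths are nearly equal: traffic stays where it is.
print(pick_path([10, 50, 20], current=1))
```

A static hash, by contrast, would keep sending traffic into the congested queue because it never looks at load.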

Other innovations include shallow-buffer, high-radix switches (to minimize queuing delay), and new transport protocols optimized for AI. Even physical infrastructure is a consideration: AI clusters might require expensive active optical cables or novel cabling layouts to handle the bandwidth, and must deal with significant challenges in power and cooling due to the intense data throughput.

Learn more about the latest in AI data center architecture in this episode of the Telemetry Now podcast. Host Phil Gervasi talks to Arista’s Vijay Vusirikala about why job completion time, optical interconnects, power efficiency and observability are mission-critical in AI data center networking:

Networking for AI is about building fast, fat, and smart pipes between AI compute nodes. A well-designed AI network will allow a distributed training job to run almost as if all the GPUs were in one machine. When done right, adding more GPUs to a cluster yields nearly linear speed-ups in training because the network keeps up with the scaling. If done poorly, diminishing returns appear quickly, as more GPUs just spend time waiting on data.

This is why cloud providers and enterprises investing in AI are also investing heavily in their network fabric. We see, for instance, specialized AI supercomputers (like NVIDIA’s DGX SuperPOD or Google’s TPU pods) with fully non-blocking fabrics and even custom networking units to maximize training throughput.

The Role of Monitoring and Observability in AI Networking

Monitoring and managing AI training and inference networks is itself a challenge. Thousands of high-speed flows, microbursts of traffic, and stringent performance targets make for an incredibly complex network environment. That makes telemetry and observability critical.

Operators need real-time visibility into things like per-link utilization, congestion events, and end-to-end latency. A single congested port could slow an entire AI job, so pinpointing such issues quickly is vital. AI can help here as well, by digesting the deluge of telemetry from an AI fabric and highlighting anomalies or optimizations. That is, we can apply AI for networking within an AI cluster network itself.
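Microbursts are a good example of why granularity matters: a link can look lightly loaded on average while briefly running at line rate. The sketch below computes per-interval utilization from byte counters and flags intervals above a threshold. The 1 ms sampling interval, the 90% threshold, and the counter values are assumptions for illustration; how finely a real device can export counters varies by platform.

```python
def utilization(byte_counts: list, interval_s: float, link_gbps: float) -> list:
    """Per-interval link utilization (0.0 to 1.0) from byte counters."""
    capacity_bytes = link_gbps * 1e9 / 8 * interval_s
    return [b / capacity_bytes for b in byte_counts]


def find_microbursts(byte_counts: list, interval_s: float, link_gbps: float,
                     burst_threshold: float = 0.9) -> list:
    """Indexes of intervals whose utilization meets or exceeds the threshold."""
    util = utilization(byte_counts, interval_s, link_gbps)
    return [i for i, u in enumerate(util) if u >= burst_threshold]


# Made-up bytes per 1 ms interval on a 400 Gbps link (50 MB/ms at line rate).
samples = [5_000_000] * 10
samples[3] = 49_000_000
samples[7] = 49_000_000

util = utilization(samples, interval_s=0.001, link_gbps=400)
average = sum(util) / len(util)
bursts = find_microbursts(samples, interval_s=0.001, link_gbps=400)

print(f"average utilization: {average:.1%}")
print(f"burst intervals: {bursts}")
```

Here the average is under 30%, which would look healthy on a coarse dashboard, yet two intervals ran at 98% of line rate, which is exactly the kind of event that fills buffers and stalls a collective.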

Benefits and Use Cases of AI Networking

AI networking, both AI-for-networking and networking-for-AI, yields significant benefits for organizations, including:

- Greater Automation and Agility: Networks infused with AI can handle many tasks autonomously, from troubleshooting to tuning. This reduces the load on NetOps teams and speeds up response times dramatically. For example, an AI-driven network monitoring system might detect a pattern of intermittent packet loss and automatically trace it to a flapping router interface, opening a ticket or even initiating a failover. This sort of agility is crucial as networks grow in scale and complexity, beyond what manual methods can manage.

- Improved Performance and Reliability: Both aspects of AI networking aim to maximize performance. AI optimizations in network ops lead to higher uptime and fewer performance degradations (since issues are fixed or mitigated faster). Meanwhile, high-performance AI fabrics ensure that critical AI applications (like voice assistants, real-time analytics, or large model training) run as efficiently as possible.

  In business terms, this can mean faster innovation (AI model training completes sooner) and better user experiences (applications are more responsive). A well-known metric in AI training is Job Completion Time (JCT), and AI networking improvements have a direct impact on lowering JCT by eliminating network-induced delays.

- Enhanced Security Posture: By integrating AI, networks become more adept at handling security threats. Machine learning-based NDR (Network Detection and Response) systems, for example, can catch never-before-seen attack patterns by recognizing anomalous behaviors that signature-based systems might miss. AI can also react faster, containing a threat in seconds. This speed and intelligence are increasingly important as attacks become more sophisticated.

- New Operational Insights: AI networking surfaces insights that were previously hard to see. Natural language querying of network data is one example. It democratizes access to information about the network. A help desk technician could ask, “Is anything wrong with the network path between our Chicago office and Salesforce today?” and get a meaningful answer without escalating to a network specialist. AI can also correlate network data with business data (e.g., linking a spike in network latency to a drop in transaction throughput on an e-commerce app), offering a more holistic understanding of how network performance affects business outcomes. This improves the network team’s ability to contribute to broader business intelligence.

- Supporting AI/ML Initiatives: On the infrastructure side, robust networking for AI means organizations can fully leverage AI/ML initiatives. Data scientists and AI engineers can scale their experiments to more GPUs or distributed environments without worrying that the network will be a limiting factor. This improved efficiency is crucial for tasks like training large language models or doing real-time ML inference across a cluster. Essentially, the network will not be the reason an AI project fails to meet its speed or scale goals. Companies building AI products benefit from networks that allow seamless horizontal scaling of AI workloads.

Challenges in AI Networking

Despite the many benefits, it’s important to acknowledge challenges in implementing AI networking:

On the AI-for-netops side, one challenge is trust and governance. Network engineers may be cautious about letting an “AI” make changes to critical infrastructure. If an AI system misidentifies a normal traffic surge as an attack and shuts down a link, for example, it could do harm. Therefore, organizations need robust governance including humans in the loop for approvals, and transparency into how the AI makes decisions.
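One way to picture human-in-the-loop governance is as a policy gate that sits between an AI recommendation and the network. The sketch below is a hypothetical illustration: the action names, the confidence and blast-radius fields, and the thresholds are all assumptions, not a real product's API. Low-risk, high-confidence actions run automatically, everything else waits for a person, and anything without an explanation is rejected so that decisions stay auditable.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"
    NEEDS_APPROVAL = "needs_approval"
    REJECT = "reject"


@dataclass(frozen=True)
class ProposedAction:
    name: str          # hypothetical action identifier, e.g. "bounce_interface"
    target: str        # device or link the action would touch
    confidence: float  # model's confidence in its diagnosis, 0.0 to 1.0
    blast_radius: int  # number of devices or links affected
    explanation: str   # human-readable rationale for the audit trail


@dataclass(frozen=True)
class Policy:
    auto_allowed: frozenset      # actions that may ever run unattended
    min_confidence: float = 0.9  # assumed threshold
    max_blast_radius: int = 1    # assumed threshold


def evaluate(action: ProposedAction, policy: Policy) -> Decision:
    """Decide whether an AI-proposed change runs, waits, or is dropped."""
    if not action.explanation.strip():
        return Decision.REJECT  # unexplained actions cannot be audited
    if (action.name in policy.auto_allowed
            and action.confidence >= policy.min_confidence
            and action.blast_radius <= policy.max_blast_radius):
        return Decision.AUTO_EXECUTE
    return Decision.NEEDS_APPROVAL


policy = Policy(auto_allowed=frozenset({"bounce_interface"}))
bounce = ProposedAction("bounce_interface", "leaf12:Eth1/7", 0.97, 1,
                        "Interface flapped 14 times in 5 minutes")
shutdown = ProposedAction("shutdown_link", "spine2:Eth3/1", 0.99, 40,
                          "Traffic surge resembles a DDoS pattern")
print(evaluate(bounce, policy), evaluate(shutdown, policy))
```

In this sketch, the scenario from the paragraph above (shutting down a link because a surge looks like an attack) would be routed to a human no matter how confident the model is, because the action is not on the unattended allowlist.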

AI networking vendors are addressing these challenges by providing explainable AI outputs and the ability to set policies on automated actions. Another challenge is data quality and integration. AI models are only as good as the data they train on. Pulling in real-time telemetry from multi-vendor, multi-cloud environments can be complex. Ensuring the AI has access to all relevant data (and that it’s normalized and accurate) requires effort.

There’s also the issue of skill gaps. Networking teams may need to learn new tools or basics of data science to fully take advantage of AI features. Many organizations are investing in training or hiring for these cross-disciplinary skills.

On the networking-for-AI side, challenges include the cost and complexity of building such high-end networks. Low-latency, high-bandwidth gear such as specialized switches, NICs, and cabling can be very expensive. Designing a non-blocking fabric for thousands of nodes is a major engineering project.

There’s also a rapid evolution in standards. Companies must bet on the right technologies (e.g., InfiniBand vs. Ethernet improvements). Managing and troubleshooting the AI fabric can be difficult due to the sheer scale and the need for extremely granular telemetry.

Even power and cooling become issues when you have dense clusters pumping 400 Gbps through dozens of cables – the infrastructure around the network (data center cooling, rack design) may itself need upgrades. But given the strategic importance of AI, many organizations find these investments justified, and they mitigate complexity by leveraging reference architectures or cloud services.

How Can Kentik Help with AI Networking?

AI networking has two sides: using AI to operate networks better, and engineering networks that power AI at scale. Kentik enables both: turning heterogeneous telemetry into answers and actions for NetOps, while giving AI infrastructure teams the visibility to keep GPU fabrics fast, loss‑averse, and predictable.

Operate Smarter with Kentik AI for Networking

Kentik AI brings the benefits of network intelligence to NetOps teams:

- Natural‑language troubleshooting: Use natural‑language operations to query flows, routing, device, cloud, and synthetics and get immediate explanations and visualizations. See MTTR gains with faster network troubleshooting and democratized telemetry with Kentik Journeys. Most recently, Kentik introduced Kentik AI Advisor, a powerful new AI designed to deeply understand your network, reason through complex issues, and deliver clear, actionable guidance for designing, operating, and protecting your networks.

- Unified network intelligence: Build end‑to‑end network intelligence. Visualize all cloud and network traffic, validate paths with synthetic monitoring, and monitor devices with Kentik NMS.

- From data to decisions: Move beyond dashboards with AI‑assisted insights that correlate symptoms to causes and recommend next steps with solutions like Cause Analysis.

Build and Run Faster Fabrics with Kentik’s Networking for AI Solutions

Kentik AI helps build, maintain, and speed today’s high-performance AI data center networks:

- Quantify network impact on training: Measure the network’s share of job completion time (JCT) and target fixes that shorten training cycles.

- Eliminate performance killers: Detect synchronized elephant flows, microbursts, loss hotspots, and path asymmetry before they stall all‑reduce phases, and pair those findings with real‑time alerting to protect throughput.

- Plan capacity and topology: Use traffic evidence to validate 1:1 fabrics and prioritize upgrades where they deliver the biggest JCT wins—see The Evolution of Data Center Networking for AI Workloads.

- Optimize hybrid AI paths and cost: Evaluate egress, latency, and routing for cross‑region and multicloud AI pipelines with Cloud Pathfinder. Kentik AI can help improve production inference across clouds, with AI troubleshooting features.

Get started with Kentik: Start a free trial or request a personalized demo today.