Summary

On July 8, 2022, Canadian telecommunications giant Rogers Communications suffered a catastrophic outage taking down nearly all services for its 11 million customers in the largest internet outage in Canadian history. We dig into the outage and debunk the notion that it was caused by the withdrawal of BGP routes.

Beginning at 8:44 UTC (4:44am EDT) on July 8, 2022, Canadian telecommunications giant Rogers Communications suffered a catastrophic outage taking down nearly all services for its 11 million customers in what is arguably the largest internet outage in Canadian history. Internet services began to return after 15 hours of downtime and were still being restored throughout the following day.

Initial analyses of the outage reported that Rogers (AS812) couldn’t communicate with the internet because its BGP routes had been withdrawn from the global routing table. While a majority of AS812’s routes were withdrawn, hundreds of IPv4 and IPv6 routes continued to be announced but stopped carrying traffic nonetheless. This fact points more to an internal breakdown in Rogers’ network, such as in its interior gateway protocol (IGP), than to a failure of its exterior gateway protocol (i.e., BGP).

The view from Kentik

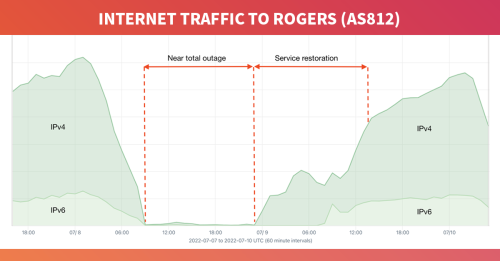

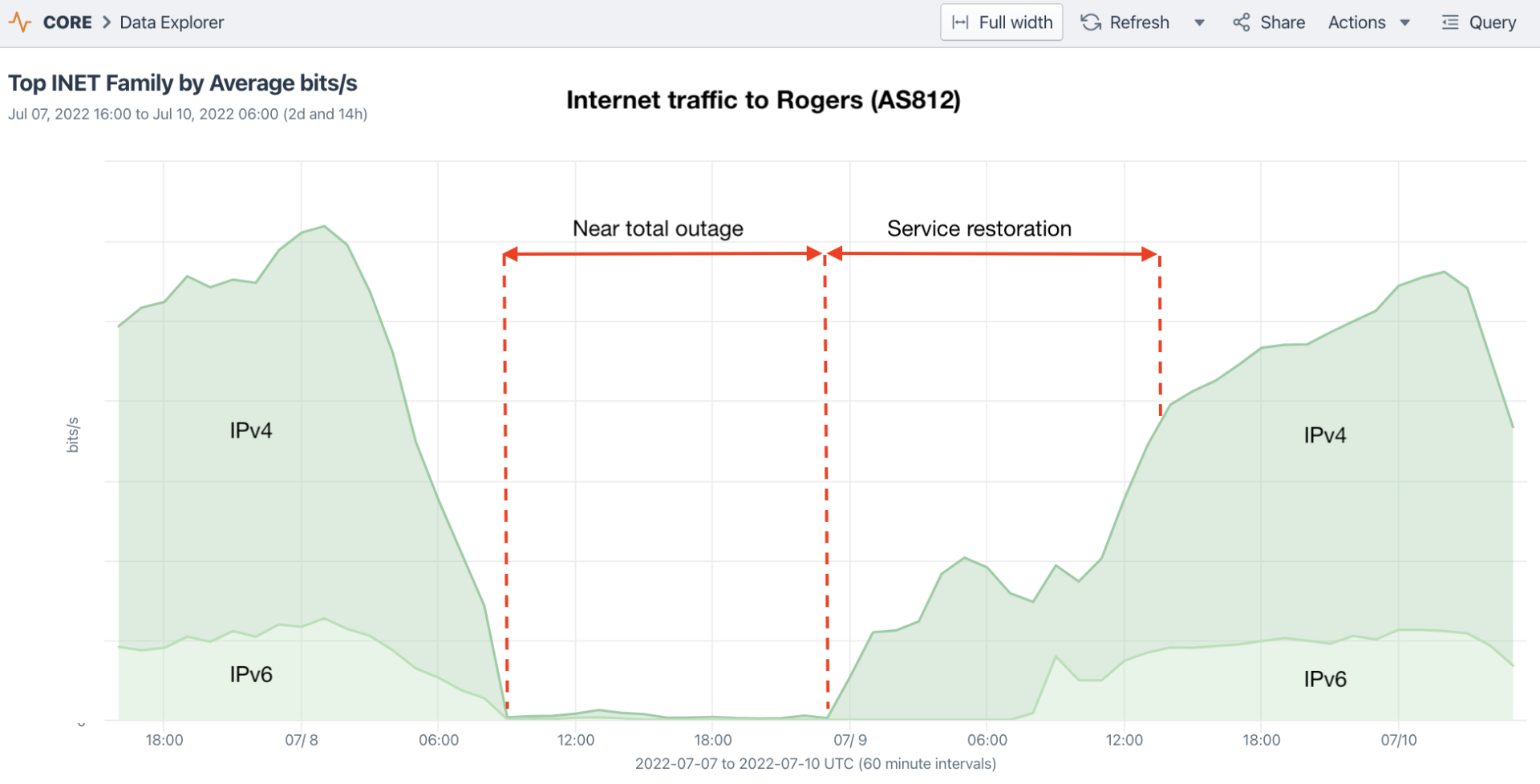

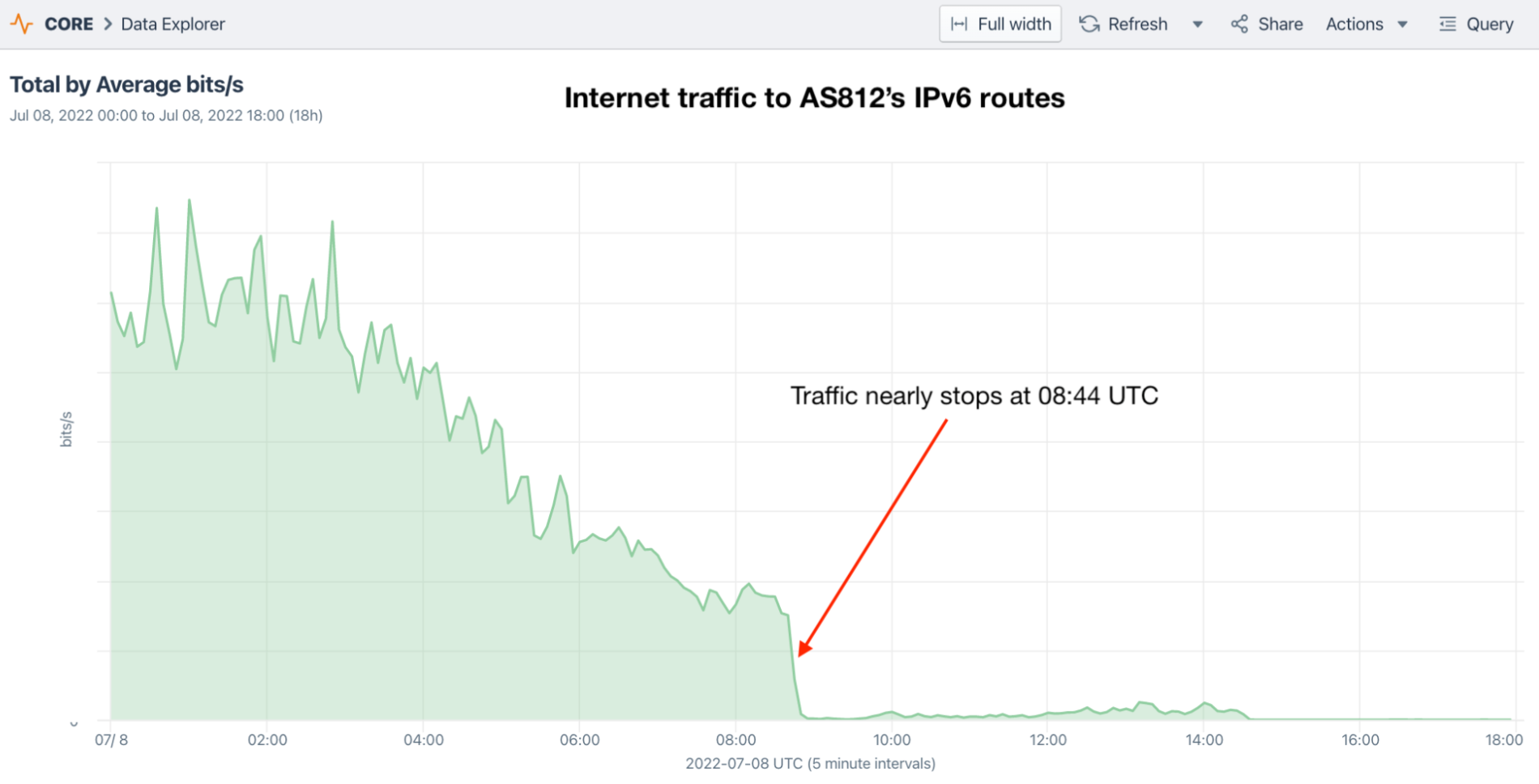

The graphic below shows Kentik’s view of the outage through our aggregated NetFlow data. Traffic dropped to near zero at 08:44 UTC and didn’t begin to return until after midnight UTC. At 00:05 UTC on July 9, IPv4 traffic began flowing again to AS812, but IPv6 didn’t return until 08:30 UTC, for a total downtime of no less than 15 hours. In all, it took several hours more until traffic levels were close to normal.
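For readers who want to check the downtime arithmetic, here is a minimal sketch using only the timestamps quoted above. The constant and function names are ours, chosen for illustration, and the calculation assumes the 00:05 and 08:30 UTC recovery times both fall on July 9.

```python
from datetime import datetime, timezone

# Timestamps taken from the text above (all UTC).
OUTAGE_START = datetime(2022, 7, 8, 8, 44, tzinfo=timezone.utc)
IPV4_RETURN = datetime(2022, 7, 9, 0, 5, tzinfo=timezone.utc)
IPV6_RETURN = datetime(2022, 7, 9, 8, 30, tzinfo=timezone.utc)


def downtime_hours(start, end):
    """Return the elapsed time between two datetimes in hours."""
    return (end - start).total_seconds() / 3600


ipv4_hours = downtime_hours(OUTAGE_START, IPV4_RETURN)
ipv6_hours = downtime_hours(OUTAGE_START, IPV6_RETURN)
print(f"IPv4 downtime: {ipv4_hours:.2f} h, IPv6 downtime: {ipv6_hours:.2f} h")
```

By this measure IPv4 was down for a little over 15 hours and IPv6 for just under 24 hours, which is why 15 hours is a lower bound on the outage.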

Route withdrawals

On a normal day, AS812 originates around 970 IPv4 and IPv6 prefixes that are seen by at least 100 Routeviews vantage points. During the outage, hundreds of these routes were withdrawn, but not all at the same time. Most of the withdrawn routes went down around 08:44 UTC, the same time that traffic stopped flowing to AS812, but there were also batches of route withdrawals at 08:50, 08:54, 08:59, 09:10 and 09:25.
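The "seen by at least 100 vantage points" threshold can be sketched as follows. This is an illustrative sketch, not the tooling used for this analysis: the input format (an iterable of `(peer, prefix)` pairs already extracted from a routing table snapshot) and the function name `globally_visible` are assumptions made for the example.

```python
from collections import defaultdict


def globally_visible(observations, min_peers=100):
    """Return the set of prefixes seen by at least `min_peers` distinct peers.

    `observations` is a hypothetical iterable of (peer_id, prefix) pairs,
    one per route that a vantage point carries at snapshot time.
    """
    peers_by_prefix = defaultdict(set)
    for peer, prefix in observations:
        peers_by_prefix[prefix].add(peer)  # sets ignore duplicate sightings
    return {
        prefix
        for prefix, peers in peers_by_prefix.items()
        if len(peers) >= min_peers
    }


# Synthetic example: one prefix seen by 100 peers, another by only 99.
sample = [(f"peer{i}", "99.247.0.0/18") for i in range(100)]
sample += [(f"peer{i}", "74.198.29.0/24") for i in range(99)]
visible = globally_visible(sample)
```

Comparing the result of such a count across snapshots taken before and during the outage is one way to tally how many prefixes dropped out of global circulation, and when.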

On the day of the outage, a widely shared Reddit post featured a YouTube video of a BGPlay animation of the withdrawal of one of AS812’s routes: 99.247.0.0/18. Below is a reachability visualization of this prefix over the 24-hour period containing the outage. I believe it paints a clearer picture of the plight of this particular prefix: the timing of its initial withdrawal, a temporary restoration, and its eventual return.

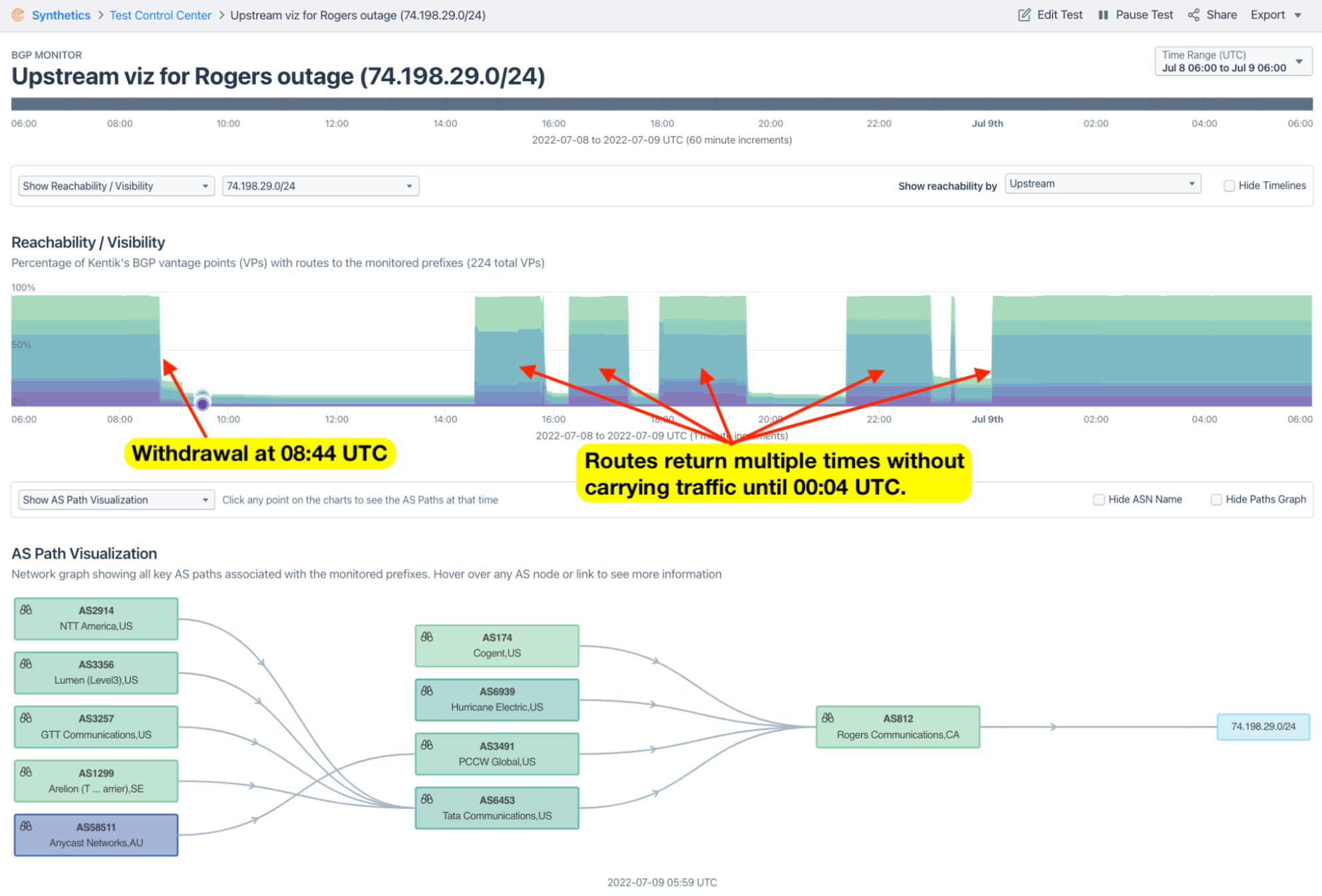

As was the case with 99.247.0.0/18, most of the withdrawn routes returned to the global routing table for periods of an hour or more beginning at 14:33 UTC (see reachability visualization for 74.198.29.0/24 below). Despite the temporary return of these routes, almost no traffic moved into or out of AS812, meaning that it wasn’t the lack of reachability that was causing the outage.

Routes that stayed up

An interesting feature of this outage is the fact that the BGP routes that stayed up stopped carrying traffic. At 10:00 UTC on July 8 (over an hour after the outage began), about 240 IPv4 routes were still globally routed, such as 74.198.65.0/24, illustrated below.

Additionally, at 10:00 UTC, there were about 120 IPv6 routes still being originated by AS812 and seen by at least 100 Routeviews vantage points. Most of these didn’t get withdrawn until 17:11 UTC — more than eight hours into the outage. The reachability visualization of one of those routes (2605:8d80:4c0::/44) is shown below.

If we isolate the traffic seen in our aggregate NetFlow to these 120 IPv6 holdouts, we can see traffic drop away at 08:44 UTC despite the fact that the routes are still in circulation for several more hours. Therefore the drop in traffic was not caused by a lack of reachability in BGP.

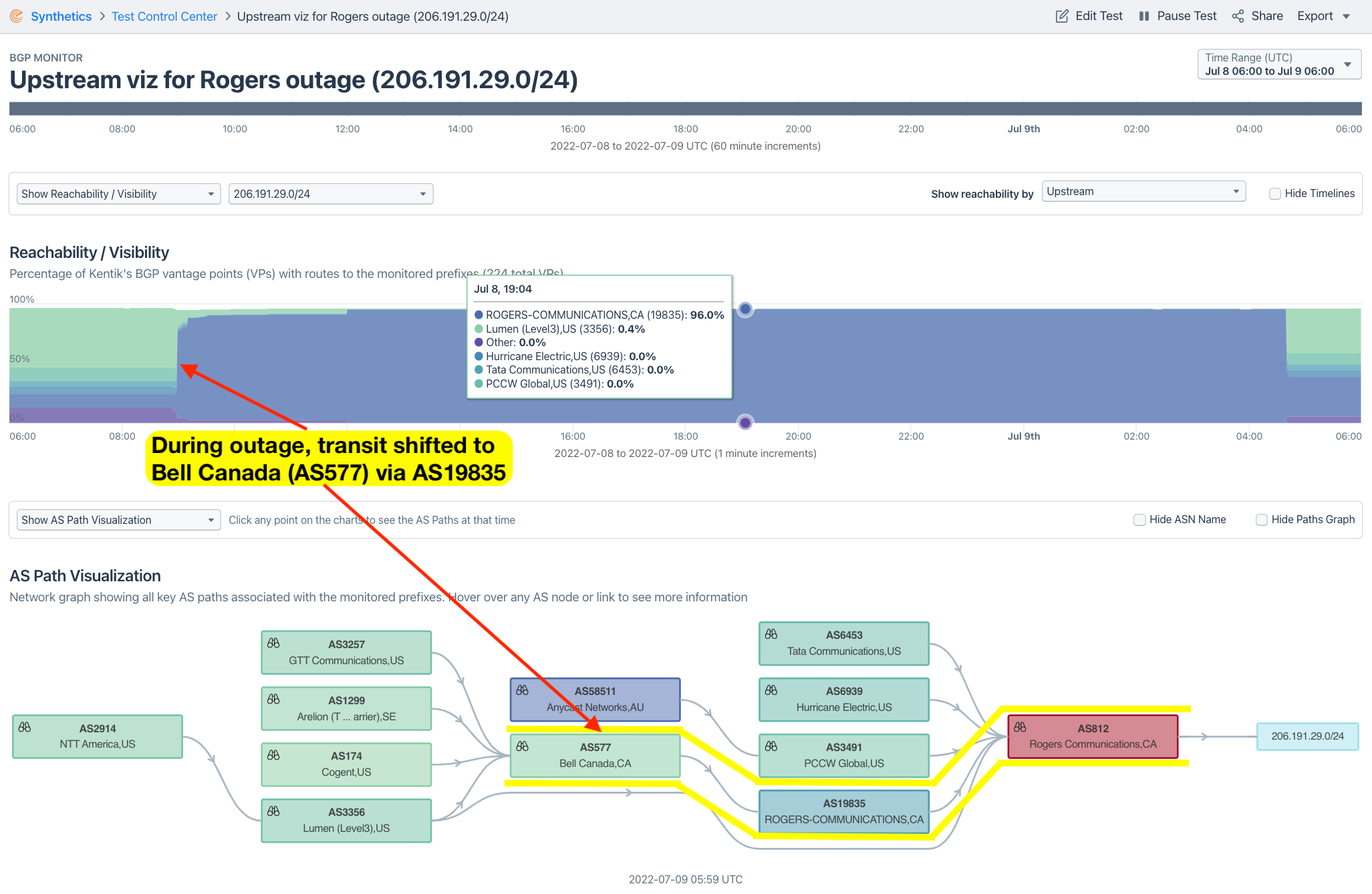
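Isolating traffic to a set of prefixes, as described above, comes down to matching each flow's destination address against those prefixes and summing bytes per time bucket. The sketch below uses Python's standard `ipaddress` module. The flow record layout `(time_bucket, dst_ip, byte_count)` and the function name `traffic_to_prefixes` are hypothetical, and the linear scan over prefixes is chosen for clarity rather than speed.

```python
import ipaddress
from collections import Counter


def traffic_to_prefixes(flows, prefixes):
    """Sum bytes per time bucket for flows destined to any of `prefixes`.

    `flows` is a hypothetical iterable of (time_bucket, dst_ip, byte_count).
    `prefixes` is an iterable of CIDR strings, IPv4 and/or IPv6.
    """
    networks = [ipaddress.ip_network(p) for p in prefixes]
    totals = Counter()
    for bucket, dst_ip, byte_count in flows:
        addr = ipaddress.ip_address(dst_ip)
        # Membership tests across IP versions simply evaluate to False.
        if any(addr in net for net in networks):
            totals[bucket] += byte_count
    return dict(totals)


# Synthetic flow records; only two destinations fall inside the prefixes.
holdouts = ["2605:8d80:4c0::/44", "74.198.65.0/24"]
flows = [
    ("08:00", "2605:8d80:4c5::1", 500),  # inside the /44
    ("08:00", "2605:8d80:4d0::1", 700),  # just outside the /44
    ("09:00", "74.198.65.10", 300),      # inside the /24
    ("09:00", "8.8.8.8", 100),           # unrelated destination
]
per_bucket = traffic_to_prefixes(flows, holdouts)
```

If the per-bucket totals fall to near zero while the prefixes are still present in the routing table, the loss of traffic cannot be explained by a loss of BGP reachability.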

Finally, while it didn’t seem to do much to lessen the impact of the outage, there were also dozens of routes originated by AS812 that stayed online. They continued to carry traffic because they were re-routed through competitors such as Bell Canada (AS577), Beanfield Technologies (AS23498) and Telus (AS852). These routes transited another Rogers ASN, AS19835, to reach those providers, as illustrated in the graphic below.

Don’t blame BGP :-)

In October 2021, Facebook suffered a historic outage when its automation software mistakenly withdrew the anycasted BGP routes handling its authoritative DNS, rendering its services unusable. Last month, Cloudflare suffered a 30-minute outage when it pushed a configuration mistake in its automation software that also caused BGP routes to be withdrawn. From these and other recent episodes, one might conclude that BGP is an irredeemable mess.

But I’m here to say that BGP gets a bad rap during big outages. It’s an important protocol that governs the movement of traffic through the internet. It’s also one that every internet measurement analyst observes and analyzes. When there’s a big outage, we can often see the impacts in BGP data, but these are usually the symptoms, not the cause. If you mistakenly tell your routers to withdraw your BGP routes, and they comply, that’s not BGP’s fault.

However, in the case of the Rogers outage, the fact that traffic stopped for many routes that were still being advertised suggests the issue wasn’t a lack of reachability caused by AS812 not advertising its presence in the global routing table. In other words, the BGP withdrawals were symptoms, not causes, of this outage.

Lastly, the fact that users of Rogers’ Fido mobile service reported having no bars of service during the outage is also a head-scratcher — what is a common dependency that would take down the mobile signal along with internal routing?

We hope the engineers at Rogers can quickly publish a thorough root cause analysis (RCA) to help the internet community learn from this complex outage. As a model, they should look to Cloudflare’s most recent RCA which was both informative and educational.

In the meantime, we remain religious about the value of network observability to understand the health of one’s network and remediate issues when they occur. To learn more, contact us for a live demo.