AI Is Bigger Than LLMs: Why Network Teams Need to Think Beyond Chatbots and Agents

Summary

AI in network operations is more than chatbots and agents. LLMs make AI easier to use, but the real value comes from the underlying system of telemetry, data pipelines, analytics, ML models, domain knowledge, and workflows that help engineers reason, predict, and act. When designed thoughtfully, AI doesn’t replace engineers. Instead, it augments their expertise and reduces cognitive load across complex network operations.

Over the past couple of years, the term “AI” has become almost synonymous with large language models, chat interfaces, and, more recently, AI agents. If you spend any time on LinkedIn or at industry events, it would be easy to conclude that AI is ChatGPT, or that the future of operations is a fleet of autonomous agents clicking buttons on our behalf.

Yes, those technologies are exciting. I use them daily, and I look forward to seeing how they change network operations for the better.

But while LLMs, chatbots, and AI agents are absolutely part of the AI story, they aren’t the whole story.

One of the most critical mindset shifts network and infrastructure teams need to make right now is that AI is not a single technology. It’s a family of techniques, models, and workflows that add intelligence, prediction, and automation to processes. LLMs and agents are just the newest (and currently the loudest) members of that family.

If we reduce AI to “the chatbot era,” we risk missing the deeper, more durable value AI has already been delivering in network operations for years.

AI didn’t start with LLMs

Long before anyone typed a prompt into a chat window to create a silly poem, AI was already quietly at work in network operations.

- Statistical models were used to forecast traffic growth and capacity needs.

- Anomaly detection algorithms were identifying deviations from baseline behavior.

- Classification models were used to tag traffic, applications, and flows.

- Clustering techniques were used to group similar incidents and behaviors.

- Rule-based expert systems were encoding domain knowledge into deterministic workflows.

None of these looked like “AI agents,” but all of them added intelligence beyond static dashboards and manual analysis. They helped systems reason about data, even if that reasoning wasn’t expressed in natural language.

And really, that’s an important distinction. AI isn’t defined by how it talks to us. It’s defined by how it augments an engineer’s decision-making.
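To make the "deviation from baseline" idea concrete, here is a minimal sketch of one classic approach: a trailing-window z-score. It is an illustration only, not how any particular platform does it. The function name `find_anomalies`, the window size, the threshold, and the sample utilization values are all assumptions made up for this example.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate sharply from the trailing baseline.

    Each sample is compared against the mean and standard deviation of the
    `window` samples immediately before it (a simple z-score test).
    """
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change at all as a deviation.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical interface utilization samples in Mbps (made-up data).
utilization = [100, 102, 98, 101, 99, 100, 103, 250, 101, 99]
print(find_anomalies(utilization))  # prints [7]
```

Nothing here talks to the engineer in natural language, yet it already does more than a static threshold: what counts as "normal" is learned from the recent data rather than hard-coded.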

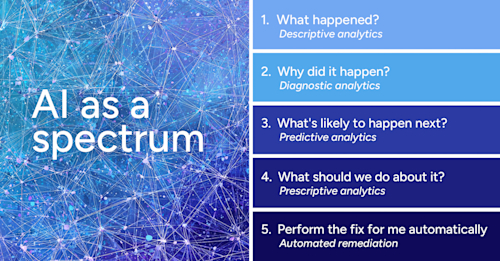

AI as a spectrum

A better way to think about AI is as a spectrum of capabilities that build on one another. For example, a variety of AI tools can answer these kinds of NetOps questions and perform these kinds of tasks:

- What happened? (Descriptive analytics)

- Why did it happen? (Diagnostic analytics)

- What’s likely to happen next? (Predictive analytics)

- What should we do about it? (Prescriptive analytics)

- Perform the fix for me automatically, within guardrails (Automated remediation)

Machine learning models, statistical methods, heuristics, and domain logic live across this spectrum. LLMs primarily operate at the interface and reasoning layers, turning human intent into structured queries, explanations, or workflows. They don’t replace the underlying models that actually analyze telemetry or detect anomalies. They orchestrate them. In other words, LLMs are often the conductor, not the orchestra.

That doesn’t diminish their role, though. In fact, with an LLM, a sophisticated AI system can understand a human engineer’s intent, develop a workflow, and determine which tools to use. Those tools can be any of the AI capabilities we just discussed, simple or complex, such as a predictive model or a clustering algorithm.
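The conductor-and-orchestra relationship can be sketched in a few lines. In this illustration, `choose_tool` is a keyword-matching stub that stands in for the LLM; a real system would have a model select the tool, and nothing here calls an actual LLM API. The tool names (`describe`, `forecast_next`) and the naive trend forecast are hypothetical and exist only to show the shape of the design.

```python
def describe(samples):
    """Descriptive analytics: summarize what happened."""
    return {"min": min(samples), "max": max(samples), "avg": sum(samples) / len(samples)}


def forecast_next(samples):
    """Predictive analytics: naive estimate using the average step per sample."""
    step = (samples[-1] - samples[0]) / (len(samples) - 1)
    return samples[-1] + step


# Registry of deterministic tools the "conductor" is allowed to call.
TOOLS = {"describe": describe, "forecast_next": forecast_next}


def choose_tool(question):
    """Stand-in for the LLM: map a question to a tool name (assumption, not a real model)."""
    q = question.lower()
    if "next" in q or "forecast" in q:
        return "forecast_next"
    return "describe"


def answer(question, samples):
    """Route the question to a tool and return (tool name, tool result)."""
    name = choose_tool(question)
    return name, TOOLS[name](samples)


print(answer("What is likely to happen next?", [10, 20, 30, 40]))
print(answer("What happened?", [10, 20, 30, 40]))
```

The point of the design is that the numbers always come from the deterministic tools. The language layer only decides which tool to run and how to present the result.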

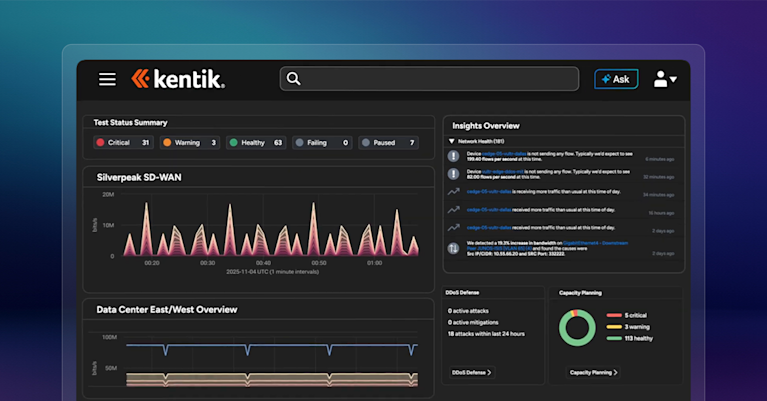

Why this matters for network operations

Networks are complex, dynamic systems. They generate massive volumes of telemetry such as flows, metrics, and logs, and they are surrounded by vast amounts of other data: configuration files, tickets, knowledge base articles, and more. Making sense of that data at scale has always required intelligence beyond simple thresholds.

That’s why network observability platforms have leaned on ML for years to baseline behavior, reduce noise, and surface what actually matters. What’s new isn’t the need for AI. We’ve been using it for a while, just under different names. What’s new is how we interact with it.

LLMs make AI more accessible. They allow engineers to ask better questions, faster. They lower the barrier to insight. They vastly improve the ability to quickly create workflows to solve real problems.

But the quality of the answers still depends on the models performing the analysis, the data they analyze, the context that defines what “normal” and “important” mean, and the workflows that turn insight into action.

Without those foundations, an LLM is just a very confident narrator.

Agents are not magic either

AI agents are another powerful but often misunderstood piece of the puzzle.

An agent is not intelligent by itself. An agent is a control loop: observe, plan, act, evaluate. What makes an agent useful is the quality of the tools, data, and constraints it operates with.

In network operations, that means agents should:

- Call deterministic analytics tools (queries, baselines, models)

- Follow validated operational workflows

- Respect guardrails and policies

- Keep humans in the loop for high-risk actions

Agents shine when they automate well-understood processes. They struggle when asked to invent expertise on the fly. Again, the intelligence comes from the system design, not the buzzword.
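Here is a minimal sketch of that control loop with a guardrail and a human approval step. Everything in it is hypothetical: the `Link` class, the 90 percent threshold, the `reroute_traffic` action, and the assumption that rerouting sheds 40 points of utilization are made up to illustrate the pattern, not taken from any real product.

```python
from dataclasses import dataclass, field

# Guardrail: actions in this set require explicit human approval.
HIGH_RISK_ACTIONS = {"reroute_traffic"}


@dataclass
class Link:
    utilization: int  # percent, hypothetical telemetry value
    log: list = field(default_factory=list)


def observe(link):
    return link.utilization


def plan(utilization):
    """Deterministic rule: propose a fix only when the link is congested."""
    return "reroute_traffic" if utilization > 90 else None


def act(link, action, approve):
    """Run the action unless the guardrail blocks it. Returns True if it ran."""
    if action in HIGH_RISK_ACTIONS and not approve(action):
        link.log.append(f"skipped {action}: approval denied")
        return False
    if action == "reroute_traffic":
        link.utilization -= 40  # assumed effect of the reroute
    link.log.append(f"ran {action}")
    return True


def evaluate(link):
    """Goal check: is the link back under the congestion threshold?"""
    return link.utilization <= 90


def run_agent(link, approve, max_steps=3):
    """Observe, plan, act, evaluate; stop when healthy, blocked, or out of steps."""
    for _ in range(max_steps):
        action = plan(observe(link))
        if action is None or not act(link, action, approve) or evaluate(link):
            break
    return link.log


print(run_agent(Link(95), approve=lambda action: True))
print(run_agent(Link(95), approve=lambda action: False))
```

Notice that the loop itself contains no intelligence. Whatever usefulness it has comes from the planning rule, the guardrail list, and the approval callback it is given.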

AI is a system

One of the mistakes I see organizations make is treating AI as a bolt-on feature: “Add an LLM here” or “Turn on agents there.” That rarely works. Effective AI in operations is systemic and combines high-quality telemetry, sophisticated data pipelines, proven analytics and ML models, encoded domain knowledge, pre-built automation frameworks, human-centered interfaces, and the feedback loops necessary for learning and improvement.

When those pieces come together, you get something powerful: a system that doesn’t just show data, but helps engineers think. That’s the real promise of AI in network operations. It isn’t about replacing engineers, but about augmenting them to scale expertise and reduce cognitive load.

The road ahead

Let me be clear: LLMs and agents deserve the attention they’re getting. They’re transformative technologies. But they are accelerators, not replacements, for everything that came before.

If you’re building or evaluating AI-driven operational systems, ask deeper questions, such as “What intelligence exists beneath the interface?”, “How does the system reason about my network specifically?”, and “What workflows are being automated?”

AI is not just a chatbot. It’s not just an LLM, and it’s not just an agent. It’s a layered, evolving set of technologies that, when thoughtfully designed as a system, can make complex environments like networks easier to operate, understand, and trust.