Summary

Last week a major internet outage took out one of Australia’s biggest telecoms. In a statement out yesterday, Optus blames the hours-long outage, which left millions of Aussies without telephone and internet, on a route leak from a sibling company. In this post, we discuss the outage and how it compares to the historic outage suffered by Canadian telecom Rogers in July 2022.

In the early hours of Wednesday, November 8, Australians woke up to find one of their major telecoms completely down. Internet and mobile provider Optus had suffered a catastrophic outage beginning at 17:04 UTC on November 7 (4:04 am on November 8 in Sydney). It wouldn’t fully recover until hours later, leaving millions of Australians without telephone and internet connectivity.

On Monday, November 13, Optus released a statement about the outage blaming “routing information from an international peering network.” In this post, I will discuss this statement as well as review what we could observe from the outside. In short, from what we’ve seen so far, the Optus outage has parallels to the catastrophic outage suffered by Canadian telecom Rogers in July 2022.

Analyzing the outage from afar

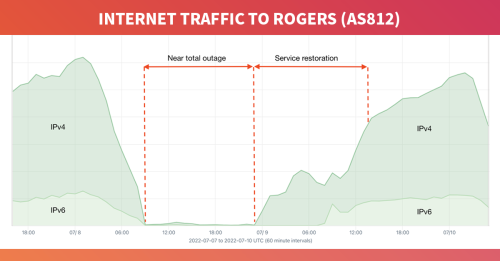

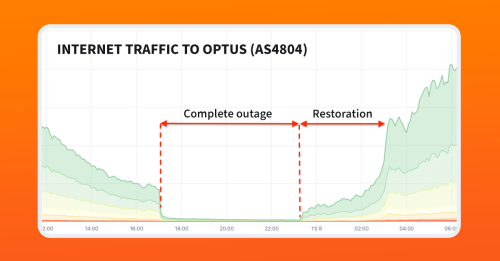

Let’s start with the overall picture of what the outage looked like in terms of traffic volume using Kentik’s aggregate NetFlow. As depicted in the diagram below, we observed traffic to and from Optus’s network drop to almost zero beginning at 17:04 UTC on November 7 (4:04 am on November 8 in Sydney, Australia).

Traffic began to return at 23:22 UTC (10:22 am in Sydney) following a complete outage that lasted over 6 hours. Pictured below, the restoration was phased, beginning with service in the east and working westward until it was fully recovered over three hours later.
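As a quick sanity check on the timeline, the sketch below converts the two UTC timestamps quoted above to Sydney local time and computes the gap between them. It assumes Sydney was on daylight time (UTC+11) on these dates and hard-codes that offset rather than consulting a timezone database; the variable names are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Sydney was observing daylight time (AEDT, UTC+11) in November 2023.
AEDT = timezone(timedelta(hours=11), name="AEDT")

# Timestamps quoted in the text, both on November 7 in UTC.
outage_start = datetime(2023, 11, 7, 17, 4, tzinfo=timezone.utc)
traffic_returns = datetime(2023, 11, 7, 23, 22, tzinfo=timezone.utc)

start_local = outage_start.astimezone(AEDT)
return_local = traffic_returns.astimezone(AEDT)
outage_duration = traffic_returns - outage_start

print(start_local.strftime("%Y-%m-%d %H:%M %Z"))
print(return_local.strftime("%Y-%m-%d %H:%M %Z"))
print(outage_duration)
```

The gap between the two timestamps comes to 6 hours and 18 minutes, consistent with a complete outage of "over 6 hours" before the phased restoration began.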

As stated in the introduction, there are notable parallels between this outage and the Rogers outage of July 2022. The first is the role BGP played in each.


In the case of Rogers, the outage was triggered when an internal route filter was removed, allowing the global routing table to be leaked into Rogers’s internal routing table. This development overwhelmed the internal routers within Rogers, causing them to stop passing traffic. Rogers also stopped announcing most, but not all, of the 900+ BGP routes it normally originated.

In the case of last week’s outage, Optus’s AS4804 also withdrew most, but not all, of its routes from the global routing table at the time of the outage.

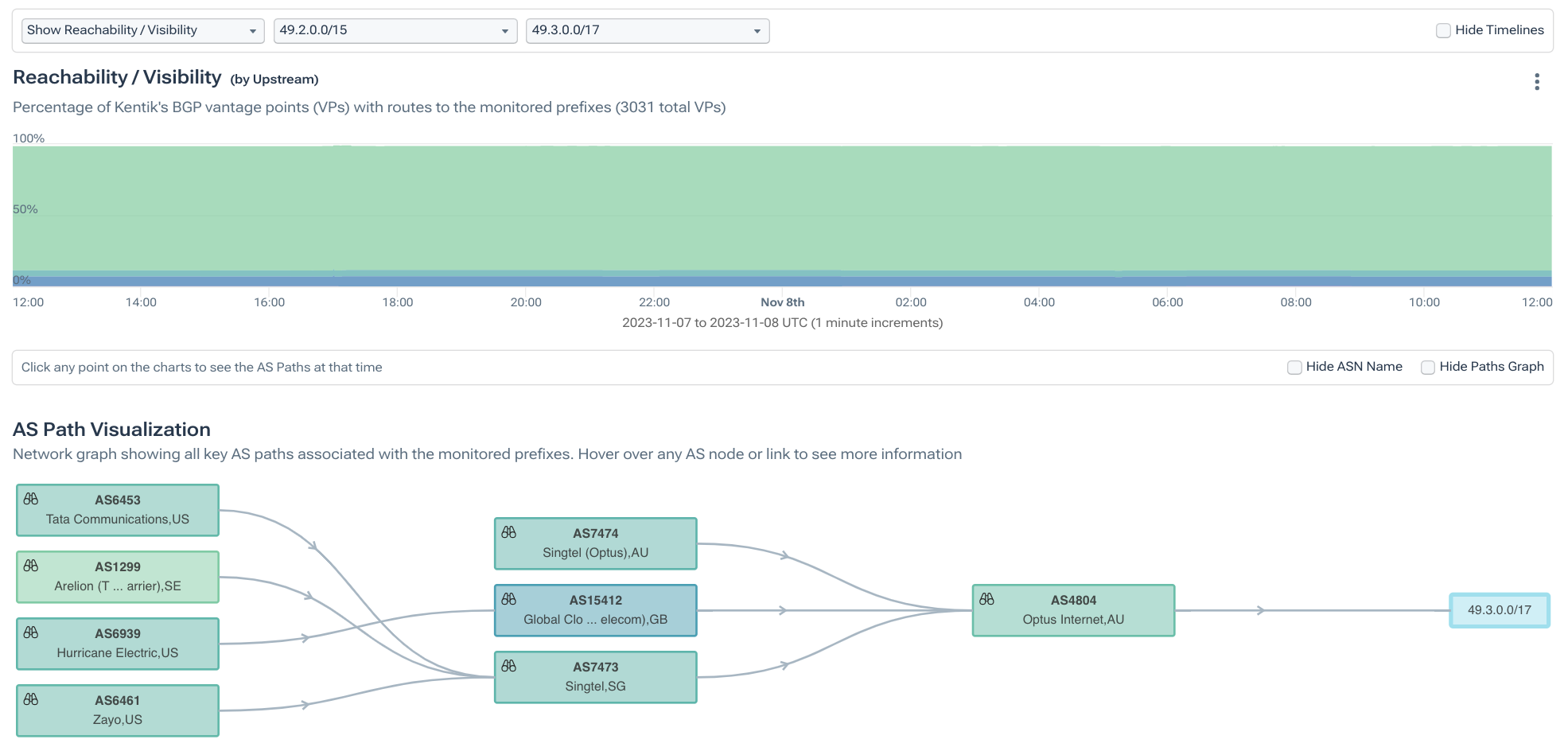

As an example, below is a visualization of an Optus prefix (49.2.0.0/15) that was withdrawn at 17:04 UTC and returned at 23:20 UTC later that day.

However, this didn’t render all of that IP space unreachable as more-specifics such as 49.3.0.0/17 were not withdrawn during the outage, as is illustrated in the visualization below.
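To make the relationship between the two prefixes concrete, here is a minimal sketch using Python's standard `ipaddress` module. It only checks that 49.3.0.0/17 sits inside 49.2.0.0/15 and how much of the larger block it represents; it says nothing about which other more-specifics Optus kept announcing.

```python
import ipaddress

# The withdrawn covering route and one more-specific that stayed up,
# both taken from the text above.
covering = ipaddress.ip_network("49.2.0.0/15")
more_specific = ipaddress.ip_network("49.3.0.0/17")

# True when every address in the /17 also falls inside the /15.
is_covered = more_specific.subnet_of(covering)

# Fraction of the /15's address space still reachable via this one /17.
share = more_specific.num_addresses / covering.num_addresses

print(f"{more_specific} inside {covering}: {is_covered}")
print(f"share of the /15 covered by the /17: {share:.0%}")
```

Because routers forward on the longest matching prefix, any address inside the /17 could still be routed toward Optus after the /15 disappeared, while the rest of the /15 depended on whatever other more-specifics remained.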

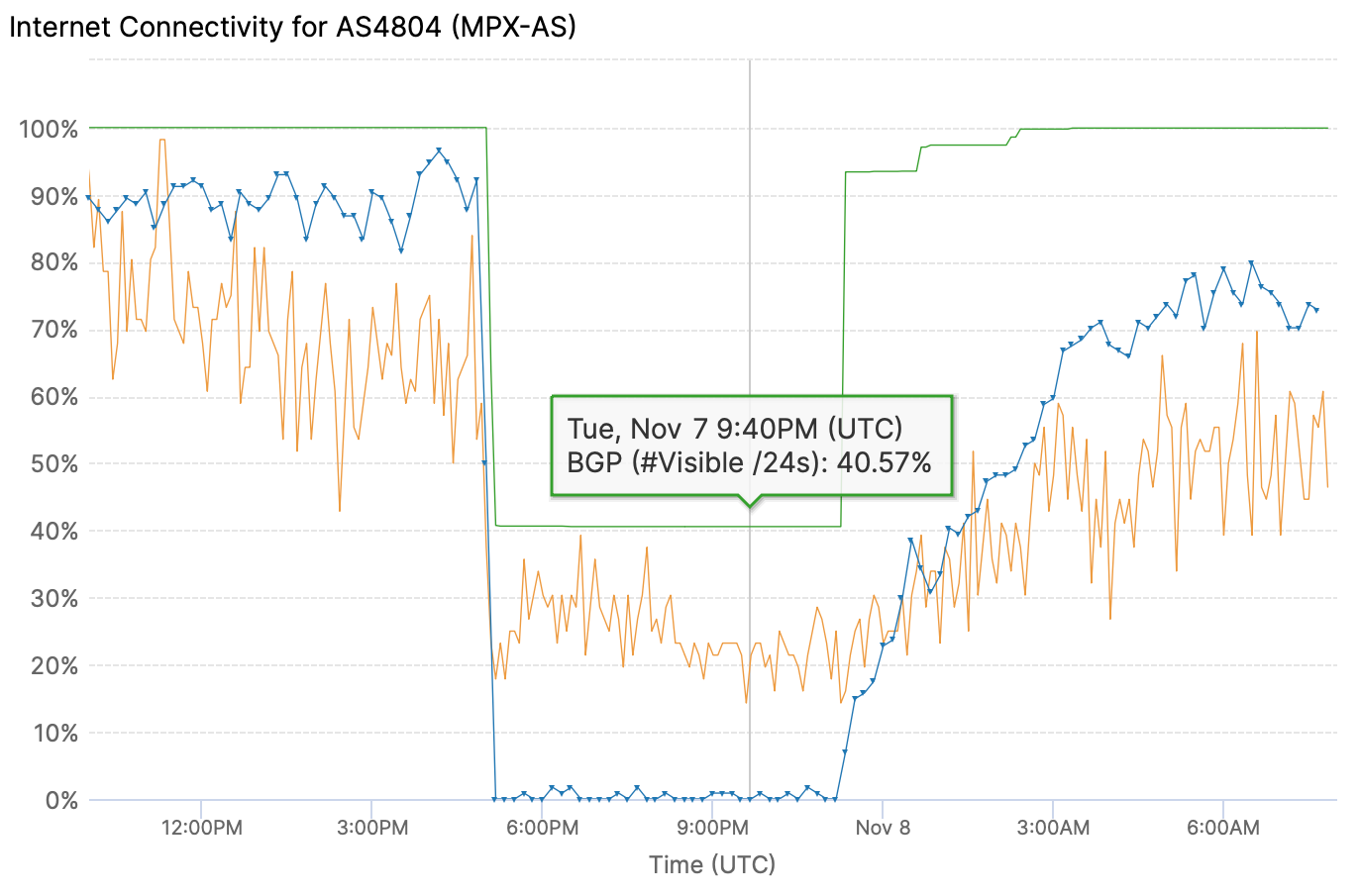

In fact, the IODA tool from Georgia Tech captures this aspect of the Optus outage well. Pictured below, IODA estimates (in the green line) that AS4804 was still originating about 40% of the IP space it normally did, even though the successful pings (blue line) dropped to zero during the outage.

However, if we utilize Kentik’s aggregate NetFlow to analyze the traffic to the IP space of the routes that weren’t withdrawn, we still observe a drop in traffic of over 90%, nearly identical to the overall picture.

From this, we can conclude that, as was the case with Rogers, traffic stopped going to Optus not due to a lack of available routes in circulation (i.e. reachability), but due to an internal failure within Optus that caused the network to stop sending and receiving traffic.

A Flood of BGPs?

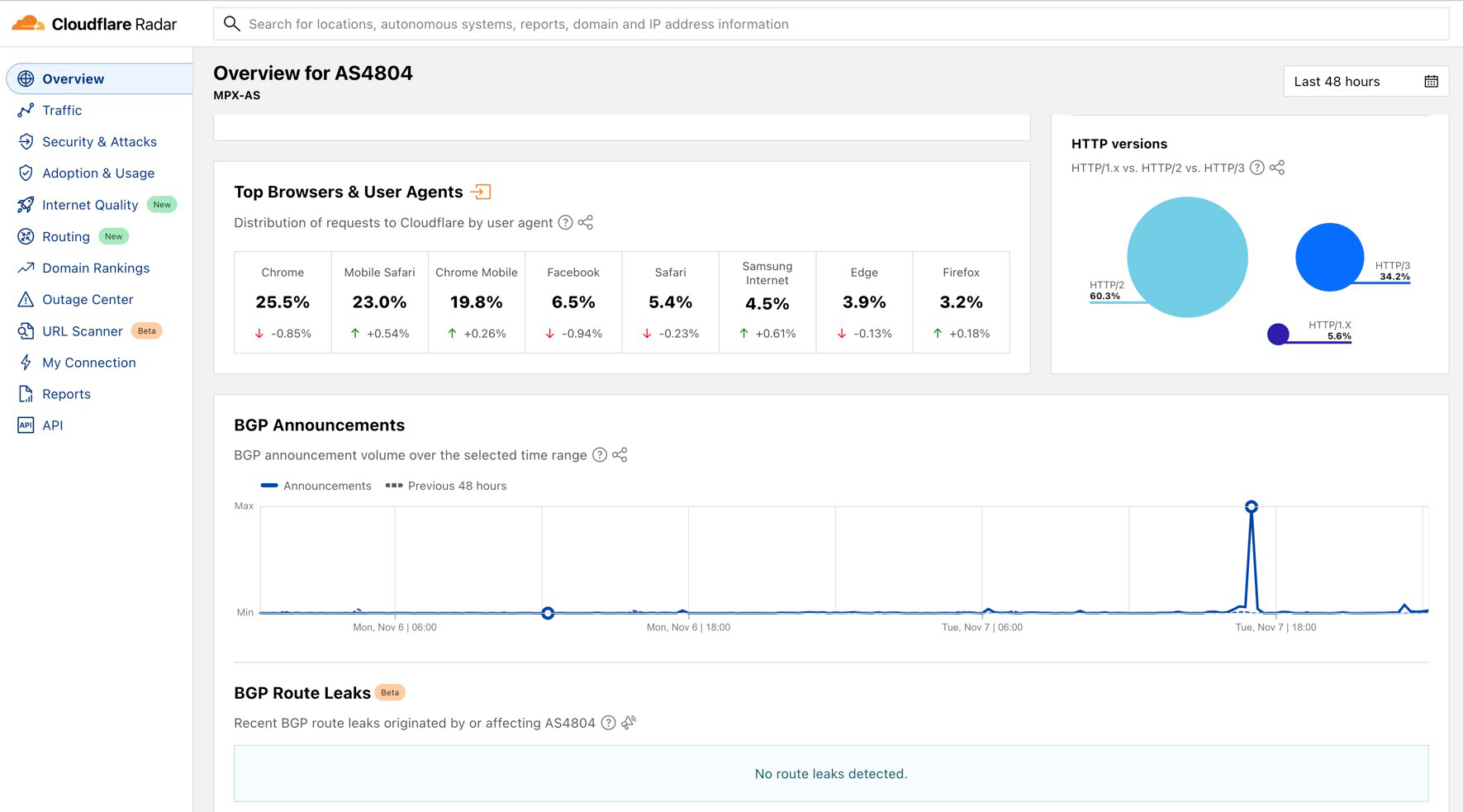

Earlier this year, my friend and BGP expert Mingwei Zhang announced the expansion of the Cloudflare Radar page to include various routing metrics. One of the statistics included on each AS page is a rolling count of BGP announcements relating to the routes originated by the AS.

In the aftermath of the Optus outage, I saw postings on social media citing the page for AS4804, showing a spike in BGP messages at the time of the outage. It’s worth discussing what this does or does not mean.

As you may already know, BGP is a “report by exception” protocol. In theory, if nothing is happening and no routes are changing, then no BGP announcements (UPDATE messages, to be precise) are sent.

The withdrawal of a route triggers a flurry of UPDATE messages as the ASes of the internet search in vain for a route to replace the one that was lost. The more routes withdrawn, the larger the flurry of messages.

This includes both messages that signal a new AS_path or other attribute, and messages that signal that the sending AS no longer has the route in its table. Let’s call these types of UPDATE messages “announcements” and “withdrawals,” respectively.

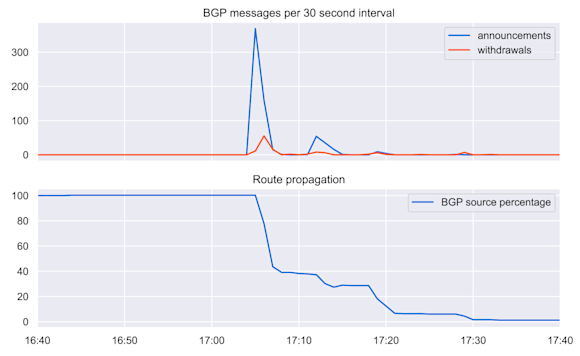

Perhaps surprisingly, when a route is withdrawn, the number of announcements typically exceeds the number of withdrawals by an order of magnitude. If we take a look at the plight of the withdrawn Optus prefix from earlier in this post (49.2.0.0/15), we can see the following.

The lower graph tracks the number of Routeviews BGP sources carrying 49.2.0.0/15 in their tables over time. The line begins a descent at 17:04 UTC when the outage begins and takes more than 20 minutes to be completely gone. Coinciding with the drop in propagation on the bottom, the upper graph shows spikes in announcements and withdrawals — much more of the former than the latter.

Optus did not break BGP; its internal outage caused it to withdraw a subset of its routes. The spike in BGP announcements was simply the natural consequence of those withdrawals.

Optus and RPKI

First things first: RPKI did not play a role in this outage. But Optus has some issues regarding RPKI that are worth discussing here.

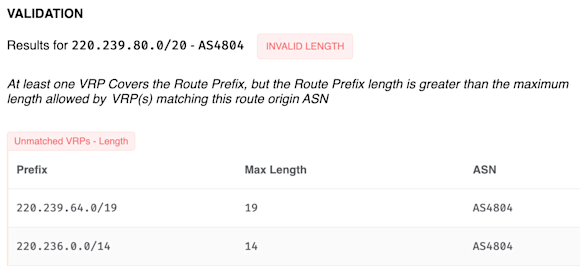

On any given day, AS4804 announces more than 100 RPKI-invalid routes to the internet. These routes suffer from the same misconfiguration shared by the majority of the persistently RPKI-invalid routes that can be found in the routing table on any given day. Specifically, they are invalid due to the maxLength setting specified in the ROA.

Take 220.239.80.0/20, for example, whose validation is shown below. According to the ROA, this IP space is not to be announced in a BGP route with a prefix length longer than 19. Since the route’s prefix length is 20, it is RPKI-invalid and is being rejected by networks (including the majority of the tier-1 backbone providers) that have deployed RPKI.
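The maxLength check can be sketched in a few lines of Python. This is a simplified illustration of route origin validation in the spirit of RFC 6811, not any validator's actual implementation. The ROA contents (prefix 220.239.64.0/19, maxLength 19, origin AS4804) are an assumption inferred from the description above, and the `Roa` class and `validate` function are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Roa:
    prefix: ipaddress.IPv4Network
    max_length: int
    origin_asn: int


def validate(route_prefix: str, origin_asn: int, roas: list) -> str:
    """Return 'valid', 'invalid', or 'not-found' for a route announcement."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        if route.subnet_of(roa.prefix):
            covered = True  # at least one ROA covers this address space
            if route.prefixlen <= roa.max_length and origin_asn == roa.origin_asn:
                return "valid"
    # Covered by a ROA but matched by none of them: invalid.
    return "invalid" if covered else "not-found"


# Assumed ROA, inferred from the text; the real ROA may differ.
current_roas = [Roa(ipaddress.ip_network("220.239.64.0/19"), 19, 4804)]
# Hypothetical ROA with maxLength raised to match the announced /20s.
raised_roas = [Roa(ipaddress.ip_network("220.239.64.0/19"), 20, 4804)]

print(validate("220.239.64.0/19", 4804, current_roas))
print(validate("220.239.80.0/20", 4804, current_roas))
print(validate("220.239.80.0/20", 4804, raised_roas))
```

Under these assumptions, the /19 validates, the /20 fails purely because its length exceeds maxLength, and the same /20 passes once maxLength is raised to 20.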

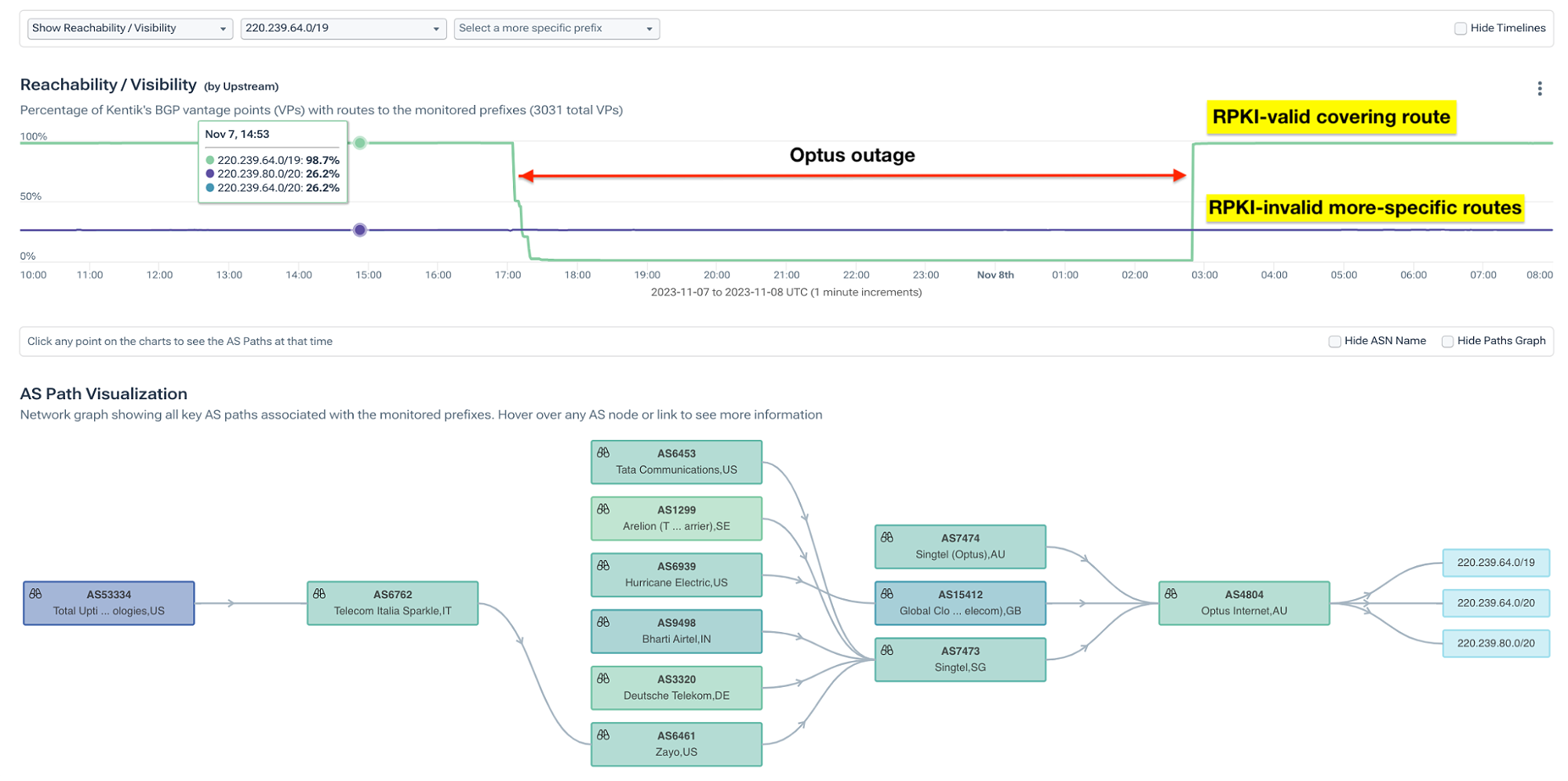

Since these routes are RPKI-invalid, their propagation is greatly reduced. Normally, this isn’t a problem because Optus also announces covering routes, ensuring that the IP space contained in the RPKI-invalid routes is reachable in the global routing table.

However, during the outage last week, many of these covering routes were withdrawn, causing the internet to have to rely on the RPKI-invalid more-specific routes to reach this IP space. This would have caused this IP space to become unreachable for most of the internet, greatly reducing its ability to communicate with the world.

In the example below, 220.239.64.0/20 and 220.239.80.0/20 are RPKI-invalid and, therefore, only seen by 26.2% of Kentik’s 3,000+ BGP sources. The covering prefix 220.239.64.0/19 is RPKI-valid and enjoys global propagation. During the outage, 220.239.64.0/19 was withdrawn and as a result, over 70% of the internet did not have a way to reach 220.239.64.0/20 and 220.239.80.0/20 due to the greatly limited propagation that RPKI-invalid routes typically experience.

The reachability of these routes over time is illustrated in the diagram below.

Ultimately, this did not have a significant impact on the outage. We know this because our analysis above shows that traffic to IP space in routes that weren’t withdrawn also dropped by over 90%.

Regardless, there is a simple fix that Optus can make to resolve this issue. The maxLength setting can either be increased to match the prefix length of the route, or it can simply be removed, causing RPKI to only match on the AS origin of AS4804.

To help alleviate the confusion surrounding the maxLength setting, this latter course of action was recently published as a best practice in RFC 9319, “The Use of maxLength in the Resource Public Key Infrastructure (RPKI).”

Conclusion

The multi-hour outage last week left more than 10 million Australians without access to telephone and broadband services, including emergency lines. In a story that went viral, one woman discovered the outage when her cat Luna woke her up because the feline’s wifi-enabled feeder failed to dispense its brekkie.

On Monday, November 13, 2023, Optus issued a press release that nodded at the cause in the following paragraph:

> At around 4:05 am Wednesday morning, the Optus network received changes to routing information from an international peering network following a routine software upgrade. These routing information changes propagated through multiple layers in our network and exceeded preset safety levels on key routers which could not handle these. This resulted in those routers disconnecting from the Optus IP Core network to protect themselves.

To me, that statement suggests that an external network (presumably now identified) that connects with AS4804 sent a large number of routes into Optus’s internal network, overwhelming their internal routers and bringing down their network. If that is the correct interpretation of the statement, then the outage was nearly identical to the Rogers outage in July 2022, when the removal of route filters allowed a route leak to overwhelm the network’s internal routers.

Like any network exchanging traffic on the internet, Optus’s network needs to be able to handle the likely scenario that a peer leaks routes to it — even if it is another subsidiary of its parent company. These types of mistakes happen all the time.

Aside from filtering the types of routes accepted from a peer, a network should, at a minimum, employ a kind of circuit breaker setting (known as Maximum-Prefix or MAXPREF) that kills the session if the number of routes received goes above a predefined limit.

Another possibility is that Optus did use MAXPREF but used a higher threshold on its exterior perimeter than on the interior — allowing a surge of routes through its border routers but taking down sessions internally. When MAXPREF is reached, routers can be configured to automatically re-establish the session after a retry interval or go down “forever,” requiring manual intervention. Be aware that Cisco’s default behavior is to go down forever.
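The mismatched-threshold scenario can be illustrated with a toy model. The sketch below is not router code and does not reflect Optus's actual configuration: the `BgpSession` class, the session names, the thresholds, and the size of the leak are all hypothetical values chosen only to show how a higher limit at the border than in the core lets a surge through the edge while tripping sessions inside.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BgpSession:
    name: str
    max_prefixes: int
    # None models "go down forever": no automatic restart, manual reset needed.
    restart_after_s: Optional[int] = None
    up: bool = True

    def receive(self, route_count: int) -> int:
        """Return how many routes are passed on; tear down if over the limit."""
        if not self.up:
            return 0
        if route_count > self.max_prefixes:
            self.up = False  # the circuit breaker trips
            return 0
        return route_count


# Hypothetical thresholds: the border tolerates more routes than the core.
border = BgpSession("border-to-peer", max_prefixes=1_000_000)
core = BgpSession("core-to-border", max_prefixes=500_000)

leaked_routes = 900_000  # hypothetical size of the route surge

passed_border = border.receive(leaked_routes)
passed_core = core.receive(passed_border)

for session in (border, core):
    state = "up" if session.up else "down"
    if not session.up and session.restart_after_s is None:
        state += " (manual reset required)"
    print(f"{session.name}: {state}")
```

In this toy run the border session stays up and forwards the full surge, while the core session exceeds its lower limit and goes down until someone intervenes, which is the failure pattern described above.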

Again, mistakes happen regularly in internet routing. Therefore, it is imperative that every network establish checks to prevent a catastrophic failure. It would seem that Optus got caught without some of their checks in place.

The Australian government announced that it is launching an investigation into the outage. At the time of this writing, we don’t yet know if Luna will be called to testify.