If Your Network Traffic Is Continuous, Why Isn’t Your Synthetic Testing?

Summary

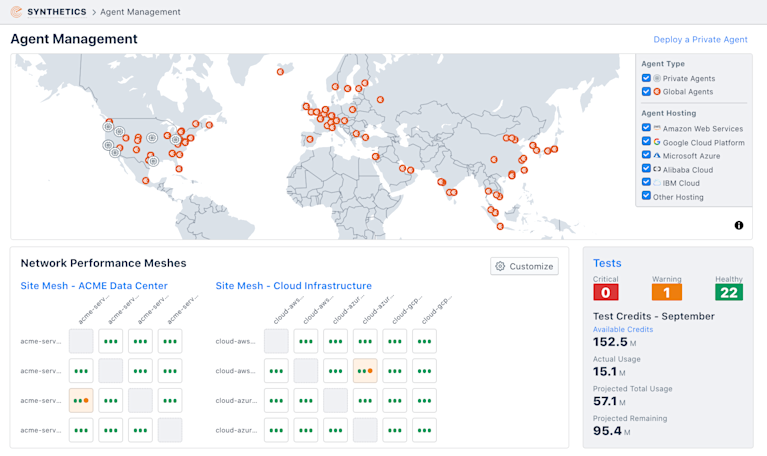

In this post, we show how Kentik Synthetic Monitoring supports high-frequency tests with sub-minute intervals, providing network teams with a tool that captures what’s happening in the network, including subtle degradations.

Reliable networks are the sine qua non of any modern business that uses digital technologies, clouds, and the internet to build and deliver services. In other words, if the network does not do its part, success is unattainable.

Synthetic monitoring was adopted to provide some level of confidence that the network is delivering a good experience. By actively running tests at regular intervals, IT teams get a head start on detecting and addressing problems proactively, and they build a foundation for assessing quality through metrics such as latency, jitter, and packet loss.

If the performance measurements reach unacceptable levels, it is straightforward to conclude that the network is at risk of not supporting a good digital experience. On the other hand, if the metrics collected don’t show degradation, how confident can IT teams be that the digital experience is OK? To answer this question, one must first understand the effectiveness of the tests performed. Are they being run frequently enough to capture events impacting your traffic?

Legacy solutions that test infrequently are blind to subtle degradations

Here is where legacy synthetic monitoring solutions fail. As a general rule, the less frequent the test, the less effective it is at catching subtle changes in performance. Due to a combination of product limitations and cost inefficiencies, legacy synthetic monitoring solutions keep test intervals in the range of several minutes to tens of minutes, which makes testing too sparse to be meaningful.
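To make the rule of thumb concrete, here is a minimal sketch of how test interval affects the chance of seeing a short degradation at all. It assumes an idealized model that is not taken from any product: probes are instantaneous, they run on a fixed schedule, and the degradation starts at a random moment relative to that schedule. The function name and the 20-second event are illustrative only.

```python
def detection_probability(event_duration_s: float, test_interval_s: float) -> float:
    """Chance that at least one periodic probe lands inside a degradation window.

    Assumptions (illustrative model): probes are instantaneous, evenly spaced,
    and the event start is uniformly random relative to the probe schedule.
    Under those assumptions the probability is min(1, duration / interval).
    """
    if event_duration_s < 0 or test_interval_s <= 0:
        raise ValueError("duration must be >= 0 and interval must be > 0")
    return min(1.0, event_duration_s / test_interval_s)


if __name__ == "__main__":
    event_s = 20  # hypothetical 20-second latency spike
    for interval_s in (600, 60, 1):  # 10-minute, 1-minute, per-second tests
        p = detection_probability(event_s, interval_s)
        print(f"interval={interval_s:>4}s  chance of catching the event: {p:.1%}")
```

Under this simplified model, an event shorter than the test interval can be missed entirely, while any event at least as long as the interval is always sampled at least once.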

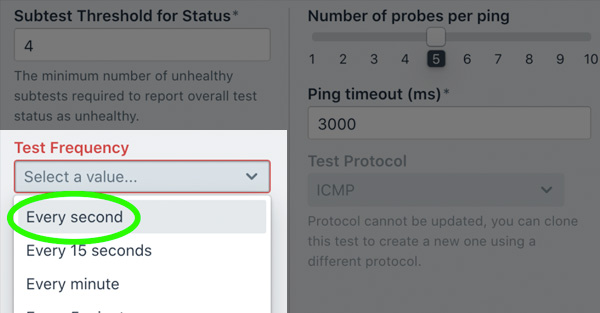

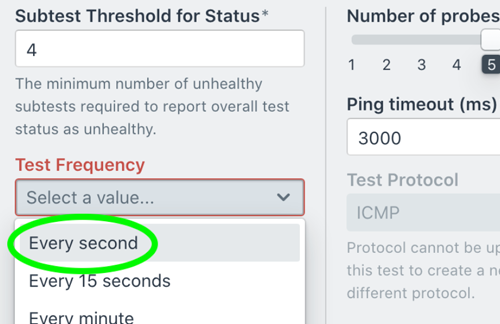

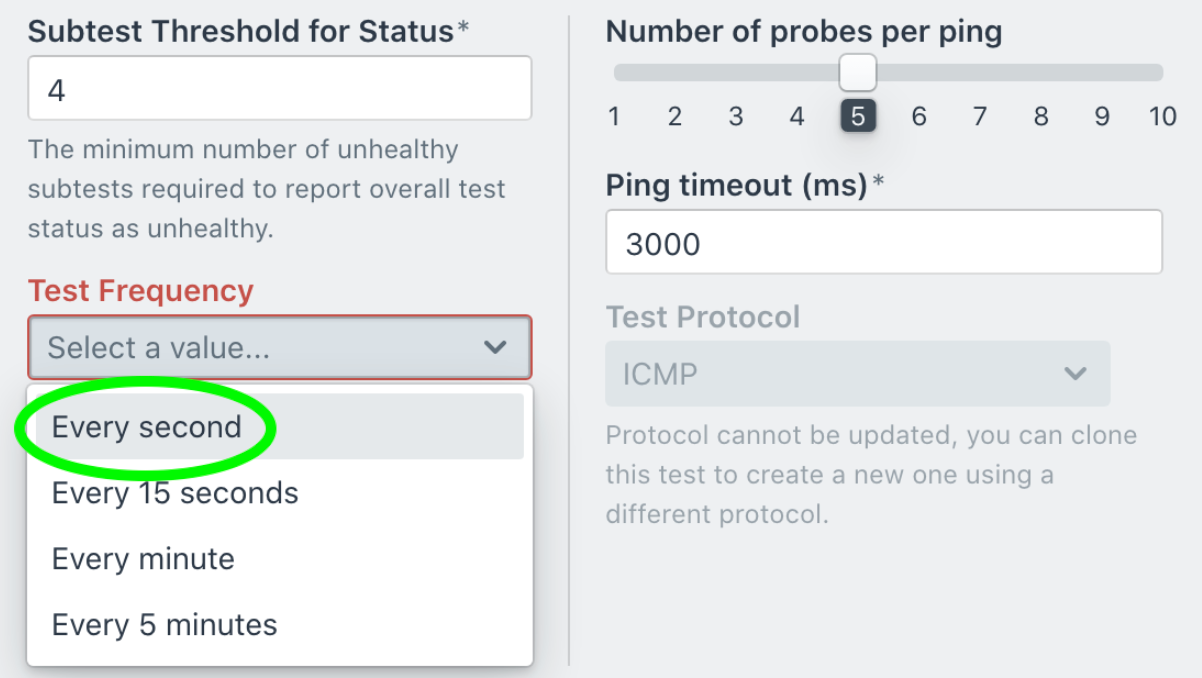

Monitoring network performance is fundamental to assessing digital experience and service quality. Testing frequency is a primary factor in ensuring that synthetic monitoring provides meaningful insight into the network’s reliability and digital experience quality. So, when we designed Kentik Synthetic Monitoring, we made sure that it supports high-frequency tests with sub-minute intervals, giving network teams a tool that can capture what is going on in the network, including subtle degradations.

Continuous testing is also vital to improving MTTR and digital experience

The lack of sub-minute test intervals was a recurrent complaint we received from companies using legacy synthetic monitoring solutions, especially those running applications that are very sensitive to performance degradation.

It is fair to say that network performance has become paramount to the digital experience users receive, yet it is very hard to control and predict. Network performance is often impacted by traffic or routing dynamics, which makes performance conditions a fast-moving target and a daunting challenge for quality assurance.

Testing the network frequently increases the chances of knowing exactly where and when a threshold was crossed. Short intervals are especially important when dealing with intermittent issues. By providing testing frequency options that go down to per-second tests, Kentik significantly improves IT’s ability to troubleshoot with precision and speed, even for short events that are hard to detect and identify.
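The "when" part of that claim can be sketched the same way. Assuming a degradation that persists once it starts and probes on a fixed schedule (an idealized model, with a hypothetical function name and timestamps), the onset can only be bracketed between the last clean probe and the first bad one, so the timing uncertainty equals the test interval.

```python
import math


def onset_window(onset_s: float, test_interval_s: float, first_probe_s: float = 0.0):
    """Bracket the true onset between the last clean and first bad probe.

    Assumptions (illustrative model): probes run every `test_interval_s`
    seconds starting at `first_probe_s`, the onset is not earlier than the
    first probe, and the degradation persists once it has started.
    """
    if test_interval_s <= 0:
        raise ValueError("interval must be > 0")
    # Index of the first probe at or after the onset.
    k = math.ceil((onset_s - first_probe_s) / test_interval_s)
    first_bad = first_probe_s + k * test_interval_s
    last_clean = first_bad - test_interval_s
    return last_clean, first_bad


if __name__ == "__main__":
    onset = 754  # hypothetical: threshold crossed 754 s into the test run
    for interval_s in (300, 60, 1):
        clean, bad = onset_window(onset, interval_s)
        print(f"interval={interval_s:>3}s  onset is somewhere in ({clean:.0f}s, {bad:.0f}s]")
```

The shorter the interval, the narrower the bracket, which is what makes it easier to correlate a threshold crossing with a specific traffic or routing change.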

Higher trust in the network also means more cost-effective business models

When we announced support for per-second tests, we immediately heard interest from companies that use automation frameworks. Contrary to what one might think, network reliability is not only a determining factor for technical aspects; it is also an important factor in the overall business cost model.

Take automation as an example. It relies on constant communication between the control plane application and the clients executing the tasks. Instructions are sent continuously in real time. Every latency or loss spike must be identified so that network performance can be measured and profiled more accurately, which in turn allows the overall system to be better planned. The more reliable the communication between control and execution, the less redundancy and safety margin the overall system requires.

Our customers and prospects told us that more accurate monitoring of network performance would allow them to architect more efficiently, with business impact that extends to the viability of locations and grid concentration.

Synthetic monitoring without compromises

When you monitor infrequently, you are, in fact, back to blind conditions. So, Kentik wanted to provide customers with a synthetic solution that would allow them to monitor network performance as frequently as they need to derive real value.

Synthetic monitoring has been tainted by solutions that are ineffective because they force a compromise on what to test and how frequently. Cost is prohibitive even at long testing intervals, let alone sub-minute ones. Organizations end up “policing” their monitoring rather than concentrating on what needs to be done.

Kentik is committed to providing network observability that customers can rely on and afford. Kentik’s next-gen synthetic monitoring brought to market a solution without the usual test frequency and cost compromises.

With Kentik Synthetic Monitoring, IT teams can define high-frequency tests (per second, for example), and the pricing model permits it. Organizations can now perform continuous network performance monitoring to stay on top of digital experience and detect even subtle, intermittent degradations impacting their traffic. The result is higher assurance of the quality and reliability of their network services.